59% Of The Market Is Considering Deepseek

Author: Carlota | Posted: 25-02-01 21:37

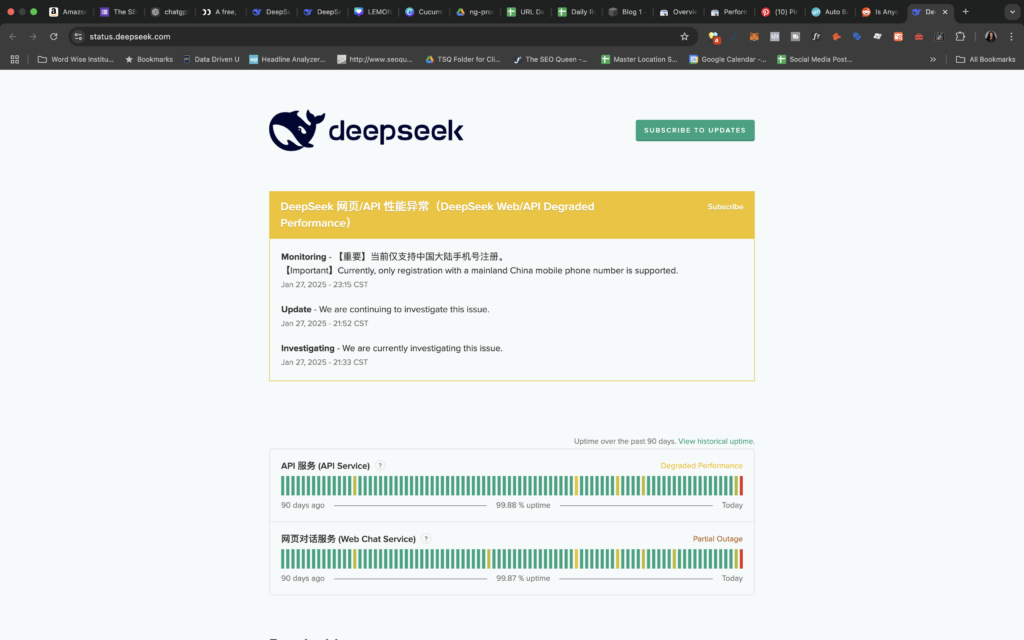

DeepSeek offers AI of comparable quality to ChatGPT but is completely free to use in chatbot form. The truly disruptive part is that we must set ethical guidelines to ensure AI is used for good.

To train the model, we needed a suitable problem set (the "training set" provided by this competition is too small for fine-tuning) with "ground-truth" solutions in ToRA format for supervised fine-tuning.

But I also read that if you specialize models to do less, you can make them great at it. This led me to "codegpt/deepseek-coder-1.3b-typescript": this particular model is very small in terms of parameter count, and it is based on a deepseek-coder model that was then fine-tuned using only TypeScript code snippets. If your machine doesn't run these LLMs well (unless you have an M1 or above, you're in this category), there is an alternative solution I've found. Ollama is essentially Docker for LLMs: it lets us quickly run various LLMs and host them locally behind standard completion APIs.

On 9 January 2024, they released two DeepSeek-MoE models (Base and Chat), each with 16B parameters (2.7B activated per token, 4K context length). On 27 January 2025, DeepSeek limited new user registration to Chinese mainland phone numbers, email, and Google login after a cyberattack slowed its servers.
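
The DeepSeek-MoE figures (16B total parameters, 2.7B activated per token, so roughly 17% of the weights do work for any given token) come from sparse expert routing: a gating network scores every expert for each token, and only the top-scoring few actually run. Below is a minimal sketch of a generic top-k router in plain Python. It is not DeepSeek-MoE's actual design, which adds its own refinements; the eight scalar "experts", `k=2`, and the gate scores are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    if not 0 < k <= len(gate_logits):
        raise ValueError("k must be between 1 and the number of experts")
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                gate_logits: Sequence[float], k: int) -> float:
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))


# Hypothetical toy setup: 8 scalar "experts" (expert s multiplies by s + 1), 2 active per token.
experts = [lambda x, s=s: (s + 1) * x for s in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

print(route_top_k(logits, k=2))  # experts 4 and 1 are chosen; the other 6 never run
print(moe_forward(1.0, experts, logits, k=2))
print(f"active fraction for DeepSeek-MoE: {2.7 / 16:.1%}")
```

The point of the design is that compute per token scales with `k`, not with the total number of experts, which is how a 16B-parameter model can run with only 2.7B parameters active.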

Finally, should leading American academic institutions continue their extremely close collaborations with researchers associated with the Chinese government? From what I've read, the primary driver of the cost savings was bypassing the expensive human labor costs associated with supervised training. These chips are quite large, and both NVIDIA and AMD need to recoup their engineering costs. So is NVIDIA going to lower its prices because of FP8 training costs? DeepSeek demonstrates that competitive models 1) do not need as much hardware to train or run inference, 2) can be open-sourced, and 3) can use hardware other than NVIDIA's (in this case, AMD's).

With the ability to seamlessly integrate multiple APIs, including OpenAI, Groq Cloud, and Cloudflare Workers AI, I've been able to unlock the full potential of these powerful AI models. Multiple different quantization formats are provided, and most users only need to pick and download a single file. No matter how much money we spend, in the end, the benefits go to everyday users.
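
To see why one quantized file is usually enough, and why lower-precision formats such as FP8 matter for cost, a rough rule of thumb is that weight storage scales with parameter count times bits per weight. The sketch below applies that arithmetic. It is an approximation that ignores file metadata and the per-block scale factors real quantization formats add, so actual downloads will come out somewhat larger.

```python
def approx_weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Rough weight-storage size in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores metadata and per-block scale/zero-point overhead,
    so real quantized files are somewhat larger than this estimate.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Example: a 1.3B-parameter model (like the TypeScript coder model) at different precisions.
for label, bits in [("FP16", 16), ("FP8 / 8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>12}: ~{approx_weight_size_gb(1.3e9, bits):.2f} GB")
```

Halving the bits per weight halves the storage (and the memory bandwidth needed to read it), which is why most users can pick a single 4-bit or 8-bit file that fits their machine.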

In short, DeepSeek feels very much like ChatGPT without all the bells and whistles. I haven't found much beyond that.

Real-world test: they tested GPT-3.5 and GPT-4 and found that GPT-4, when equipped with tools such as retrieval-augmented generation to access documentation, succeeded and "generated two new protocols using pseudofunctions from our database."

In 2023, High-Flyer started DeepSeek as a lab dedicated to researching AI tools, separate from its financial business.

Janus-Pro is a novel autoregressive framework, a unified MLLM for both multimodal understanding and generation, built on DeepSeek-LLM-1.5b-base/DeepSeek-LLM-7b-base. It addresses the limitations of previous approaches by decoupling visual encoding into separate pathways for understanding and generation, while still using a single, unified transformer architecture for processing. This decoupling not only alleviates the conflict between the visual encoder's roles in understanding and generation but also enhances the framework's flexibility. Janus-Pro surpasses previous unified models and matches or exceeds the performance of task-specific models.

AI's future isn't about who builds the best models or applications; it's about who controls the computational bottleneck.
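
The decoupling idea can be illustrated with a toy: the same image goes through one encoder when the task is understanding and a different one when the task is generation, but both pathways emit vectors of the same width that a single shared backbone consumes. This is a hypothetical, heavily simplified sketch (a hand-written "encoder", a nearest-codebook quantizer for the generation path, and mean pooling standing in for the transformer), not Janus-Pro's actual components.

```python
from typing import List, Sequence

Vector = List[float]


def understanding_encoder(patches: Sequence[Vector]) -> List[Vector]:
    """Hypothetical understanding path: continuous features (here, a fixed scaling)."""
    return [[0.5 * v for v in p] for p in patches]


# Hypothetical codebook for the generation path's discrete tokenizer.
CODEBOOK: List[Vector] = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def generation_tokenizer(patches: Sequence[Vector]) -> List[int]:
    """Hypothetical generation path: map each patch to its nearest codebook id."""
    def dist2(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [min(range(len(CODEBOOK)), key=lambda i: dist2(p, CODEBOOK[i])) for p in patches]


def shared_backbone(embeddings: Sequence[Vector]) -> Vector:
    """Stand-in for the single unified transformer: mean-pool the sequence."""
    n = len(embeddings)
    return [sum(col) / n for col in zip(*embeddings)]


def run(patches: Sequence[Vector], task: str) -> Vector:
    """Route the image through a task-specific encoder, then the shared backbone."""
    if task == "understand":
        embeddings = understanding_encoder(patches)
    elif task == "generate":
        # Simplification: reuse codebook vectors as the token embeddings.
        embeddings = [CODEBOOK[i] for i in generation_tokenizer(patches)]
    else:
        raise ValueError(f"unknown task: {task}")
    return shared_backbone(embeddings)


image = [[0.9, 0.1], [0.2, 0.8]]  # two toy 2-D "patches"
print(run(image, "understand"))  # pooled continuous features
print(run(image, "generate"))    # pooled codebook embeddings
```

Each encoder can be tuned for its own job (continuous detail for understanding, discrete tokens for generation) without compromising the other, while the backbone stays shared.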

The above covers best practices for providing the model with its context, along with the prompt-engineering techniques that the authors suggested have a positive effect on results. The original GPT-4 was rumored to have around 1.7T parameters. From steps 1 and 2, you should now have a hosted LLM running. By incorporating 20 million Chinese multiple-choice questions, DeepSeek LLM 7B Chat demonstrates improved scores on MMLU, C-Eval, and CMMLU.

If we choose to compete, we can still win, and if we do, we will have a Chinese company to thank. We could, for very logical reasons, double down on defensive measures, such as massively expanding the chip ban and imposing a permission-based regulatory regime on chips and semiconductor equipment that mirrors the E.U.'s approach to tech; alternatively, we could realize that we have real competition and actually give ourselves permission to compete. I mean, it's not like they invented the car.
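
Once a model is hosted locally with Ollama, it can be queried over HTTP. The sketch below assumes Ollama's default address (`http://localhost:11434`) and its `/api/generate` endpoint with streaming turned off. The prompt layout (documentation context first, then the question) and the example model name are illustrative assumptions, not the authors' exact setup.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumes the default port has not been changed).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_prompt(context: str, question: str) -> str:
    """Assumed layout: put the reference context before the question."""
    return f"Use the following context to answer.\n\n{context}\n\nQuestion: {question}"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request; needs a running Ollama server with the model already pulled."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


# Build (but do not send) a request, using a hypothetical model name.
demo = build_request("example-model", build_prompt("<documentation snippet>", "<your question>"))
print(demo.get_method(), demo.full_url)
```

With the server running, `generate("<model tag from ollama list>", prompt)` returns the model's text. Keeping the request builder separate from the network call makes the payload easy to inspect before anything is sent.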

