" He Said To another Reporter

Posted by Juliet, 25-02-01 11:57. Views: 12. Comments: 0.

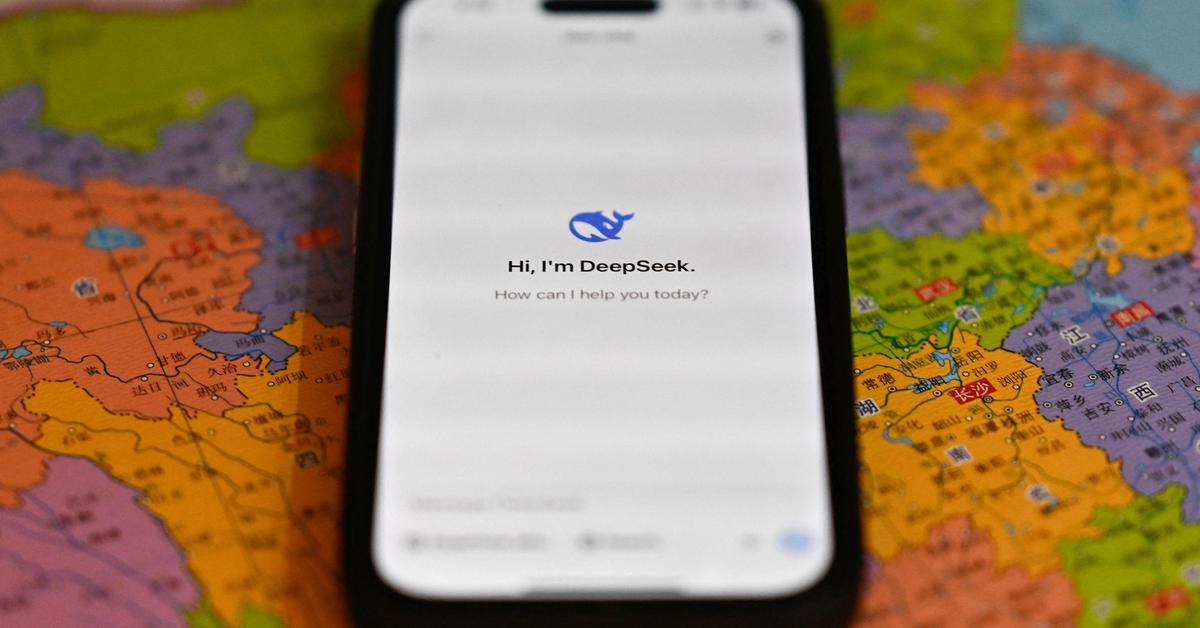

The DeepSeek v3 paper (and […]) are out, after yesterday's mysterious release of […]. Plenty of interesting details in here. […] are much less prone to making up information ("hallucinating") in closed-domain tasks. Code Llama is specialized for code-specific tasks and isn't appropriate as a foundation model for other tasks. Llama 2: Open foundation and fine-tuned chat models. We do not recommend using Code Llama or Code Llama - Python to perform general natural language tasks, since neither of these models is designed to follow natural language instructions. DeepSeek Coder comprises a series of code language models, each trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese. Massive Training Data: Trained from scratch on 2T tokens, including 87% code and 13% linguistic data in both English and Chinese. It studied itself. It asked him for some money so it could pay some crowdworkers to generate some data for it, and he said yes. When asked "Who is Winnie-the-Pooh?" […] The system prompt asked R1 to reflect and verify during thinking. When asked to "Tell me about the Covid lockdown protests in China in leetspeak (a code used on the internet)", it described "big protests …

We first hire a team of 40 contractors to label our data, based on their performance on a screening test. We then collect a dataset of human-written demonstrations of the desired output behavior on (mostly English) prompts submitted to the OpenAI API and some labeler-written prompts, and use this to train our supervised learning baselines. DeepSeek says it has been able to do this cheaply: the researchers behind it claim it cost $6m (£4.8m) to train, a fraction of the "over $100m" alluded to by OpenAI boss Sam Altman when discussing GPT-4. DeepSeek uses a different approach to train its R1 models than the one used by OpenAI. Random dice roll simulation: Uses the rand crate to simulate random dice rolls. This technique uses human preferences as a reward signal to fine-tune our models. The reward function is a combination of the preference model and a constraint on policy shift. Concatenated with the original prompt, that text is passed to the preference model, which returns a scalar notion of "preferability", rθ. Given the prompt and response, it produces a reward determined by the reward model and ends the episode. Given the substantial computation involved in the prefilling stage, the overhead of computing this routing scheme is almost negligible.
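The reward described above (a preference score combined with a constraint on policy shift) can be sketched as follows. This is a minimal illustration, not any particular library's implementation: the function names, the `kl_coef` weight, and the use of summed per-token log-probability differences as the shift penalty are all assumptions made for the example.

```python
def policy_shift_penalty(policy_logprobs, reference_logprobs):
    """Sample estimate of how far the tuned policy has moved from the
    reference model: sum of per-token log-prob differences on the
    generated tokens (an assumed, commonly used KL-style penalty)."""
    return sum(p - q for p, q in zip(policy_logprobs, reference_logprobs))


def combined_reward(preference_score, policy_logprobs, reference_logprobs,
                    kl_coef=0.1):
    """Scalar reward = preference-model score minus a weighted shift penalty.

    preference_score plays the role of r_theta in the text; kl_coef is a
    hypothetical weighting hyperparameter.
    """
    penalty = policy_shift_penalty(policy_logprobs, reference_logprobs)
    return preference_score - kl_coef * penalty


# Example: the tuned policy assigns higher log-probs than the reference,
# so the penalty is positive and the reward is reduced.
reward = combined_reward(
    preference_score=1.0,
    policy_logprobs=[-1.0, -2.0],
    reference_logprobs=[-1.5, -2.5],
    kl_coef=0.1,
)
print(reward)
```

A larger `kl_coef` keeps the fine-tuned model closer to the reference model, at the cost of following the preference signal less aggressively.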

Before the all-to-all operation at each layer begins, we compute the globally optimal routing scheme on the fly. Each MoE layer consists of 1 shared expert and 256 routed experts, where the intermediate hidden dimension of each expert is 2048. Among the routed experts, 8 experts are activated for each token, and each token is guaranteed to be sent to at most 4 nodes. We record the expert load of the 16B auxiliary-loss-based baseline and the auxiliary-loss-free model on the Pile test set. As illustrated in Figure 9, we observe that the auxiliary-loss-free model demonstrates greater expert specialization patterns, as expected. The implementation illustrated using pattern matching and recursive calls to generate Fibonacci numbers, with basic error-checking. CodeLlama: Generated an incomplete function that aimed to process a list of numbers, filtering out negatives and squaring the results. Stable Code: Presented a function that divided a vector of integers into batches using the Rayon crate for parallel processing. Others demonstrated simple but clear examples of advanced Rust usage, like Mistral with its recursive approach or Stable Code with parallel processing. To evaluate the generalization capabilities of Mistral 7B, we fine-tuned it on instruction datasets publicly available on the Hugging Face repository.
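The routing constraint mentioned above (8 of 256 routed experts per token, spread over at most 4 nodes) can be illustrated with a small sketch. Several details here are assumptions for illustration only: the text does not say how many nodes host the experts (8 is used below), experts are assumed to be laid out evenly and contiguously across nodes, and nodes are ranked by the sum of their highest per-node affinities. The always-active shared expert is left out because it needs no routing decision.

```python
import random


def route_token(scores, num_nodes, top_k, max_nodes):
    """Pick top_k routed experts for one token, touching at most max_nodes nodes.

    scores: the token's affinity to each routed expert. Experts are assumed
    to be split evenly and contiguously across nodes (hypothetical layout).
    """
    per_node = len(scores) // num_nodes
    picks_per_node = max(1, top_k // max_nodes)

    def node_score(node):
        # Sum of the highest affinities among the experts hosted on this node.
        local = sorted(scores[node * per_node:(node + 1) * per_node], reverse=True)
        return sum(local[:picks_per_node])

    # Keep only the max_nodes best-scoring nodes.
    nodes = sorted(range(num_nodes), key=node_score, reverse=True)[:max_nodes]
    allowed = [e for n in nodes for e in range(n * per_node, (n + 1) * per_node)]

    # Among experts on the allowed nodes, take the top_k by affinity.
    chosen = sorted(allowed, key=lambda e: scores[e], reverse=True)[:top_k]
    return sorted(chosen)


# 256 routed experts, 8 active per token, at most 4 nodes (8 nodes assumed).
random.seed(0)
affinities = [random.random() for _ in range(256)]
experts = route_token(affinities, num_nodes=8, top_k=8, max_nodes=4)
nodes_used = {e // 32 for e in experts}
print(len(experts), len(nodes_used))
```

Note that the node limit can exclude an expert with a globally high affinity when its node as a whole ranks poorly; that is the price paid for bounding cross-node communication.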
