Most People Won't Ever Be Great at DeepSeek. Read Why

Author: Kia | Posted: 25-02-03 12:08

It's also instructive to look at the chips DeepSeek is currently reported to have. US export controls have severely curtailed the ability of Chinese tech firms to compete on AI in the Western way, that is, by scaling up indefinitely through buying more chips and training for longer. As a result, most Chinese companies have focused on downstream applications rather than building their own models. In hindsight, we should have devoted more time to manually checking the outputs of our pipeline, rather than rushing ahead to conduct our investigations using Binoculars.

We also conduct an experiment in which all tensors associated with Dgrad (the backward-pass GEMM that computes activation gradients) are quantized block-wise. Specifically, block-wise quantization of activation gradients leads to model divergence on an MoE model with roughly 16B total parameters, trained on around 300B tokens. A simple strategy is to apply block-wise quantization per 128x128 elements, the same way we quantize the model weights. We present the training curves in Figure 10 and show that the relative loss error, compared with a BF16 baseline, remains below 0.25% with our high-precision accumulation and fine-grained quantization strategies. Although our tile-wise fine-grained quantization effectively mitigates the error introduced by feature outliers, it requires different groupings for activation quantization, i.e., 1x128 in the forward pass and 128x1 in the backward pass.
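
The tile-wise versus block-wise grouping described above can be illustrated with a minimal pure-Python sketch. It assumes a symmetric 8-bit integer grid (127 levels per sign) as a stand-in for FP8, which actually has a different, non-uniform value grid, and the helper names (`quantize_dequantize`, `quantize_tiles`, `relative_error`) are hypothetical. Each group gets one scale derived from its maximum absolute value, so a single outlier only degrades the group it lands in: with 1x128 tiles that is one 128-element row segment, while with 128x128 blocks it is 16,384 values.

```python
import math
import random

# Assumption: a symmetric 8-bit integer grid stands in for FP8 (E4M3 differs in detail).
QMAX = 127


def quantize_dequantize(values):
    """Scale one group by its max |value|, round to the QMAX grid, and scale back."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return list(values)
    scale = amax / QMAX
    return [round(v / scale) * scale for v in values]


def quantize_tiles(matrix, tile_rows, tile_cols):
    """Quantize a 2-D list of lists using one scale per (tile_rows x tile_cols) group."""
    rows, cols = len(matrix), len(matrix[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, tile_rows):
        for c0 in range(0, cols, tile_cols):
            coords = [(r, c)
                      for r in range(r0, min(r0 + tile_rows, rows))
                      for c in range(c0, min(c0 + tile_cols, cols))]
            deq = quantize_dequantize([matrix[r][c] for r, c in coords])
            for (r, c), v in zip(coords, deq):
                out[r][c] = v
    return out


def relative_error(ref, approx):
    """Frobenius-norm relative error ||ref - approx|| / ||ref||."""
    num = sum((a - b) ** 2 for ra, rb in zip(ref, approx) for a, b in zip(ra, rb))
    den = sum(a ** 2 for ra in ref for a in ra)
    return math.sqrt(num / den)


if __name__ == "__main__":
    random.seed(0)
    n = 256
    acts = [[random.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    acts[3][5] = 500.0  # inject a single feature outlier
    tile = quantize_tiles(acts, 1, 128)     # 1x128 tiles (forward-pass grouping)
    block = quantize_tiles(acts, 128, 128)  # 128x128 blocks (weight-style grouping)
    print(f"1x128 tiles:    {relative_error(acts, tile):.4%}")
    print(f"128x128 blocks: {relative_error(acts, block):.4%}")
```

Calling `quantize_tiles(acts, 128, 1)` gives the 128x1 grouping used for the backward pass. The error printed here is a per-tensor reconstruction error, not the training-loss error behind the 0.25% figure, so the two numbers are not directly comparable.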

Evaluation details are here. Check out the GitHub repository here. There are currently open issues with CodeGPT on GitHub; the problem may have been fixed by now. So, you have some number of threads running simulations in parallel, and each of them queues up evaluations, which are themselves evaluated in parallel by a separate thread pool. You should get the output "Ollama is running". Massive Training Data: Trained from scratch on 2T tokens, including 87% code and 13% natural-language data in both English and Chinese. For years, High-Flyer had been stockpiling GPUs and building Fire-Flyer supercomputers to analyze financial data. For reference, this level of capability is said to require clusters of closer to 16K GPUs, while the ones being brought up today are more like 100K GPUs.
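
The nested parallelism described above (simulation threads queuing evaluations onto a separate thread pool) can be sketched with the standard library's `concurrent.futures`. This is a minimal sketch under assumptions: `evaluate` is a hypothetical placeholder for whatever the real evaluation is (for example a model call), and the pool sizes are arbitrary.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def evaluate(position):
    """Hypothetical evaluation; stands in for e.g. a call to a model server."""
    return sum(position) / len(position)


def run_simulation(sim_id, eval_pool, steps=5):
    """One simulation: generate positions and queue each evaluation on the shared eval pool."""
    rng = random.Random(sim_id)  # per-simulation RNG so runs are reproducible
    futures = [eval_pool.submit(evaluate, [rng.random() for _ in range(4)])
               for _ in range(steps)]
    # Block this simulation thread until all of its evaluations have finished.
    return sim_id, [f.result() for f in futures]


def run_all(num_sims=4, sim_workers=4, eval_workers=8):
    """Run simulations on one pool while their evaluations run on a separate pool."""
    with ThreadPoolExecutor(max_workers=eval_workers) as eval_pool, \
         ThreadPoolExecutor(max_workers=sim_workers) as sim_pool:
        sim_futures = [sim_pool.submit(run_simulation, i, eval_pool)
                       for i in range(num_sims)]
        return dict(f.result() for f in sim_futures)


if __name__ == "__main__":
    for sim_id, scores in sorted(run_all().items()):
        print(sim_id, [round(s, 3) for s in scores])
```

Keeping evaluations on their own pool is what prevents deadlock: if simulations and evaluations shared one pool, every worker could end up occupied by a simulation blocked in `f.result()`, waiting on evaluations that can never be scheduled. Because of CPython's GIL, threads only help here when the evaluation is I/O-bound, such as a request to a local model server.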

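To check for the "Ollama is running" output programmatically rather than in a browser, a small standard-library helper can request the server's root URL. This sketch assumes Ollama's default address `http://localhost:11434`; the function name `ollama_is_running` is hypothetical.

```python
import urllib.request


def ollama_is_running(base_url="http://localhost:11434", timeout=2.0):
    """Return True if the server's root endpoint answers with 'Ollama is running'."""
    try:
        with urllib.request.urlopen(base_url + "/", timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except OSError:
        # Connection refused, timeout, DNS failure, or HTTP error
        # (URLError and HTTPError are both subclasses of OSError).
        return False
    return "Ollama is running" in body


if __name__ == "__main__":
    print("Ollama is running" if ollama_is_running() else "Ollama is not reachable")
```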
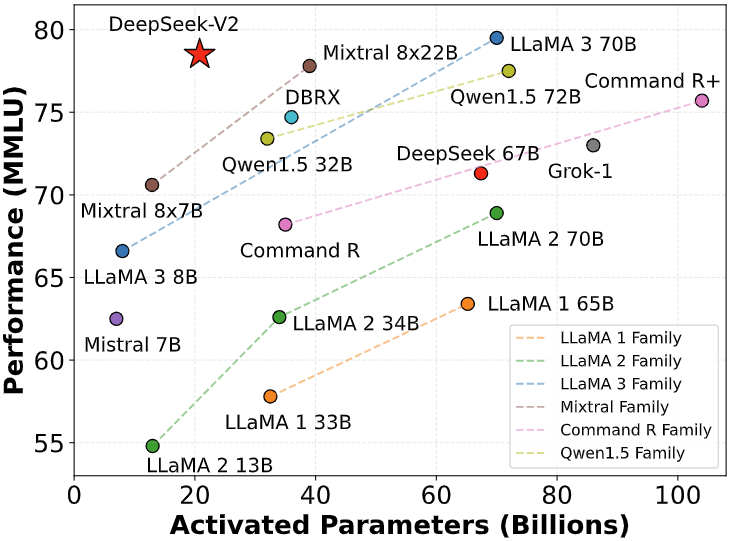

Within each role, authors are listed alphabetically by first name. Founded in 2015, the hedge fund quickly rose to prominence in China, becoming the first quant hedge fund to raise over 100 billion RMB (around $15 billion). On January 20, DeepSeek, a relatively unknown AI research lab from China, released an open-source model that quickly became the talk of the town in Silicon Valley. DeepSeek, in contrast, embraces open source, allowing anyone to peek under the hood and contribute to its development. Researchers with University College London, IDEAS NCBR, the University of Oxford, New York University, and Anthropic have built BALROG, a benchmark for vision-language models that tests their intelligence by seeing how well they do on a suite of text-adventure games. "Unlike many Chinese AI companies that rely heavily on access to advanced hardware, DeepSeek has focused on maximizing software-driven resource optimization," explains Marina Zhang, an associate professor at the University of Technology Sydney who studies Chinese innovation. According to benchmarks, the 7B and 67B DeepSeek Chat variants have recorded strong performance in coding, mathematics, and Chinese comprehension.

Points 2 and 3 are mainly about financial resources that I don't have available at the moment. DeepSeek-V3 was actually the real innovation and what should have made people take notice a month ago (we certainly did). Liang has become the Sam Altman of China, an evangelist for AI technology and investment in new research. DeepSeek's success points to an unintended consequence of the tech cold war between the US and China. It was quickly dubbed the "Pinduoduo of AI", and other major tech giants such as ByteDance, Tencent, Baidu, and Alibaba began to cut the prices of their AI models to compete with the company.
