Technique for Maximizing DeepSeek AI

Posted by Palma Sandlin on 25-02-04 15:16

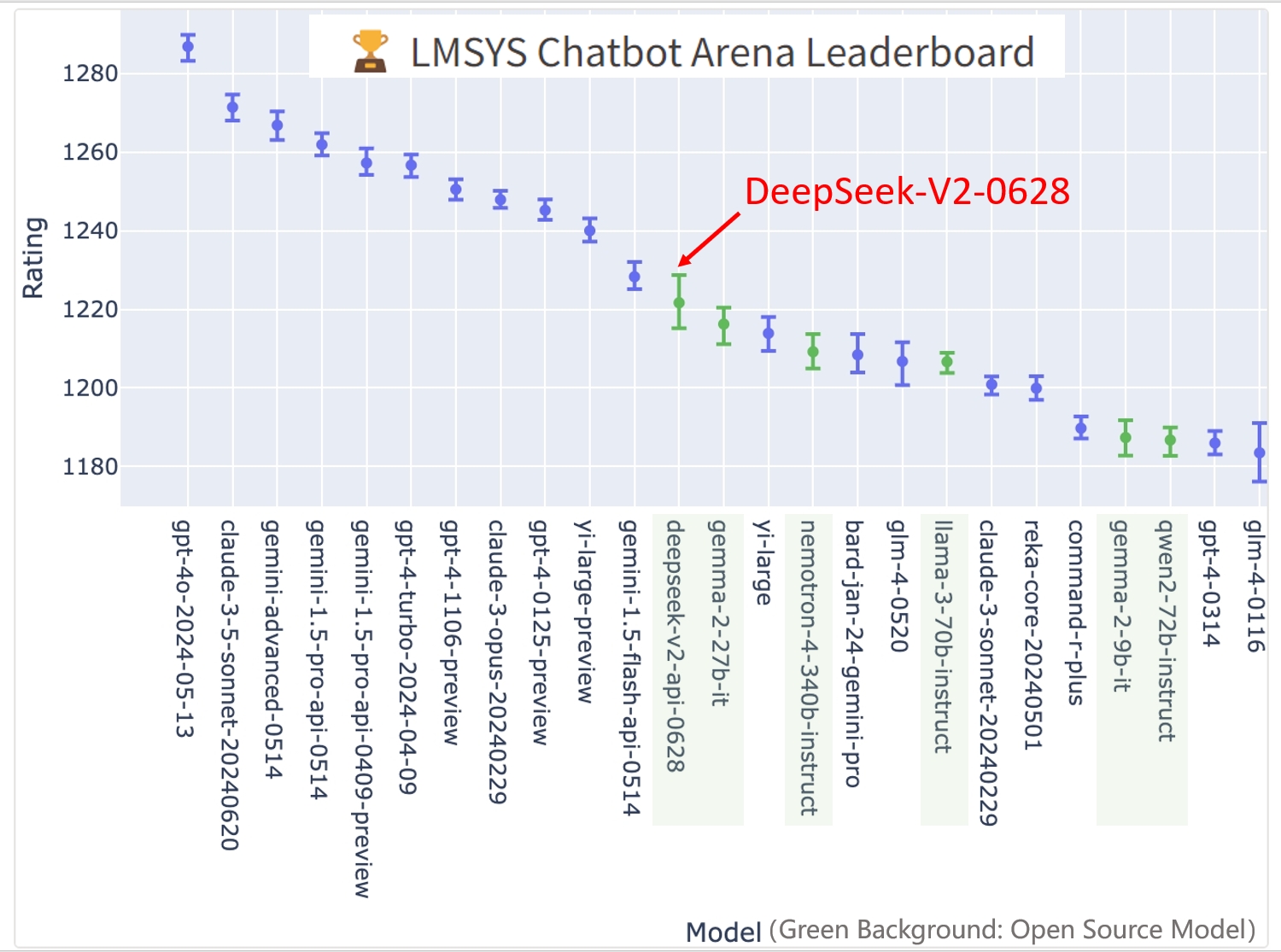

What happens now that that’s stopped for US users? But what happens if the CCP stands pat? Here, we see a clear separation between Binoculars scores for human and AI-written code across all token lengths, with the expected result that human-written code scores higher than AI-written code. It will be more telling to see how long DeepSeek holds its top position over time. The AUC values have improved compared with our first attempt, indicating that only a limited amount of surrounding code should be added, but more research is needed to identify this threshold. Next, we set out to investigate whether using different LLMs to write code would result in differences in Binoculars scores. Before we could start using Binoculars, we needed to create a sizeable dataset of human and AI-written code containing samples of various token lengths. Because of this difference in scores between human and AI-written text, classification can be carried out by choosing a threshold and categorising text that falls above or below the threshold as human or AI-written, respectively. However, above 200 tokens, the opposite is true. DeepSeek Chat has two variants, with 7B and 67B parameters, which are trained on a dataset of 2 trillion tokens, says the maker.
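The threshold rule and the AUC comparison described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the post's actual pipeline. Binoculars scores are taken as already computed, so nothing here calls a model. Scores at or above the threshold are labelled human-written, following the post's description. AUC uses the rank-based (Mann-Whitney) formulation and is computed per token-length bucket. The `Sample` tuple layout, the function names, the bucket edges and the example scores are all hypothetical illustrations.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical sample layout: (binoculars_score, token_length, label),
# where label is either "human" or "ai".
Sample = Tuple[float, int, str]


def classify(score: float, threshold: float) -> str:
    """Label a sample: at or above the threshold counts as human-written."""
    return "human" if score >= threshold else "ai"


def auc(human_scores: Sequence[float], ai_scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random human sample outscores a
    random AI sample (ties count as half), i.e. the normalised Mann-Whitney U."""
    if not human_scores or not ai_scores:
        raise ValueError("need at least one sample of each class")
    wins = 0.0
    for h in human_scores:
        for a in ai_scores:
            if h > a:
                wins += 1.0
            elif h == a:
                wins += 0.5
    return wins / (len(human_scores) * len(ai_scores))


def auc_by_length(samples: Sequence[Sample], edges: Sequence[int]) -> Dict[str, float]:
    """Compute AUC separately for each token-length bucket [lo, hi)."""
    result: Dict[str, float] = {}
    for lo, hi in zip(edges, edges[1:]):
        bucket = [s for s in samples if lo <= s[1] < hi]
        human = [score for score, _, label in bucket if label == "human"]
        ai = [score for score, _, label in bucket if label == "ai"]
        if human and ai:  # skip buckets that lack either class
            result[f"{lo}-{hi}"] = auc(human, ai)
    return result


if __name__ == "__main__":
    # Made-up scores for illustration only; these are not results from the post.
    data: List[Sample] = [
        (0.95, 120, "human"), (1.02, 150, "human"), (0.88, 180, "human"),
        (0.71, 110, "ai"), (0.80, 140, "ai"), (0.90, 190, "ai"),
    ]
    print(classify(0.95, threshold=0.85))  # human
    print(auc_by_length(data, edges=[0, 200]))
```

Bucketing by token length matters here. An AUC near 1.0 in one bucket and near 0.0 in another would mean that the score ordering flips, as the post reports for samples above 200 tokens. A single global threshold would then misclassify one of the two ranges.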

DeepSeek also says that it developed the chatbot for less than $5.6 million, which, if true, is far lower than the hundreds of millions of dollars spent by U.S. Lyu Hongwei, a 38-year-old entrepreneur from north China’s Hebei Province, has launched three stores on Alibaba International, each generating over 100 million yuan (13.7 million U.S. dollars). Its CEO Liang Wenfeng previously co-founded one of China’s top hedge funds, High-Flyer, which focuses on AI-driven quantitative trading. If there’s one thing that Jaya Jagadish is keen to remind me of, it’s that advanced AI and data center technology aren’t just lofty concepts anymore: they’re … One was in German, and the other in Latin. As our experience shows, poor-quality data can produce results that lead you to incorrect conclusions. Empirical results show that ML-Agent, built on GPT-4, leads to further improvements. Results may vary, but images provided by the company show serviceable pictures produced by the system. There is a risk that Chinese regulations affect politically sensitive content, which can lead to biases in some data. And you have probably heard that export controls have been in the news recently.

Code LLMs have emerged as a specialised research field, with notable studies devoted to enhancing models’ coding capabilities by fine-tuning pre-trained models. We had also found that using LLMs to extract functions wasn’t particularly reliable, so we changed our approach to function extraction to use tree-sitter, a code parsing tool that can programmatically extract functions from a file. Unslow fine-tuning for AI and LLMs. Did the upstart Chinese tech company DeepSeek copy ChatGPT to build the artificial intelligence technology that shook Wall Street this week? The ability to build cutting-edge AI isn’t limited to a select cohort of the San Francisco in-group. To investigate this, we tested three models of different sizes, namely DeepSeek Coder 1.3B, IBM Granite 3B and CodeLlama 7B, using datasets containing Python and JavaScript code. “On the Concerns of Developers When Using GitHub Copilot” is an interesting new paper. In the same way, Chinese AI developers use them to ensure their agents toe the Communist Party line. Reports suggest the Cyberspace Administration of China (CAC) is enforcing a strict auditing process that has chatbot developers waiting months and adjusting their models multiple times before being given the all-clear to release them for use.
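The function-extraction step can be illustrated with a small parser-based sketch. The post uses tree-sitter. To keep this example dependency-free, it swaps in Python’s standard-library `ast` module instead, so it only handles Python files. Tree-sitter grammars also cover JavaScript. The `extract_functions` name is hypothetical, and this is not the post’s actual extraction code.

```python
import ast
from typing import List, Tuple


def extract_functions(source: str) -> List[Tuple[str, str]]:
    """Return (name, source_text) for every function or method in `source`.

    A stand-in for a tree-sitter query: it uses the stdlib `ast` parser,
    so it only understands Python source.
    """
    tree = ast.parse(source)
    functions: List[Tuple[str, str]] = []
    # ast.walk visits every node, including methods nested inside classes.
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # get_source_segment slices the node's exact text span (Python 3.8+).
            # The slice starts at `def`, so decorators are not included.
            segment = ast.get_source_segment(source, node)
            if segment is not None:
                functions.append((node.name, segment))
    return functions


if __name__ == "__main__":
    code = (
        "def add(a, b):\n"
        "    return a + b\n"
        "\n"
        "class Worker:\n"
        "    async def run(self):\n"
        "        pass\n"
    )
    for name, text in extract_functions(code):
        print(name, "->", text.splitlines()[0])
```

The advantage over prompting an LLM is determinism. The parser either returns the exact source span of each function or raises a `SyntaxError`, so it never returns a paraphrased or truncated function body.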

Cody is an AI coding assistant that offers autocomplete suggestions, meant to significantly speed up the coding process. There are three camps here: 1) senior managers who have no clue about AI coding assistants but think they can “remove some s/w engineers and cut costs with AI”; 2) some old-guard coding veterans who say “AI will never replace the coding skills I acquired in 20 years”; and 3) some enthusiastic engineers who are embracing AI for absolutely everything: “AI will empower my career…” I believe the answer is pretty clearly “maybe not, but in the ballpark”. In general, it is unclear where all this hysteria comes from: the stories about DeepSeek outperforming top models are pure marketing. And if you add everything up, it turns out that DeepSeek invested an amount in training its model that is quite comparable to what Facebook invested in Llama. Yes, for now DeepSeek’s main achievement is very cheap model inference. Open-sourcing the new LLM for public research, DeepSeek AI reported that its DeepSeek Chat is significantly better than Meta’s Llama 2-70B in various fields. It is listed as the top-1 LLM on the BigCode Leaderboard in terms of win rate; the official results will be released later.
