Methods to Make Your DeepSeek AI Look Amazing in 5 Days

Page information

Author: Tami, posted 25-02-06 08:20, 4 views, 1 comment

Study author Stig Hebbelstrup Rye Rasmussen of Aarhus University and his colleagues explored whether computational neural networks - algorithms that mimic the structure and function of human brains - can predict a person's political ideology based on a single photo alone. "Same prompt. Same everything," the author writes. DeepSeek's claim that its model was made at a fraction of the cost of its rivals has rocked the AI industry. A higher number of experts allows scaling up to larger models without increasing computational cost. Over the past year, Mixture of Experts (MoE) models have surged in popularity, fueled by powerful open-source models like DBRX, Mixtral, DeepSeek, and many more. Compared to dense models, MoEs provide more efficient training for a given compute budget. Assuming we can do nothing to stop the proliferation of highly capable models, the best path forward is to use them. Each transformer block contains an attention block and a dense feed forward network (Figure 1, Subfigure B). These transformer blocks are stacked such that the output of one transformer block becomes the input of the next block.


The final output goes through a fully connected layer and softmax to obtain probabilities for the next token to output. The router outputs are then used to weight expert outputs to give the final output of the MoE layer. Hoffman said that while DeepSeek might encourage American companies to pick up the pace and share their plans sooner, the new revelations do not suggest that large models are a bad investment. Similarly, when choosing top k, a lower top k during training results in smaller matrix multiplications, leaving free computation on the table if communication costs are large enough. The gating network, typically a linear feed forward network, takes in each token and produces a set of weights that determine which tokens are routed to which experts. The sparsity in MoEs that allows for greater computational efficiency comes from the fact that a particular token will only be routed to a subset of experts. The research community and the stock market will need some time to adjust to this new reality. Today has seen hundreds of thousands of dollars wiped off US market tech stocks by the launch of DeepSeek, the latest Chinese AI that threatens US dominance in the sector. It quickly overtook OpenAI's ChatGPT as the most-downloaded free iOS app in the US, and caused chip-making firm Nvidia to lose almost $600bn (£483bn) of its market value in one day - a new US stock market record.
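The routing described above (a linear gating network scores the experts, each token goes only to its top k experts, and the router weights mix the expert outputs) can be sketched in a few lines. This is a minimal illustration in plain Python, not any particular model's implementation. The names `moe_layer` and `make_expert` are hypothetical, the experts are toy scaling functions, and normalizing the scores with a softmax over only the selected experts is one common choice that is assumed here.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def make_expert(scale):
    """Hypothetical toy expert: scales its input by a constant."""
    return lambda token: [scale * v for v in token]

def moe_layer(token, gate_weights, experts, top_k):
    """Route one token to its top_k experts and mix their outputs."""
    # Gating network: a single linear layer producing one score per expert.
    scores = matvec(gate_weights, token)
    # Keep only the top_k highest-scoring experts (this is the sparsity).
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    chosen = order[:top_k]
    # Assumption: normalize over the chosen experts so weights sum to 1.
    weights = softmax([scores[i] for i in chosen])
    output = [0.0] * len(token)
    for w, i in zip(weights, chosen):
        expert_out = experts[i](token)  # unchosen experts are never evaluated
        output = [o + w * e for o, e in zip(output, expert_out)]
    return output, chosen, weights

# Four toy experts, one 2-dimensional token, top-2 routing.
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
output, chosen, weights = moe_layer([1.0, 2.0], gate_weights, experts, top_k=2)
print(chosen, weights, output)
```

Only two of the four experts run for this token, which is where the compute savings relative to a dense layer of the same total size come from.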

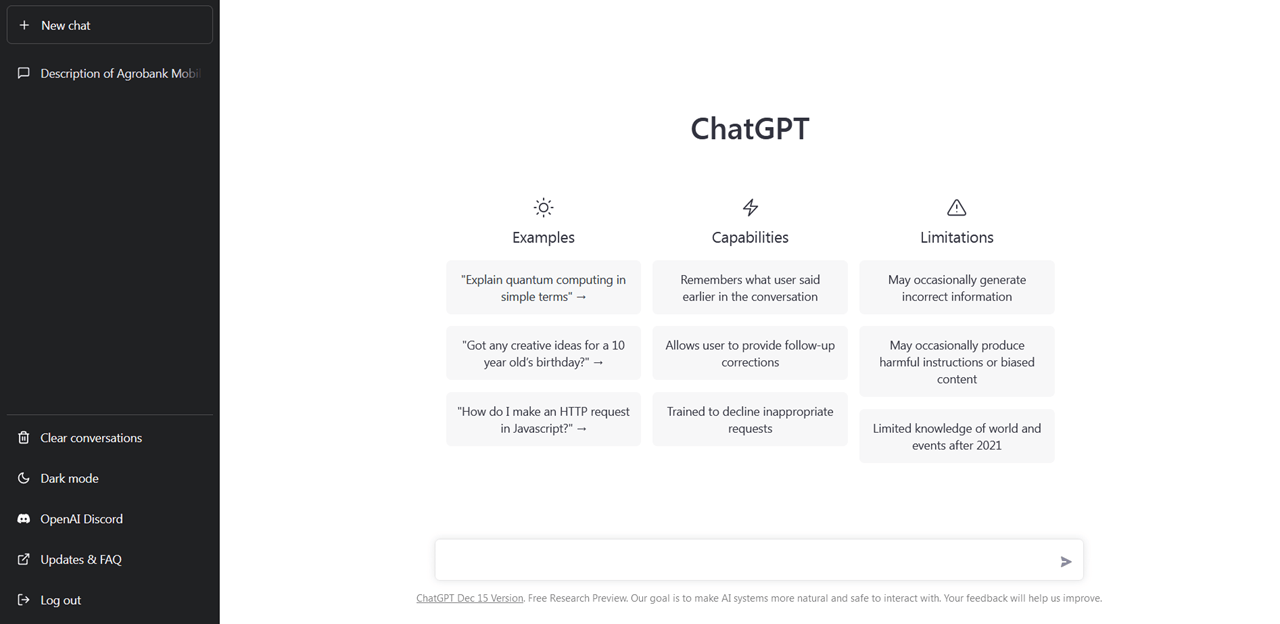

Regardless of how this plays out in the coming days and weeks, one thing is certain: DeepSeek, in a few short weeks, has singlehandedly shifted the course of AI development. Clearly people want to try it out too: DeepSeek is currently topping the Apple App Store downloads chart, ahead of ChatGPT. DeepSeek-V2 is a state-of-the-art language model that uses a transformer architecture combining the innovative MoE technique described above with MLA (Multi-Head Latent Attention), a structure devised by DeepSeek researchers. An MoE model is a model architecture that uses multiple expert networks to make predictions. The key point the researchers make is that if policymakers move toward more punitive liability schemes for certain harms of AI (e.g., misaligned agents, or things being misused for cyberattacks), then that could kickstart a lot of valuable innovation in the insurance industry. I've seen a Reddit post stating that the model sometimes thinks it is ChatGPT. Does anyone here know what to make of that?

Billionaire and Silicon Valley venture capitalist Marc Andreessen describes the latest model as 'AI's Sputnik moment' in a post on X, referring to the Cold War crisis sparked by the USSR's launch of a satellite ahead of the US. Previously little-known Chinese startup DeepSeek has dominated headlines and app charts in recent days thanks to its new AI chatbot, which sparked a global tech sell-off that wiped billions off Silicon Valley's biggest companies and shattered assumptions of America's dominance of the tech race. In December 2024, OpenAI launched several significant features as part of its "12 Days of OpenAI" event, which started on December 5. It announced Sora, a text-to-video model intended to create realistic videos from text prompts, available to ChatGPT Plus and Pro users. Google unveils invisible 'watermark' for AI-generated text. To alleviate this problem, a load balancing loss is introduced that encourages even routing to all experts.
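The text does not say which load balancing loss is meant. As an illustration only, the sketch below uses the auxiliary loss popularized by the Switch Transformer for top-1 routing: the number of experts times the sum, over experts, of the fraction of tokens routed to that expert multiplied by the mean router probability for that expert. The function name `load_balancing_loss` and the example probabilities are hypothetical.

```python
def load_balancing_loss(router_probs, num_experts):
    """Switch-Transformer-style auxiliary loss for top-1 routing.

    router_probs: one list of per-expert probabilities for each token.
    Returns num_experts * sum_i(f_i * p_i), which equals 1.0 when routing
    is perfectly uniform and grows as routing concentrates on few experts.
    """
    num_tokens = len(router_probs)
    # f[i]: fraction of tokens whose highest-probability expert is i.
    counts = [0] * num_experts
    for probs in router_probs:
        best = max(range(num_experts), key=lambda i: probs[i])
        counts[best] += 1
    f = [c / num_tokens for c in counts]
    # p[i]: mean router probability assigned to expert i across tokens.
    p = [sum(probs[i] for probs in router_probs) / num_tokens
         for i in range(num_experts)]
    return num_experts * sum(fi * pi for fi, pi in zip(f, p))

# Two tokens split evenly across two experts versus both sent to expert 0.
balanced = load_balancing_loss([[0.9, 0.1], [0.1, 0.9]], num_experts=2)
collapsed = load_balancing_loss([[0.9, 0.1], [0.9, 0.1]], num_experts=2)
print(balanced, collapsed)
```

In training, a term like this is typically scaled by a small coefficient and added to the main loss, so the router is nudged toward even expert usage without overriding the primary objective.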


