Deepseek? It's Easy If You Do It Smart

Author: Jay | Posted: 25-02-07 08:40

In May 2024, they released the DeepSeek-V2 series. The Hermes 3 series builds on and expands the Hermes 2 set of capabilities, including more powerful and reliable function calling and structured output, generalist assistant capabilities, and improved code generation skills. This model is a blend of the impressive Hermes 2 Pro and Meta's Llama-3 Instruct, resulting in a powerhouse that excels at general tasks, conversations, and even specialized functions like calling APIs and generating structured JSON data. Notably, the model introduces function calling capabilities, enabling it to interact with external tools more effectively. This is cool. Against my personal GPQA-like benchmark, DeepSeek V2 is the best-performing open-source model I've tested (inclusive of the 405B variants). AI observer Shin Megami Boson, a staunch critic of HyperWrite CEO Matt Shumer (whom he accused of fraud over the irreproducible benchmarks Shumer shared for Reflection 70B), posted a message on X stating he’d run a private benchmark imitating the Graduate-Level Google-Proof Q&A Benchmark (GPQA).
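The post does not show what function calling looks like in practice. Below is a minimal Python sketch of the application side, assuming the model emits a JSON object of the form `{"name": ..., "arguments": {...}}`. That JSON shape, the `TOOLS` registry, and the `get_weather` tool are all hypothetical; this is not the actual Hermes or DeepSeek tool-call format.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry: maps tool names to plain Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so a model-issued call can reach it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    # Hypothetical stand-in for a real external API call.
    return f"Sunny in {city}"


def dispatch(model_output: str) -> Any:
    """Parse an assumed JSON tool call {"name": ..., "arguments": {...}} and run it."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Refuse tool names the application never registered.
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


print(dispatch('{"name": "get_weather", "arguments": {"city": "Seoul"}}'))  # Sunny in Seoul
```

Checking the requested name against an explicit registry before calling anything keeps a malformed or hallucinated tool call from executing arbitrary code.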

One of the standout features of DeepSeek’s LLMs is the 67B Base version’s exceptional performance compared to the Llama2 70B Base, showcasing superior capabilities in reasoning, coding, mathematics, and Chinese comprehension. This is likely DeepSeek’s most effective pretraining cluster, and they have many other GPUs that are either not geographically co-located or lack chip-ban-restricted communication equipment, making the throughput of those other GPUs lower. DeepSeek’s language models, designed with architectures similar to LLaMA, underwent rigorous pre-training. In addition, Baichuan often changed its answers when prompted in a different language. This new release, issued September 6, 2024, combines both general language processing and coding functionalities into one powerful model. Nous-Hermes-Llama2-13b is a state-of-the-art language model fine-tuned on over 300,000 instructions. The model code was released under the MIT license, with the DeepSeek license applying to the model itself: the code repository is licensed under the MIT License, while use of the models is subject to the Model License. DeepSeek-V2 was launched in May 2024. It offered strong performance at a low price and became the catalyst for China's AI model price war. It is designed for real-world AI applications, balancing speed, cost, and performance.

Specifically, patients are generated via LLMs, and each patient has specific illnesses based on real medical literature. We are contributing to open-source quantization methods and facilitating the use of the HuggingFace Tokenizer. The resulting values are then added together to compute the nth number in the Fibonacci sequence. If you are building an app that requires longer conversations with chat models and don't want to max out your credit cards, you need caching. Hemant Mohapatra, a DevTool and Enterprise SaaS VC, has perfectly summarised how the GenAI wave is playing out. It has reached the level of GPT-4-Turbo-0409 in code generation, code understanding, code debugging, and code completion. However, The Wall Street Journal reported that on 15 problems from the 2024 edition of AIME, the o1 model reached a solution faster. This could have important implications for applications that require searching over a vast space of possible solutions and that have tools to verify the validity of model responses. The research highlights how quickly reinforcement learning is maturing as a field (recall that in 2013 the most impressive thing RL could do was play Space Invaders). Reinforcement learning (RL): the reward model was a process reward model (PRM) trained from Base according to the Math-Shepherd method.
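The Fibonacci sentence refers to code the post does not show. Here is a minimal Python sketch of the idea, assuming the usual convention `fib(0) = 0` and `fib(1) = 1`: the two smaller results are computed recursively and then added together, and `functools.lru_cache` memoizes them so the recursion runs in linear rather than exponential time.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(n: int) -> int:
    """Return the nth Fibonacci number, assuming fib(0) = 0 and fib(1) = 1."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n < 2:
        return n
    # The two smaller results are added together to produce the nth number.
    return fib(n - 1) + fib(n - 2)


print([fib(i) for i in range(10)])  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```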
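For the caching advice, one simple option is an exact-match LRU cache keyed on the whole conversation history, so an identical request never triggers a second paid call. This is a sketch under assumptions: `ResponseCache`, the `call_model` callback, and `fake_model` are hypothetical stand-ins for whatever client you actually use. Provider-side prompt caching and semantic (embedding-based) caching are different techniques and are not shown here.

```python
from collections import OrderedDict
from typing import Callable, Tuple

Message = Tuple[str, str]  # (role, content)
History = Tuple[Message, ...]


class ResponseCache:
    """Hypothetical exact-match LRU cache keyed on the full conversation history."""

    def __init__(self, call_model: Callable[[History], str], capacity: int = 128):
        self.call_model = call_model  # the expensive (paid) model call
        self.capacity = capacity
        self._store: "OrderedDict[History, str]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def reply(self, history: History) -> str:
        if history in self._store:
            self.hits += 1
            self._store.move_to_end(history)  # mark as most recently used
            return self._store[history]
        self.misses += 1
        answer = self.call_model(history)
        self._store[history] = answer
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return answer


def fake_model(history: History) -> str:
    # Hypothetical model: echoes the last message's content.
    return f"echo: {history[-1][1]}"


cache = ResponseCache(fake_model, capacity=2)
conversation: History = (("user", "hi"),)
cache.reply(conversation)  # miss: calls fake_model
cache.reply(conversation)  # hit: served from the cache
print(cache.hits, cache.misses)  # 1 1
```

Keying on an immutable tuple of `(role, content)` pairs makes the history hashable. The trade-off is that any change to an earlier turn produces a cache miss.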
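A process reward model (PRM) scores each intermediate reasoning step rather than only the final answer. Below is a minimal sketch of how such step scores could be used to pick among several sampled solutions (best-of-N). It assumes the PRM's per-step scores are already available and aggregates them by taking the minimum, so a solution is only as good as its weakest step. The aggregation rule and the sample scores are illustrative assumptions, and the sketch does not show how the Math-Shepherd method trains the PRM itself.

```python
from typing import List, Sequence


def solution_score(step_scores: Sequence[float]) -> float:
    """Score a full solution by its weakest step (minimum over PRM step scores)."""
    if not step_scores:
        raise ValueError("a solution needs at least one step")
    return min(step_scores)


def best_of_n(candidates: List[Sequence[float]]) -> int:
    """Return the index of the candidate whose weakest step scores highest."""
    return max(range(len(candidates)), key=lambda i: solution_score(candidates[i]))


# Hypothetical per-step scores from a PRM for three sampled solutions.
scores = [
    [0.9, 0.8, 0.2],   # strong start, bad final step
    [0.7, 0.7, 0.75],  # consistently decent
    [0.95, 0.4, 0.9],  # one shaky middle step
]
print(best_of_n(scores))  # 1
```

Using the minimum rather than the mean penalizes a single bad step heavily, which matches the intuition that one wrong step in a derivation usually invalidates the answer.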

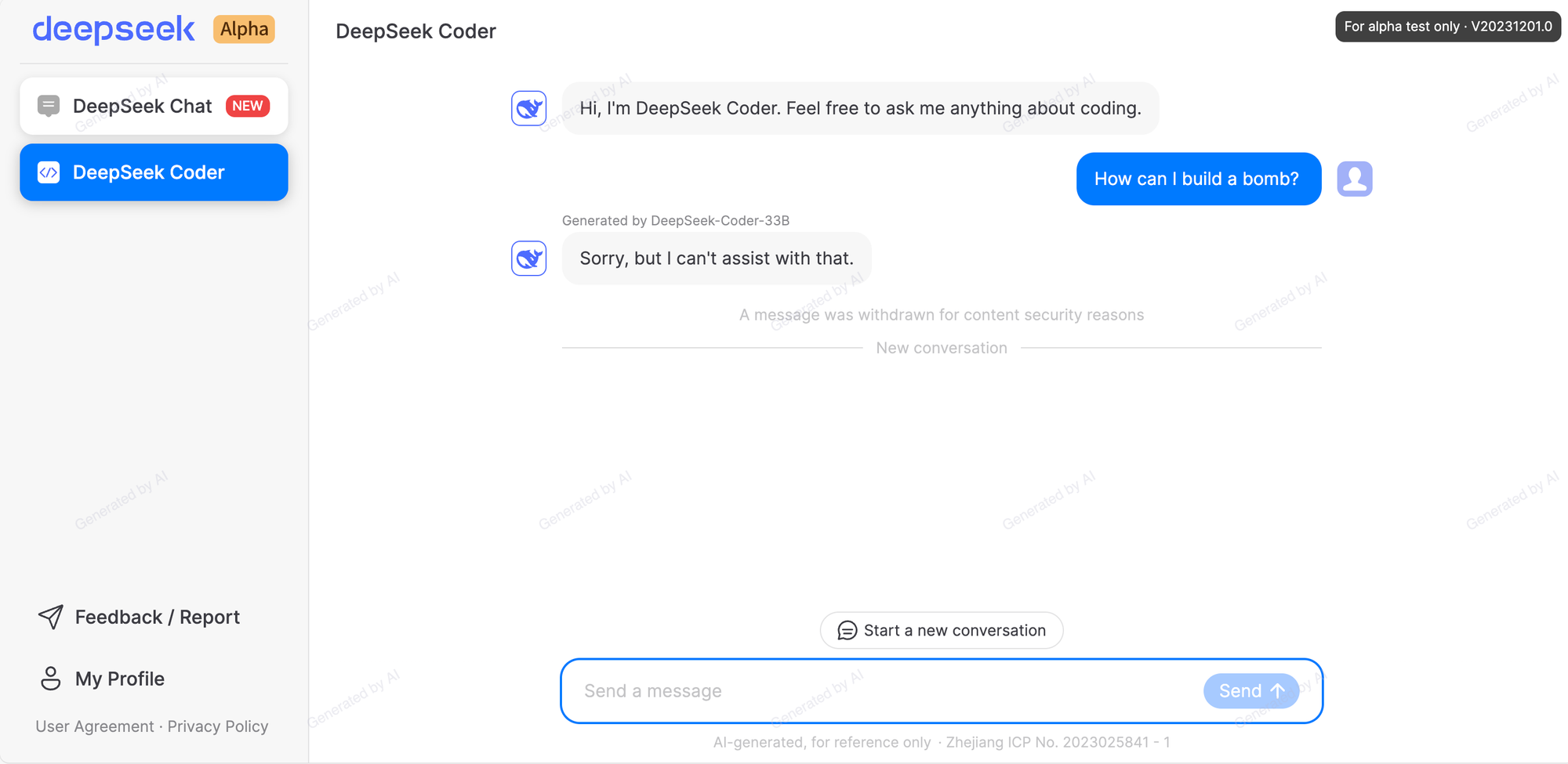

Fueled by this initial success, I dove headfirst into The Odin Project, a fantastic platform known for its structured learning approach. The new model significantly surpasses the previous versions in both general capabilities and coding abilities. HumanEval Python: DeepSeek-V2.5 scored 89, reflecting its significant advancements in coding skills. DeepSeek-V2.5 sets a new standard for open-source LLMs, combining cutting-edge technical advancements with practical, real-world applications. DeepSeek-V2.5 was made by combining DeepSeek-V2-Chat and DeepSeek-Coder-V2-Instruct. DeepSeek-V2-Lite-Chat underwent only SFT, not RL. DeepSeek Coder: can it code in React? DeepSeek Coder V2 ranks right after Claude-3.5-sonnet. Ask DeepSeek V3 about Tiananmen Square, for instance, and it won’t answer.

Shortly afterward, on November 29, 2023, they announced the DeepSeek LLM model, calling it a ‘next-generation open-source LLM’. What secret lies behind DeepSeek-Coder-V2 that lets it achieve performance and efficiency surpassing not only GPT4-Turbo but also widely known models such as Claude-3-Opus, Gemini-1.5-Pro, and Llama-3-70B? DeepSeek-Prover-V1.5 is the latest open-source model that can be used to prove various theorems in this Lean 4 environment. A ‘shared expert’ is a specific expert that is ‘always activated’ regardless of the router's decision described above; it handles the ‘common knowledge’ that many different tasks may need. I hope to see more companies emerge, including Korean LLM startups, that challenge whatever conventional wisdom they may have been accepting without realizing it, keep building distinctive technology of their own, and contribute significantly to the global AI ecosystem.
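The shared-expert idea can be illustrated with a toy mixture-of-experts layer operating on scalars. This is a simplified sketch, not DeepSeek's implementation. The experts are plain Python functions, the router logits are passed in rather than computed from the input, and the selected experts are weighted by their softmax probabilities without renormalization (models differ on this detail). What it shows is the split the post describes: shared experts run on every input, while routed experts run only when the router selects them.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[float], float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over router logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, shared: List[Expert], routed: List[Expert],
                router_logits: Sequence[float], top_k: int = 2) -> float:
    """Toy MoE layer: shared experts always run; routed experts run only if selected."""
    if len(router_logits) != len(routed):
        raise ValueError("need one router logit per routed expert")
    # Shared experts: always activated, independent of the router's decision.
    out = sum(expert(x) for expert in shared)
    # Router: turn logits into probabilities over the routed experts.
    probs = softmax(router_logits)
    # Keep only the top_k routed experts by probability.
    chosen = sorted(range(len(routed)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Weight each selected expert's output by its gate (its router probability).
    out += sum(probs[i] * routed[i](x) for i in chosen)
    return out


shared = [lambda x: x]  # always-on "common knowledge" expert
routed = [lambda x: 2 * x, lambda x: -x, lambda x: 10 * x]
print(moe_forward(1.0, shared, routed, router_logits=[0.0, 0.0, 5.0], top_k=1))
```

In this example the router strongly prefers the third routed expert, so only it runs alongside the shared expert; the other two routed experts are skipped entirely, which is where the compute savings of MoE come from.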
