Which LLM Model is Best For Generating Rust Code


Author: Emelia | Posted: 25-02-07 10:11

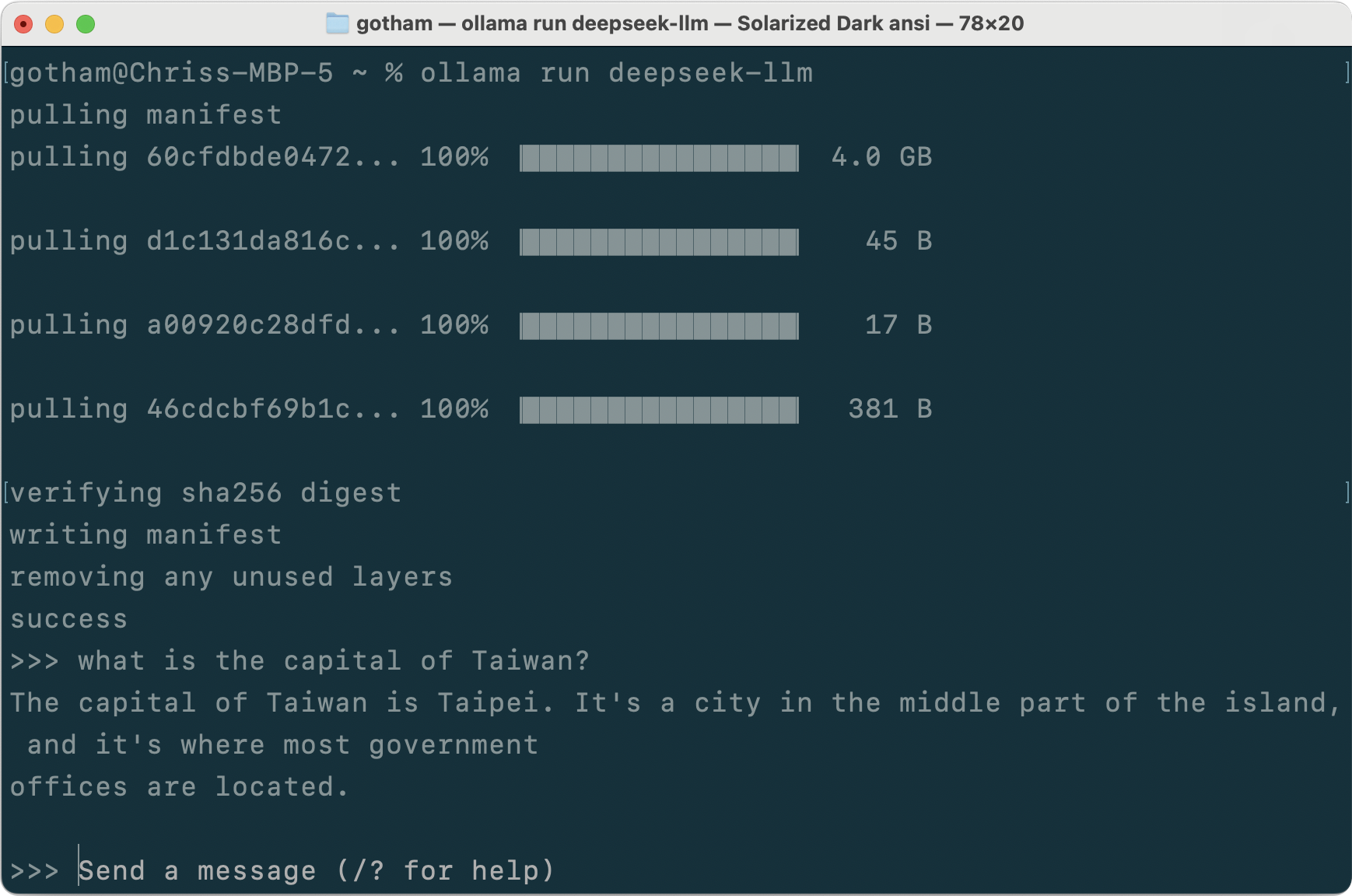

DeepSeek Coder V2: showcased a generic function for calculating factorials with error handling using traits and higher-order functions. The combined effect is that the experts become specialized: suppose two experts are both good at predicting a certain kind of input, but one is slightly better; then the weighting function would eventually learn to favor the better one. The two V2-Lite models were smaller and trained similarly. The best model will vary, but you can check the Hugging Face Big Code Models leaderboard for some guidance. We ran multiple large language models (LLMs) locally in order to figure out which one is the best at Rust programming. And so on. There may actually be no advantage to being early and every advantage to waiting for LLM projects to play out. The research highlights how quickly reinforcement learning is maturing as a field (recall that in 2013 the most impressive thing RL could do was play Space Invaders).
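The post doesn't reproduce the code DeepSeek Coder V2 generated, so the following is only a minimal sketch of what such a function might look like, not the model's actual output. It assumes overflow is the error case worth handling; `FactorialInt`, `FactorialError`, and `factorial_table` are hypothetical names introduced for illustration. The trait abstracts over unsigned integer widths, and the higher-order iterator method `try_fold` combined with a checked multiply turns overflow into an `Err` instead of a panic.

```rust
use std::error::Error;
use std::fmt;

/// Hypothetical error type: n! does not fit in the chosen integer type.
#[derive(Debug, PartialEq)]
pub enum FactorialError {
    Overflow { n: u32 },
}

impl fmt::Display for FactorialError {
    fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {
        match *self {
            FactorialError::Overflow { n } => write!(f, "{}! overflows the target integer type", n),
        }
    }
}

impl Error for FactorialError {}

/// Hypothetical trait: the integer operations the generic factorial needs.
pub trait FactorialInt: Copy {
    /// Converts a loop index into this type.
    fn from_u32(v: u32) -> Self;
    /// Multiplication that reports overflow as `None` instead of panicking.
    fn mul_checked(self, rhs: Self) -> Option<Self>;
}

impl FactorialInt for u32 {
    fn from_u32(v: u32) -> Self { v }
    fn mul_checked(self, rhs: Self) -> Option<Self> { self.checked_mul(rhs) }
}

impl FactorialInt for u64 {
    fn from_u32(v: u32) -> Self { u64::from(v) }
    fn mul_checked(self, rhs: Self) -> Option<Self> { self.checked_mul(rhs) }
}

impl FactorialInt for u128 {
    fn from_u32(v: u32) -> Self { u128::from(v) }
    fn mul_checked(self, rhs: Self) -> Option<Self> { self.checked_mul(rhs) }
}

/// Computes n! by folding 1..=n with a checked multiply; the fold stops at the
/// first overflow and the `None` is converted into a `FactorialError`.
pub fn factorial<T: FactorialInt>(n: u32) -> Result<T, FactorialError> {
    (1..=n)
        .map(T::from_u32)
        .try_fold(T::from_u32(1), T::mul_checked)
        .ok_or(FactorialError::Overflow { n })
}

/// Higher-order use: maps `factorial` over 0..=limit.
pub fn factorial_table<T: FactorialInt>(limit: u32) -> Vec<Result<T, FactorialError>> {
    (0..=limit).map(factorial::<T>).collect()
}

fn main() {
    for n in [5u32, 20, 21].iter() {
        match factorial::<u64>(*n) {
            Ok(v) => println!("{}! = {}", n, v),
            Err(e) => println!("error: {}", e),
        }
    }
}
```

Because the fold short-circuits on the first `None`, `factorial::<u64>(21)` returns an error rather than silently wrapping around, and the same function works unchanged for `u32` or `u128`.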


On January 31, US space agency NASA blocked DeepSeek from its systems and its employees' devices. Summary: the paper proposes a method that uses lattice output from ASR systems to improve SLU tasks by incorporating word confusion networks, enhancing the LLM's resilience to noisy speech transcripts and its robustness to varying ASR performance conditions. They claimed that a 16B MoE model achieves performance comparable to a 7B non-MoE model. OpenAI claimed that these new AI models had been trained using outputs from the big AI companies' models, which is against OpenAI's terms of service. Cursor and Aider have both integrated Sonnet and reported state-of-the-art (SOTA) capabilities. Aider lets you pair program with LLMs to edit code in your local git repository: start a new project or work with an existing git repo. It's time to live a little and try some of the big-boy LLMs. If you had AIs that behaved exactly like humans do, you'd immediately notice they were implicitly colluding all the time. It still fails on tasks like counting the 'r's in "strawberry". This development also touches on broader implications for energy consumption in AI, as less powerful, but still effective, chips could lead to more sustainable practices in tech.
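For contrast, the letter-counting task is trivial for ordinary code, which works on individual characters, whereas an LLM typically sees subword tokens rather than letters. A minimal sketch (`count_char` is a hypothetical helper name):

```rust
/// Counts how many times `needle` occurs in `haystack`, one character at a time.
fn count_char(haystack: &str, needle: char) -> usize {
    haystack.chars().filter(|&c| c == needle).count()
}

fn main() {
    let n = count_char("strawberry", 'r');
    println!("\"strawberry\" contains {} 'r' characters", n);
}
```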

In our view, using AI assistance for anything except intelligent autocomplete is still an egregious risk. I'm using it as my default LM going forward (for tasks that don't involve sensitive data). Please note that there may be slight discrepancies when using the converted Hugging Face models. But this development may not necessarily be bad news for the likes of Nvidia in the long run: as the financial and time cost of developing AI products falls, companies and governments will be able to adopt this technology more easily. It looks like we might see a reshaping of AI tech in the coming year. 14k requests per day is a lot, and 12k tokens per minute is considerably more than the average person can use on an interface like Open WebUI. My previous article went over how to get Open WebUI set up with Ollama and Llama 3, but this isn't the only way I use Open WebUI.
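To put those limits in perspective, here is a back-of-the-envelope calculation. It treats the quoted 14k requests/day and 12k tokens/minute as fixed caps and assumes usage spread evenly over 24 hours; the helper names are hypothetical.

```rust
/// Minutes in a day, used to convert between per-day and per-minute limits.
const MINUTES_PER_DAY: u64 = 24 * 60;

/// Average request rate if a daily request quota is spread evenly over the day.
fn requests_per_minute(requests_per_day: u64) -> f64 {
    requests_per_day as f64 / MINUTES_PER_DAY as f64
}

/// Upper bound on daily tokens if the per-minute token cap is reached every minute.
fn tokens_per_day(tokens_per_minute: u64) -> u64 {
    tokens_per_minute * MINUTES_PER_DAY
}

fn main() {
    // Limits as quoted in the post.
    let rpd: u64 = 14_000;
    let tpm: u64 = 12_000;
    let tpd = tokens_per_day(tpm);
    println!("~{:.1} requests/minute", requests_per_minute(rpd));
    println!("up to {} tokens/day", tpd);
    println!("~{:.0} tokens per request if every request is used", tpd as f64 / rpd as f64);
}
```

That works out to roughly 9.7 requests per minute and a ceiling of about 17.3 million tokens per day, or around 1,234 tokens per request if every request is used.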

I'll go over each of them with you and give you the pros and cons of each, then I'll show you how I set up all three of them in my Open WebUI instance! This approach stemmed from our study on compute-optimal inference, demonstrating that weighted majority voting with a reward model consistently outperforms naive majority voting given the same inference budget. Check the thread below for more discussion on this. You can check it here. Try CoT here: "think step-by-step", or give more detailed prompts. "the model is prompted to alternately describe a solution step in natural language and then execute that step with code". DeepSeek's NLP capabilities enable machines to understand, interpret, and generate human language. We pre-train DeepSeek-V3 on 14.8 trillion diverse and high-quality tokens, followed by Supervised Fine-Tuning and Reinforcement Learning stages to fully harness its capabilities. The system is shown to outperform conventional theorem-proving approaches, highlighting the potential of this combined reinforcement learning and Monte-Carlo Tree Search approach for advancing the field of automated theorem proving.
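The difference between the two voting schemes can be sketched as follows. This is not the cited study's code: the reward scores are made-up placeholders standing in for a reward model's output, and `Sample`, `majority_vote`, and `weighted_vote` are hypothetical names. Naive majority voting counts how often each final answer appears; weighted majority voting instead sums the reward-model scores for each answer and picks the highest total.

```rust
use std::collections::BTreeMap;

/// One sampled solution: its final answer plus a (placeholder) reward-model score.
struct Sample<'a> {
    answer: &'a str,
    reward: f64,
}

/// Naive majority voting: the answer that appears most often wins.
fn majority_vote<'a>(samples: &[Sample<'a>]) -> Option<&'a str> {
    let mut counts: BTreeMap<&'a str, usize> = BTreeMap::new();
    for s in samples {
        *counts.entry(s.answer).or_insert(0) += 1;
    }
    counts.into_iter().max_by_key(|&(_, c)| c).map(|(a, _)| a)
}

/// Weighted majority voting: sum reward scores per answer, highest total wins.
fn weighted_vote<'a>(samples: &[Sample<'a>]) -> Option<&'a str> {
    let mut totals: BTreeMap<&'a str, f64> = BTreeMap::new();
    for s in samples {
        *totals.entry(s.answer).or_insert(0.0) += s.reward;
    }
    totals
        .into_iter()
        .max_by(|a, b| a.1.partial_cmp(&b.1).expect("rewards must not be NaN"))
        .map(|(answer, _)| answer)
}

fn main() {
    // Placeholder samples: answer text and a made-up reward-model score.
    let samples = [
        Sample { answer: "42", reward: 0.1 },
        Sample { answer: "42", reward: 0.2 },
        Sample { answer: "42", reward: 0.1 },
        Sample { answer: "41", reward: 0.9 },
        Sample { answer: "41", reward: 0.8 },
    ];
    println!("majority vote: {:?}", majority_vote(&samples));
    println!("weighted vote: {:?}", weighted_vote(&samples));
}
```

With these placeholder scores, three low-scoring samples agree on "42" while two high-scoring samples agree on "41", so the two schemes pick different answers. Ties are broken by `BTreeMap` key order, which keeps the result deterministic.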


For more information about DeepSeek chat, have a look at the website.
