Why DeepSeek Could Be Good News for Energy Consumption

Posted by Juanita, 25-02-07 10:31

A striking example: DeepSeek-R1 thinks for around 75 seconds and successfully solves the cipher-text problem from OpenAI's o1 blog post. New models like DeepSeek's R1 and OpenAI's o1 reveal another crucial role for compute: these "reasoning" models get predictably better the more time they spend thinking. You have to understand that Tesla is in a better position than Chinese firms to take advantage of new techniques like those used by DeepSeek. Artificial intelligence has entered a new era of innovation, with models like DeepSeek-R1 setting benchmarks for performance, accessibility, and cost-effectiveness. Performance on par with OpenAI o1: DeepSeek-R1 matches or exceeds OpenAI's proprietary models on tasks like math, coding, and logical reasoning. This could have significant implications for mathematics, computer science, and beyond, by helping researchers and problem-solvers find solutions to difficult problems more efficiently. Logical problem-solving: the model demonstrates an ability to break problems down into smaller steps using chain-of-thought reasoning. Many users appreciate the model's ability to maintain context over longer conversations or code-generation tasks, which is essential for complex programming challenges. 2024 has proven to be a solid year for AI code generation, and the model excels at producing code snippets from user prompts, demonstrating its effectiveness in programming tasks.
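
The cipher in that blog post follows a simple rule once it is spotted: in OpenAI's worked example, `oyfjdnisdr rtqwainr acxz mynzbhhx` decodes to "Think step by step", because each pair of ciphertext letters averages (by alphabet position, a=1 to z=26) to one plaintext letter. The function below is our own minimal sketch of that rule, not code from OpenAI or DeepSeek; the name `decode_pair_average` is hypothetical.

```python
def decode_pair_average(ciphertext: str) -> str:
    """Decode the letter-pair cipher from OpenAI's o1 blog post.

    Each plaintext letter is written as two ciphertext letters whose
    alphabet positions (a=1 ... z=26) average to the plaintext position.
    """
    def pos(ch: str) -> int:
        return ord(ch) - ord("a") + 1

    decoded_words = []
    for word in ciphertext.lower().split():
        if len(word) % 2:
            raise ValueError(f"odd-length word cannot be paired: {word!r}")
        letters = []
        # Walk the word two letters at a time: (w[0], w[1]), (w[2], w[3]), ...
        for a, b in zip(word[0::2], word[1::2]):
            total = pos(a) + pos(b)
            if total % 2:
                raise ValueError(f"pair {a + b!r} has no whole-number average")
            letters.append(chr(ord("a") + total // 2 - 1))
        decoded_words.append("".join(letters))
    return " ".join(decoded_words)
```

Applied to the puzzle's actual question, `oyekaijzdf aaptcg suaokybhai ouow aqht mynznvaatzacdfoulxxz`, it returns `there are three rs in strawberry`. The hard part for a model is discovering the averaging rule, not applying it.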

We've seen improvements in overall user satisfaction with Claude 3.5 Sonnet across these users, so in this month's Sourcegraph release we're making it the default model for chat and prompts. In this blog, we discuss DeepSeek 2.5 and its features, the company behind it, and how it compares with GPT-4o and Claude 3.5 Sonnet. When DeepSeek 2.5 is compared with models such as GPT-4o and Claude 3.5 Sonnet, it becomes clear that neither GPT nor Claude comes anywhere close to DeepSeek's cost-effectiveness. Feedback from users on platforms like Reddit highlights the strengths of DeepSeek 2.5 compared to other models. The table below highlights its performance benchmarks. One strand of this argument highlights the need for grounded, goal-oriented, and interactive language learning. DeepSeek-R1 employs large-scale reinforcement learning during post-training to refine its reasoning capabilities. This new version enhances both general language capabilities and coding functionality, making it well suited to a wide range of applications. DeepSeek claims to be just as powerful as, if not more powerful than, other language models while using fewer resources. StepFun's largest language model so far, Step-2, has over 1 trillion parameters (GPT-4 reportedly has about 1.8 trillion).
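
DeepSeek's R1 report describes this reinforcement-learning stage as using Group Relative Policy Optimization (GRPO), which samples a group of answers for each prompt and scores every answer relative to the rest of its group instead of training a separate value model. The sketch below covers only that advantage step, under our own assumptions: rewards are plain numbers (say, 1 for a correct final answer and 0 otherwise), the group's population standard deviation is used, and a small `eps` avoids division by zero. It is not the full clipped objective with its KL penalty, and `group_relative_advantages` is a hypothetical name.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Score each sampled answer against the other answers to the same prompt.

    Answers better than the group average get a positive advantage,
    worse ones a negative advantage; no learned value model is needed.
    """
    if not rewards:
        raise ValueError("need at least one reward in the group")
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std of the group (an assumption)
    return [(r - mu) / (sigma + eps) for r in rewards]
```

For a group of four answers where two are correct, `group_relative_advantages([1.0, 0.0, 0.0, 1.0])` gives roughly `[1, -1, -1, 1]`; if every answer earns the same reward, all advantages are zero and the group contributes no learning signal.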

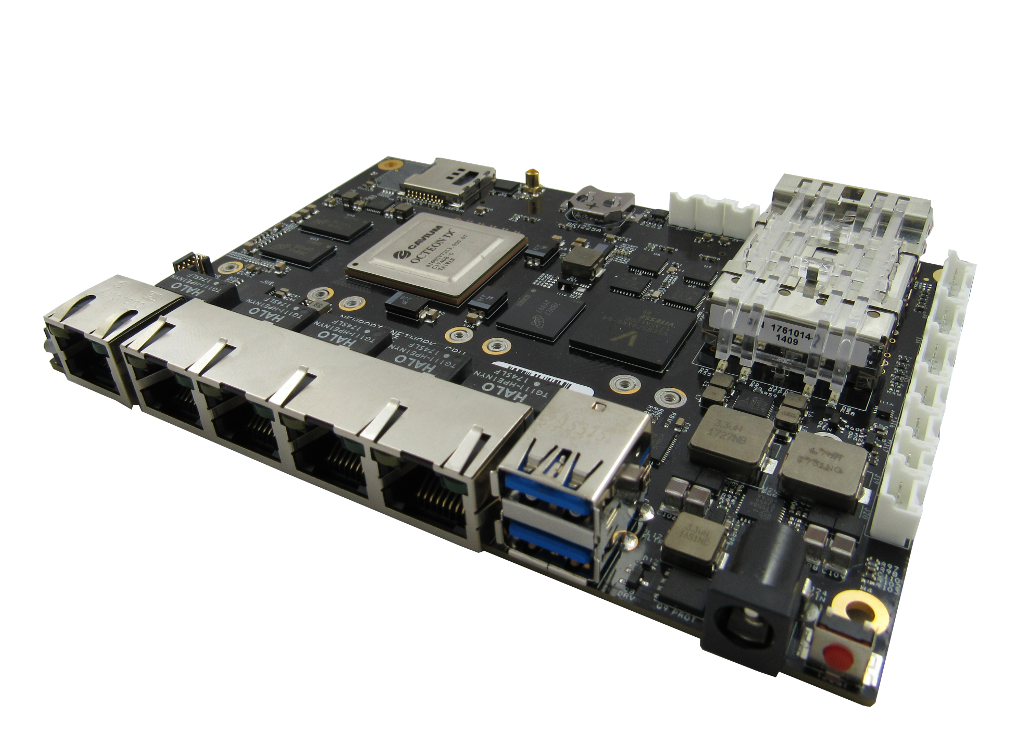

The Mixture-of-Experts (MoE) architecture lets the model activate only a subset of its parameters for each token it processes, which is how a model built on such a large architecture still achieves exceptional efficiency. Minimal labeled data required: the model achieves significant performance gains even with limited supervised fine-tuning. Coding challenges: it achieves a Codeforces rating comparable to OpenAI o1's, making it well suited to programming-related tasks.

R1's base model, V3, reportedly required 2.788 million GPU hours to train (running across many graphics processing units, or GPUs, at the same time), at an estimated cost of under $6m (£4.8m), compared to the more than $100m (£80m) that OpenAI boss Sam Altman says was required to train GPT-4.

DeepSeek-R1 uses a caching system that stores frequently used prompts and responses for several hours or days. By using context caching for repeated prompts, the API offers cost-efficient rates and significantly reduces expenses for repetitive queries.

Powerformer is a novel transformer architecture that learns robust power-system state representations by using a section-adaptive attention mechanism and customized strategies, achieving better power dispatch for different transmission sections. The current lead gives the United States power and leverage, as it has better products to sell than its rivals.
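
The per-token routing behind MoE can be illustrated with a minimal sketch. It assumes a plain softmax top-k router over toy experts (simple Python functions on lists of floats); DeepSeek's own models add refinements, such as shared experts, that this omits, and `moe_forward` and the gate vectors are hypothetical names for illustration only.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    gate_weights: List[Vector],
    experts: List[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and mix their outputs.

    Returns the combined output and the indices of the experts that ran.
    """
    # Router: one score per expert (dot product of token and gate vector).
    logits = [sum(t * w for t, w in zip(token, gw)) for gw in gate_weights]
    probs = softmax(logits)
    # Keep the k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(token)
    for i in chosen:
        y = experts[i](token)
        weight = probs[i] / norm  # renormalize over the chosen experts only
        out = [o + weight * v for o, v in zip(out, y)]
    return out, chosen
```

The efficiency claim comes from the `chosen` list: with, say, 2 of 64 experts selected, only those two experts' parameters do any work for that token, even though all 64 exist in the model.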

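The "under $6m" figure can be reconstructed from the GPU-hour count. Assuming a rental price of $2 per H800 GPU hour, which is the accounting assumption DeepSeek's own V3 technical report uses rather than a measured bill:

$$
2.788 \times 10^{6}\ \text{GPU-hours} \times \frac{\$2}{\text{GPU-hour}} = \$5.576 \times 10^{6} \approx \$5.6\text{m}
$$

This covers only the final training run's compute; the report itself notes that earlier research and ablation experiments are excluded, so it is not directly comparable to an all-in figure for GPT-4.
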
At a minimum, let's not fire the starting gun on a race that we might well not win, even if all of humanity weren't very likely to lose it, over a "missile gap"-style lie that we are somehow not currently in the lead.

Here are some examples of how to use our model. The simplest way is to use a package manager like conda or uv to create a new virtual environment and install the dependencies. The DeepSeek-R1 API is designed for ease of use while offering robust customization options for developers. The company aims to create efficient AI assistants that can be integrated into various applications through simple API calls and a user-friendly chat interface. Below is a step-by-step guide on how to integrate and use the API effectively. Flexbox was so simple to use.

If you need BF16 weights for experimentation, you can use the provided conversion script to perform the transformation. SGLang fully supports the DeepSeek-V3 model in both BF16 and FP8 inference modes, with Multi-Token Prediction coming soon.
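
A minimal sketch of a single API call using only the standard library. It assumes DeepSeek's OpenAI-compatible chat-completions endpoint at `https://api.deepseek.com/chat/completions` and the model name `deepseek-reasoner` for R1, as given in DeepSeek's API documentation at the time of writing; check the current docs before relying on either. `build_chat_request` and `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed OpenAI-compatible endpoint; verify against DeepSeek's current docs.
API_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "deepseek-reasoner") -> urllib.request.Request:
    """Build (but do not send) a POST request for one user message."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text.

    Assumes the response follows OpenAI's chat-completions shape.
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

Usage, with the key read from an environment variable of your choosing (`DEEPSEEK_API_KEY` here is our own convention): `send_chat_request(build_chat_request("Explain mixture-of-experts in two sentences.", os.environ["DEEPSEEK_API_KEY"]))`. Keeping request construction separate from sending makes the payload easy to inspect and test without a network call.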

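The conversion script itself is not reproduced here. As a sketch of the idea only: the DeepSeek-V3 technical report describes quantizing weights in 128x128 tiles, each with its own scaling factor, so recovering higher-precision values amounts to multiplying every element by its tile's scale before casting to BF16. The function below assumes that layout and uses plain Python floats in place of FP8 codes; `dequantize_blockwise` is a hypothetical name, and the final BF16 cast is left out because standard Python has no BF16 type.

```python
from typing import List

Matrix = List[List[float]]


def dequantize_blockwise(q: Matrix, scales: Matrix, block: int = 128) -> Matrix:
    """Rescale a block-quantized weight matrix back to real values.

    q      -- quantized values (plain floats here, standing in for FP8 codes)
    scales -- one scale per block x block tile, indexed [row_tile][col_tile]
    """
    rows = len(q)
    cols = len(q[0]) if rows else 0
    n_row_tiles = -(-rows // block)  # ceiling division
    n_col_tiles = -(-cols // block)
    if len(scales) != n_row_tiles or any(len(r) != n_col_tiles for r in scales):
        raise ValueError("scale grid does not match the number of tiles")
    # Element (i, j) belongs to tile (i // block, j // block).
    return [
        [q[i][j] * scales[i // block][j // block] for j in range(cols)]
        for i in range(rows)
    ]
```

With `block=2`, a 3x3 matrix needs a 2x2 grid of scales, since the last row and column form partial tiles that still get their own scale.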