Do DeepSeek Better Than Barack Obama

Author: Jasmin · Date: 25-02-07 11:00

Pearl said. DeepSeek is subject to PRC laws, and anything entered into the app is fair game. Common practice in language modeling labs is to use scaling laws to de-risk ideas for pretraining, so that you spend very little time training at the largest sizes that do not result in working models. Their training algorithm and strategy may help mitigate the cost. Personal Assistant: Future LLMs might be able to manage your schedule, remind you of important events, and even help you make decisions by providing useful information. The United States thought it could sanction its way to dominance in a key technology it believes will help bolster its national security. Going forward, AI’s biggest proponents believe artificial intelligence (and eventually AGI and superintelligence) will change the world, paving the way for profound advancements in healthcare, education, scientific discovery and much more. R1 is also a much more compact model, requiring less computational power, yet it is trained in a way that allows it to match or even exceed the performance of much larger models. Producing methodical, cutting-edge analysis like this takes a ton of work - purchasing a subscription would go a long way toward a deep, meaningful understanding of AI developments in China as they happen in real time.

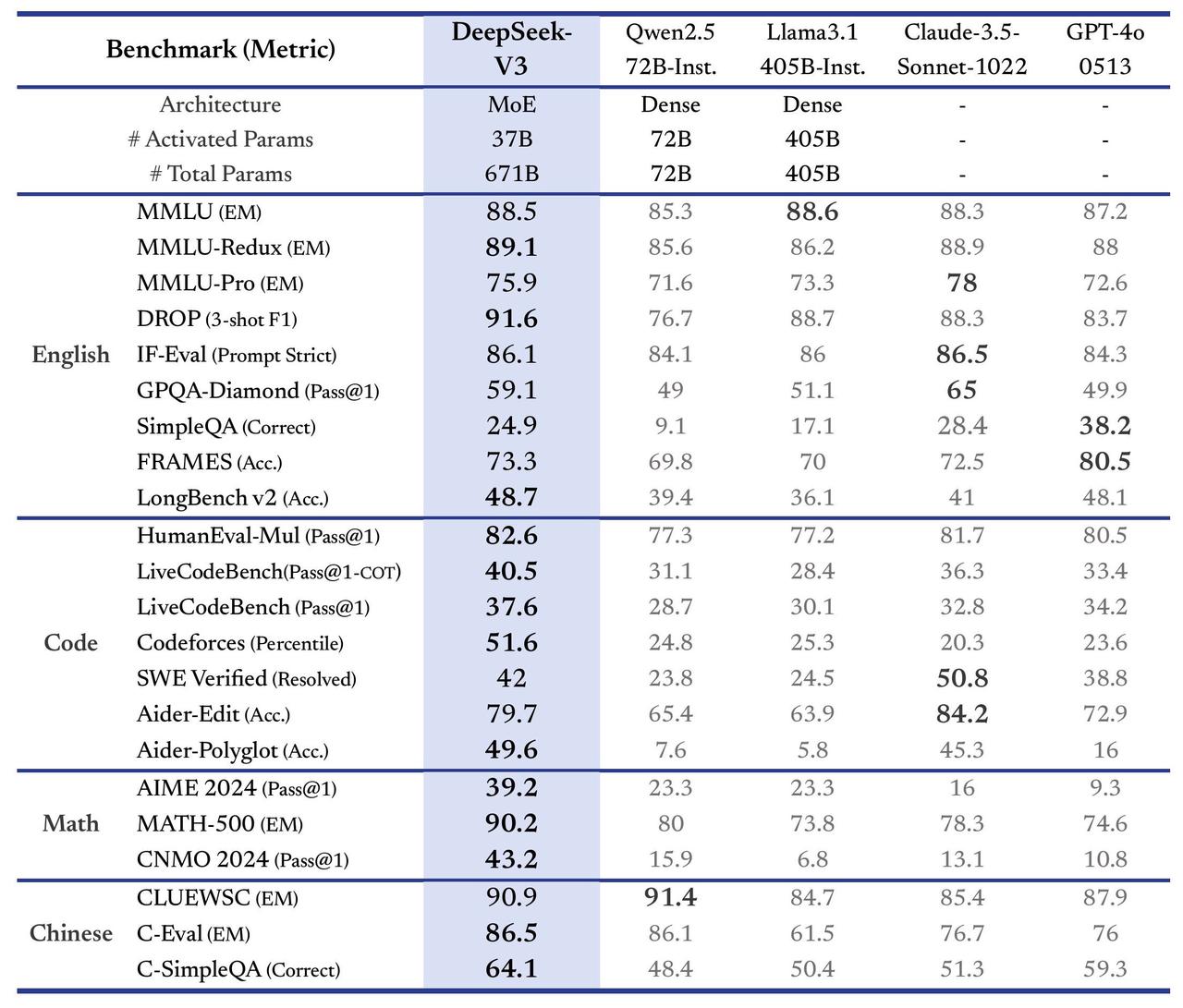

However, this will depend on your use case, as they may be able to work well for specific classification tasks. It performed especially well in coding and math, beating out its rivals on almost every test. DeepSeek-V3 is designed for developers and researchers looking to implement advanced natural language processing capabilities in applications such as chatbots, educational tools, content generation, and coding assistance. The model uses a transformer architecture, which is a type of neural network particularly well-suited to natural language processing tasks. We present two variants of EC Fine-Tuning (Steinert-Threlkeld et al., 2022), one of which outperforms a backtranslation-only baseline in all four languages investigated, including the low-resource language Nepali. Compressor summary: The paper presents RAISE, a new architecture that integrates large language models into conversational agents using a dual-component memory system, improving their controllability and adaptability in complex dialogues, as shown by its performance in a real estate sales context.

SGLang currently supports MLA optimizations, DP Attention, FP8 (W8A8), FP8 KV Cache, and Torch Compile, delivering state-of-the-art latency and throughput performance among open-source frameworks. Support for FP8 is currently in progress and will be released soon. However, to make faster progress for this version, we opted to use standard tooling (Maven and OpenClover for Java, gotestsum for Go, and Symflower for consistent tooling and output), which we can then swap for better solutions in the coming versions. Reducing the full list of over 180 LLMs to a manageable size was done by sorting based on scores and then costs. Nevertheless, if R1 has managed to do what DeepSeek says it has, then it will have a massive impact on the broader artificial intelligence industry - especially in the United States, where AI investment is highest. While such improvements are expected in AI, this could mean DeepSeek is leading on reasoning efficiency, although comparisons remain difficult because companies like Google have not released pricing for their reasoning models. While the U.S. government has tried to regulate the AI industry as a whole, it has little to no oversight over what specific AI models actually generate.
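As a rough illustration of the shortlisting step mentioned above (sorting by score, then by cost), here is a minimal Python sketch. The model names, scores, prices, and the `shortlist` helper are all hypothetical placeholders, not data or code from the evaluation being described; the sketch also assumes that a higher score is better and that cost only breaks ties.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelResult:
    name: str             # hypothetical model identifier
    score: float          # benchmark score, assumed higher is better
    cost_per_mtok: float  # hypothetical price per million tokens, lower is better


def shortlist(results, keep):
    """Return the top `keep` models, ranked by score (descending), then cost (ascending)."""
    ranked = sorted(results, key=lambda r: (-r.score, r.cost_per_mtok))
    return ranked[:keep]


# Made-up example data, for illustration only.
results = [
    ModelResult("model-a", 91.0, 15.0),
    ModelResult("model-b", 91.0, 2.5),
    ModelResult("model-c", 78.5, 0.4),
    ModelResult("model-d", 64.0, 0.1),
]

top = shortlist(results, keep=2)
print([r.name for r in top])
```

With this ordering, two models that tie on score are separated by price, so the cheaper one ranks first.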

McNeal, who has studied the details of Chinese government data sharing requirements for domestic companies. Who is behind DeepSeek? In February 2016, High-Flyer was co-founded by AI enthusiast Liang Wenfeng, who had been trading since the 2007-2008 financial crisis while attending Zhejiang University. While powerful, it struggled with issues like repetition and readability. That being said, DeepSeek’s unique issues around privacy and censorship may make it a less appealing option than ChatGPT. All AI models pose a privacy risk, with the potential to leak or misuse users’ personal information, but DeepSeek-R1 poses an even greater risk. The organization encourages responsible usage to prevent misuse or harmful applications of generated content. The law dictates that generative AI services must "uphold core socialist values" and prohibits content that "subverts state authority" and "threatens or compromises national security and interests"; it also compels AI developers to undergo security evaluations and register their algorithms with the CAC before public release. However, its source code and any specifics about its underlying data are not available to the public. Yes, DeepSeek is open source in that its model weights and training methods are freely available for the public to examine, use and build upon. These features are increasingly important in the context of training large frontier AI models.
