Why You Really Need DeepSeek

Author: Lilla | Date: 25-02-07 11:58

I got an introduction to speak directly with a staff member from DeepSeek and got the inside story. Now you have it from the best people, too. AI chatbots take a considerable amount of energy and resources to run, though some people may not understand precisely how. DeepSeek's Mixture-of-Experts (MoE) design lets it give answers while activating far less of its "brainpower" per query, thus saving on compute and power costs. For DeepSeek-V3, the communication overhead introduced by cross-node expert parallelism results in an inefficient computation-to-communication ratio of roughly 1:1. To tackle this challenge, we design an innovative pipeline parallelism algorithm called DualPipe, which not only accelerates model training by effectively overlapping forward and backward computation-communication phases, but also reduces the pipeline bubbles. More importantly, it overlaps the computation and communication phases across forward and backward processes, thereby addressing the challenge of heavy communication overhead introduced by cross-node expert parallelism. This overlap also ensures that, as the model further scales up, so long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead. In order to ensure sufficient computational performance for DualPipe, we customize efficient cross-node all-to-all communication kernels (including dispatching and combining) to conserve the number of SMs dedicated to communication.
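Why overlap matters at a roughly 1:1 computation-to-communication ratio can be seen with a deliberately simplified timing model. This is only a sketch: the function name `step_time` and the single `overlap_fraction` knob are assumptions made for illustration, and real pipeline schedules are far more intricate.

```python
def step_time(compute: float, comm: float, overlap_fraction: float) -> float:
    """Time for one step when part of the shorter phase hides behind the longer one.

    overlap_fraction = 0.0 means compute and communication run back to back;
    overlap_fraction = 1.0 means the shorter phase is fully hidden.
    """
    if not 0.0 <= overlap_fraction <= 1.0:
        raise ValueError("overlap_fraction must be between 0 and 1")
    hidden = min(compute, comm) * overlap_fraction
    return compute + comm - hidden


# With a 1:1 ratio, serial execution costs 2 time units per step,
# while full overlap brings the step back down to 1 unit.
serial = step_time(1.0, 1.0, overlap_fraction=0.0)
overlapped = step_time(1.0, 1.0, overlap_fraction=1.0)
print(serial, overlapped)
```

In this toy model, full overlap reduces the step to the longer of the two phases, which is why hiding communication behind computation is so valuable when the two are about equal.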

In addition, for DualPipe, neither the bubbles nor the activation memory will increase as the number of micro-batches grows. In Table 2, we summarize the pipeline bubbles and memory usage across different PP methods. Compared with existing PP methods, DualPipe has fewer pipeline bubbles. Compared with Chimera (Li and Hoefler, 2021), DualPipe only requires that the pipeline stages and micro-batches be divisible by 2, without requiring micro-batches to be divisible by pipeline stages. Compared with DeepSeek-V2, an exception is that we additionally introduce an auxiliary-loss-free load balancing strategy (Wang et al., 2024a) for DeepSeekMoE to mitigate the performance degradation induced by the effort to ensure load balance. However, too large an auxiliary loss will impair the model performance (Wang et al., 2024a). To achieve a better trade-off between load balance and model performance, we pioneer an auxiliary-loss-free load balancing strategy (Wang et al., 2024a) to ensure load balance.
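To make the idea of balancing load without an auxiliary loss more concrete, here is a minimal sketch of one bias-based approach. The mechanism shown (a per-expert bias that only affects which experts are selected, nudged up or down by a fixed step `gamma` after each batch) is an assumption for illustration, since the text above does not spell it out. The skewed score distribution and all names are hypothetical as well.

```python
import random


def route_top_k(scores, bias, k):
    """Pick k experts by biased score; gate weights use the unbiased scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    chosen = ranked[:k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}


def update_bias(bias, load, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    return [b - gamma if n > mean_load else b + gamma if n < mean_load else b
            for b, n in zip(bias, load)]


def simulate(num_experts=8, k=2, tokens_per_step=512, steps=200, gamma=0.01, seed=0):
    rng = random.Random(seed)
    # Hypothetical skew: lower-indexed experts tend to receive higher scores.
    skew = [1.0 - 0.08 * i for i in range(num_experts)]
    bias = [0.0] * num_experts
    history = []
    for _ in range(steps):
        load = [0] * num_experts
        for _ in range(tokens_per_step):
            scores = [0.01 + rng.random() * s for s in skew]
            for expert in route_top_k(scores, bias, k):
                load[expert] += 1
        history.append(load)
        bias = update_bias(bias, load, gamma)
    return history, bias


history, bias = simulate()
first_spread = max(history[0]) - min(history[0])
last_spread = max(history[-1]) - min(history[-1])
print("load spread at first step:", first_spread)
print("load spread at last step:", last_spread)
```

Because the bias changes only the selection and not the gate weights, no extra loss term competes with the training objective in this sketch. That is the trade-off the paragraph above describes.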

For each token, when its routing decision is made, it will first be transmitted via InfiniBand (IB) to the GPUs with the same in-node index on its target nodes. 2. Apply the same GRPO RL process as R1-Zero, adding a "language consistency reward" to encourage it to respond monolingually. Unlike conventional language models, its MoE-based architecture activates only the required "experts" per task. Exploring AI Models: I explored Cloudflare's AI models to find one that could generate natural language instructions based on a given schema. Given the efficient overlapping strategy, the full DualPipe scheduling is illustrated in Figure 5. It employs a bidirectional pipeline scheduling, which feeds micro-batches from both ends of the pipeline simultaneously, and a significant portion of communications can be fully overlapped. In addition, even in more general scenarios without a heavy communication burden, DualPipe still exhibits efficiency advantages. Although DualPipe requires keeping two copies of the model parameters, this does not significantly increase the memory consumption since we use a large EP size during training.
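The dispatch rule in the first sentence above can be sketched as a small path calculation. Several things here are assumptions for illustration: 8 GPUs per node, global GPU ranks laid out as `node * gpus_per_node + local_index`, and a final intra-node NVLink hop from the relay GPU to the destination, which the text above does not describe. The names `Hop` and `dispatch_path` are hypothetical.

```python
from typing import List, NamedTuple


class Hop(NamedTuple):
    link: str  # "IB" for cross-node, "NVLink" for intra-node (assumed)
    src: int   # global GPU rank sending the token
    dst: int   # global GPU rank receiving the token


def dispatch_path(src_gpu: int, dst_gpu: int, gpus_per_node: int = 8) -> List[Hop]:
    """Return the hops a token takes from src_gpu to the GPU hosting its expert."""
    src_node, src_local = divmod(src_gpu, gpus_per_node)
    dst_node, _ = divmod(dst_gpu, gpus_per_node)

    if src_node == dst_node:
        # Same node: no IB transfer is needed at all.
        return [] if src_gpu == dst_gpu else [Hop("NVLink", src_gpu, dst_gpu)]

    # Cross-node: land on the GPU with the same in-node index on the target node.
    relay = dst_node * gpus_per_node + src_local
    hops = [Hop("IB", src_gpu, relay)]
    if relay != dst_gpu:
        # Assumed second leg: forward inside the target node.
        hops.append(Hop("NVLink", relay, dst_gpu))
    return hops


for hop in dispatch_path(src_gpu=3, dst_gpu=21):
    print(hop)
```

Sending to the same in-node index first means each token crosses the slower inter-node link at most once per target node in this sketch.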

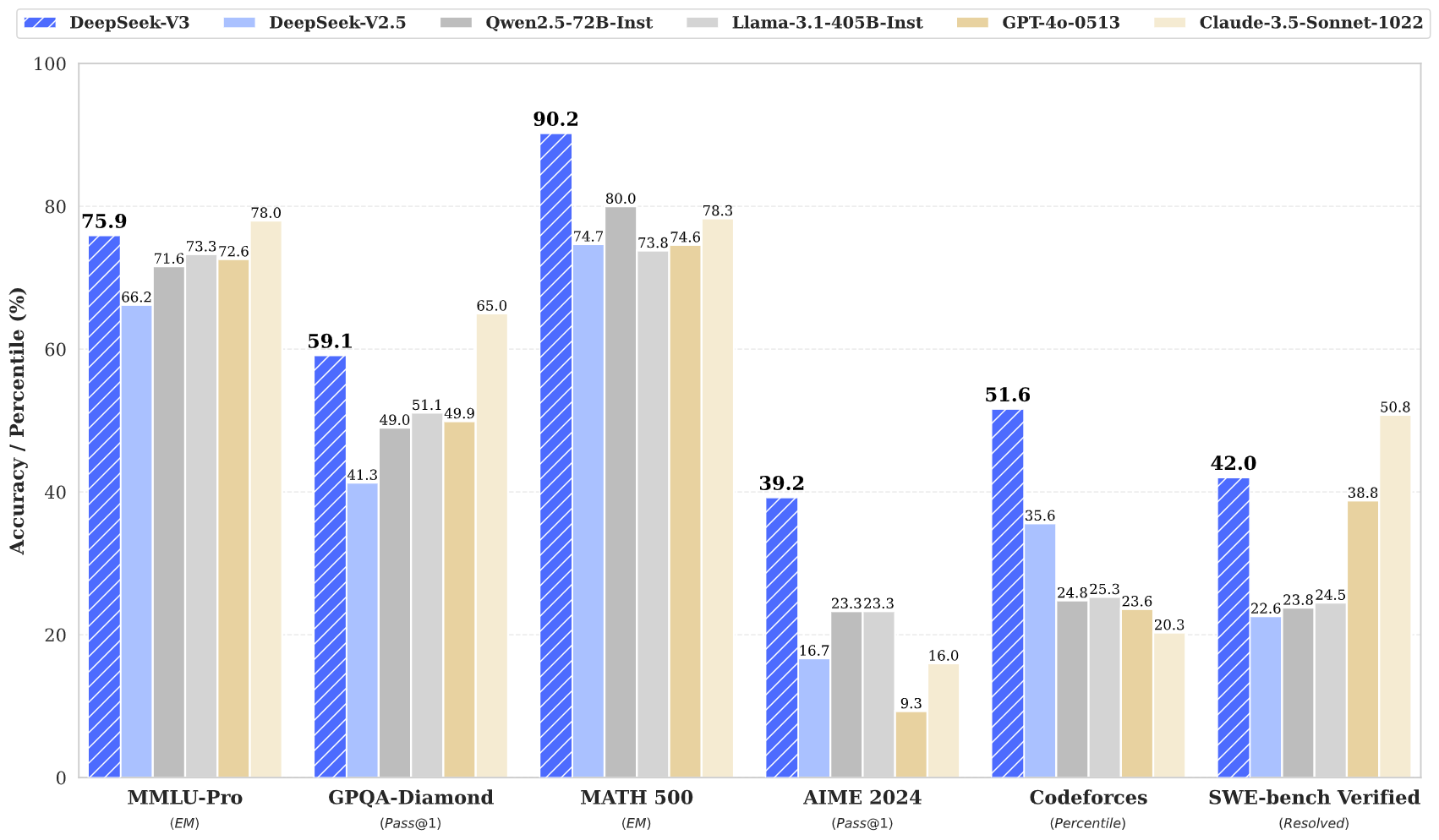

Doves worry that aggressive use of export controls will destroy the possibility of productive diplomacy on AI safety. Open Source: MIT-licensed weights, 1.5B-70B distilled variants for commercial use. Initially, DeepSeek created their first model with an architecture similar to other open models like LLaMA, aiming to outperform benchmarks. Earlier this week, DeepSeek, a well-funded Chinese AI lab, released an "open" AI model that beats many rivals on popular benchmarks. The A800 SXM primarily suffers from reduced data transfer efficiency between GPU cards, with bandwidth reduced by 33%. For example, in training a model like GPT-3 with 175 billion parameters, multiple GPUs must work together. Distillation: Efficient knowledge transfer techniques, compressing powerful AI capabilities into models as small as 1.5 billion parameters. Interestingly, despite its large parameter count, only 37 billion parameters are activated during most operations, similar to DeepSeek V3. DeepSeek V3 is based on a Mixture of Experts (MoE) transformer architecture, which selectively activates different subsets of parameters for different inputs.
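Selective activation in an MoE layer can be illustrated with a toy router. This is a generic top-k gating sketch under stated assumptions, not DeepSeek's actual layer: the class `TinyMoE`, the softmax gate, the sizes, and the use of simple scaling vectors as stand-ins for expert networks are all hypothetical.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class TinyMoE:
    """Toy MoE layer: a gate scores all experts, but only top_k of them run."""

    def __init__(self, num_experts=8, top_k=2, dim=4, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        self.gate = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each "expert" is a per-dimension scaling vector, standing in for an FFN.
        self.experts = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        self.calls = [0] * num_experts  # how often each expert actually ran

    def forward(self, x):
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in self.gate]
        probs = softmax(logits)
        ranked = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)
        chosen = ranked[: self.top_k]
        norm = sum(probs[i] for i in chosen)
        out = [0.0] * len(x)
        for i in chosen:  # experts that were not chosen are never evaluated
            self.calls[i] += 1
            weight = probs[i] / norm
            for d, xi in enumerate(x):
                out[d] += weight * self.experts[i][d] * xi
        return out, chosen


moe = TinyMoE()
output, chosen = moe.forward([1.0, -0.5, 0.25, 2.0])
active_fraction = moe.top_k / len(moe.experts)
print("experts used:", chosen, "fraction of experts active:", active_fraction)
```

Different inputs produce different gate scores and so select different experts. This is how a model can hold many parameters while using only a fraction of them per token.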
