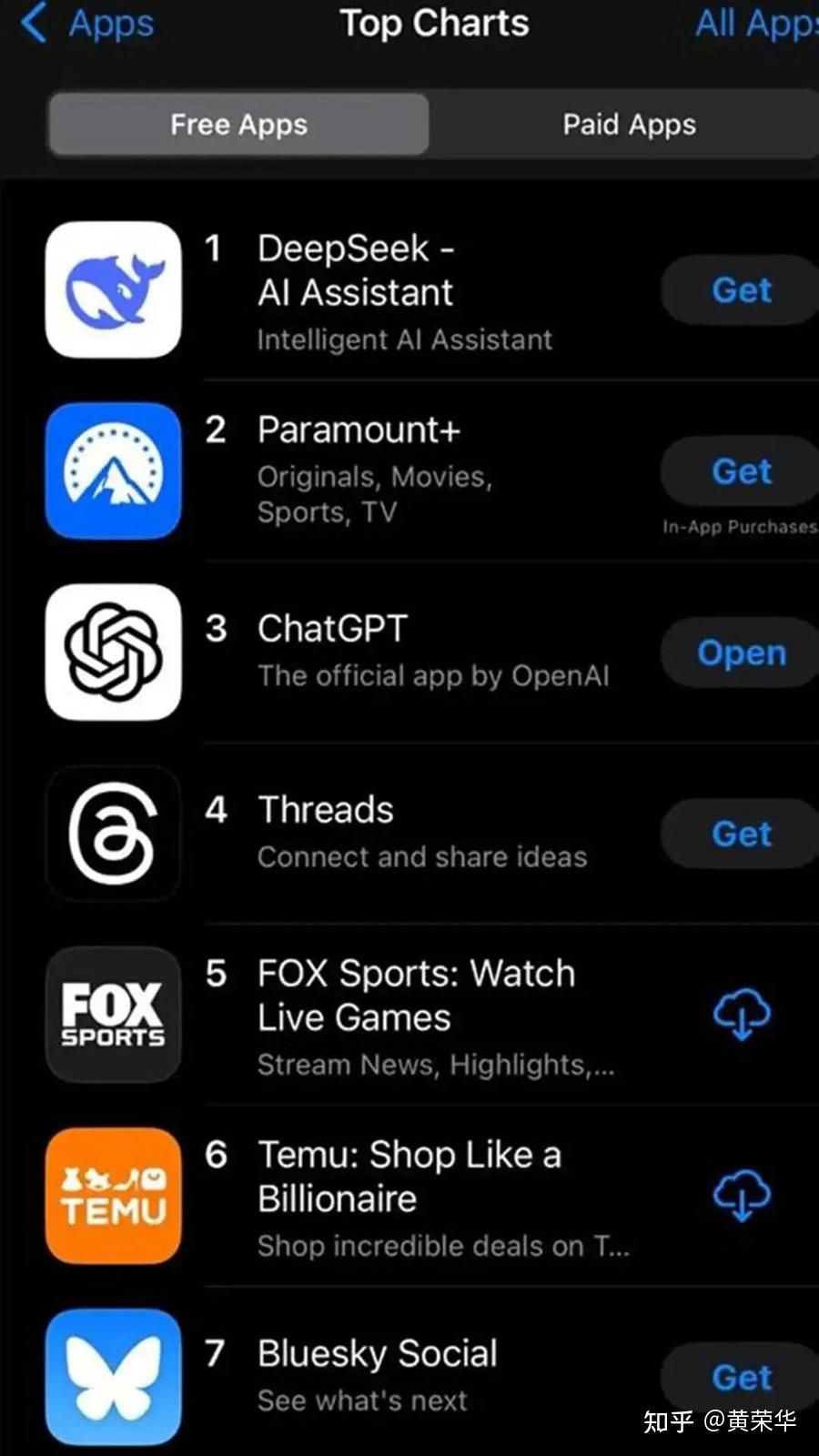

If You Don't DeepSeek Now, You'll Hate Yourself Later

Author: Hester | Posted: 25-02-13 00:20

In addition, while ChatGPT focuses on creative content generation, DeepSeek is geared toward technical analysis. Compared with DeepSeek-V2, the new pretokenizer also introduces tokens that combine punctuation and line breaks. However, this trick may introduce token boundary bias (Lundberg, 2023) when the model processes multi-line prompts without terminal line breaks, particularly for few-shot evaluation prompts. Following our previous work (DeepSeek-AI, 2024b, c), we adopt perplexity-based evaluation for datasets including HellaSwag, PIQA, WinoGrande, RACE-Middle, RACE-High, MMLU, MMLU-Redux, MMLU-Pro, MMMLU, ARC-Easy, ARC-Challenge, C-Eval, CMMLU, C3, and CCPM, and adopt generation-based evaluation for TriviaQA, NaturalQuestions, DROP, MATH, GSM8K, MGSM, HumanEval, MBPP, LiveCodeBench-Base, CRUXEval, BBH, AGIEval, CLUEWSC, CMRC, and CMath. Our evaluation is based on our internal evaluation framework integrated into our HAI-LLM framework.

During the training of DeepSeekCoder-V2 (DeepSeek-AI, 2024a), we observed that the Fill-in-Middle (FIM) strategy does not compromise next-token prediction capability while enabling the model to accurately predict middle text based on contextual cues. In alignment with DeepSeekCoder-V2, we also incorporate the FIM strategy in the pre-training of DeepSeek-V3, structuring the data with the Prefix-Suffix-Middle (PSM) framework. This structure is applied at the document level as part of the pre-packing process, and the FIM strategy is applied at a rate of 0.1, consistent with the PSM framework.
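
The FIM setup described above can be sketched as a per-document transform applied before packing: a document is cut at two random points and rearranged into Prefix-Suffix-Middle order, so the model sees the prefix and suffix before it has to generate the middle. This is a minimal illustration under stated assumptions: the 0.1 rate is treated as a per-document probability, the split points are character-level, and the sentinel strings and the names `psm_transform` and `maybe_fim` are hypothetical, not DeepSeek's actual special tokens or pipeline code.

```python
import random

# Hypothetical sentinel strings (assumption): the real special tokens are not given in this post.
FIM_BEGIN = "<fim_begin>"
FIM_HOLE = "<fim_hole>"
FIM_END = "<fim_end>"
EOS = "<eos>"


def psm_transform(doc: str, rng: random.Random) -> str:
    """Split doc at two distinct random points and emit it in Prefix-Suffix-Middle order."""
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    # The model conditions on prefix and suffix, then learns to produce the middle.
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}{middle}{EOS}"


def maybe_fim(doc: str, rng: random.Random, fim_rate: float = 0.1) -> str:
    """Apply the PSM transform with probability fim_rate; keep other documents in plain order."""
    if doc and rng.random() < fim_rate:
        return psm_transform(doc, rng)
    return doc + EOS
```

In this sketch the rate counts documents, not tokens: roughly 90% of documents stay in ordinary left-to-right order, which is one way to keep next-token prediction as the dominant training signal.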

2024), we implement the document packing method for data integrity but do not incorporate cross-sample attention masking during training. Current GPUs only support per-tensor quantization, lacking native support for fine-grained quantization like our tile- and block-wise quantization. Although the dequantization overhead is significantly mitigated when combined with our precise FP32 accumulation strategy, the frequent data movements between Tensor Cores and CUDA cores still limit computational efficiency. Ideally, the entire partial-sum accumulation and dequantization would be completed directly inside Tensor Cores until the final result is produced, avoiding frequent data movements. Thus, we recommend that future chip designs increase accumulation precision in Tensor Cores to support full-precision accumulation, or select an appropriate accumulation bit-width according to the accuracy requirements of training and inference algorithms. With its advanced algorithms and user-friendly interface, DeepSeek is setting a new standard for information discovery and search technologies. Does DeepSeek support voice search optimization?
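
Why per-tensor scaling falls short can be shown with a small simulation: one outlier sets the scaling factor for every value that shares it, pushing small values into FP8's coarse subnormal range. The sketch below compares a single per-tensor scale with one scale per 1x128 tile. It assumes the FP8 E4M3 format (3 mantissa bits, largest finite value 448, smallest normal 2^-6, subnormal spacing 2^-9) and emulates its rounding in plain Python without NaN handling; `round_to_e4m3`, `quantize_groups`, and `max_rel_error` are hypothetical helpers, not a real FP8 kernel or DeepSeek's implementation.

```python
import math

E4M3_MAX = 448.0                 # largest finite FP8 E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6      # smallest normal E4M3 magnitude
E4M3_SUBNORMAL_STEP = 2.0 ** -9  # spacing of E4M3 subnormals


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value (saturating, no NaN handling)."""
    a = min(abs(x), E4M3_MAX)
    if a == 0.0:
        return 0.0
    if a < E4M3_MIN_NORMAL:
        step = E4M3_SUBNORMAL_STEP       # subnormals are evenly spaced
    else:
        _, e = math.frexp(a)             # a = m * 2**e with 0.5 <= m < 1
        step = 2.0 ** (e - 4)            # 3 mantissa bits across binade [2**(e-1), 2**e)
    return math.copysign(round(a / step) * step, x)


def quantize_groups(values, group_size):
    """Quantize with one scale per group of group_size values; return dequantized values and scales."""
    dequantized, scales = [], []
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        amax = max(abs(v) for v in group) or 1.0  # avoid dividing by zero for an all-zero group
        scale = amax / E4M3_MAX                    # map the group's largest magnitude to 448
        scales.append(scale)
        dequantized.extend(round_to_e4m3(v / scale) * scale for v in group)
    return dequantized, scales


def max_rel_error(original, approx):
    """Largest relative error over the nonzero original values."""
    return max(abs(a - o) / abs(o) for o, a in zip(original, approx) if o != 0.0)


# Two 1x128 tiles of small activations; a single outlier sits in the first tile.
acts = [0.001 * (i % 7 + 1) for i in range(256)]
acts[0] = 100.0

per_tensor, _ = quantize_groups(acts, group_size=len(acts))
per_tile, tile_scales = quantize_groups(acts, group_size=128)
print(max_rel_error(acts[128:], per_tensor[128:]))  # large: the outlier's scale is shared
print(max_rel_error(acts[128:], per_tile[128:]))    # tiny: the second tile has its own scale
```

With per-tile scales the outlier only degrades the 128 values that share its tile; hardware that supports only per-tensor scaling leaves the choice between one shared scale and extra dequantization work outside the Tensor Cores.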

Current implementations struggle to efficiently support online quantization, despite its effectiveness demonstrated in our research. In our workflow, activations during the forward pass are quantized into 1x128 FP8 tiles and stored. During the backward pass, the matrix must be read out, dequantized, transposed, re-quantized into 128x1 tiles, and stored in HBM. In the existing process, we need to read 128 BF16 activation values (the output of the previous computation) from HBM (High Bandwidth Memory) for quantization, and the quantized FP8 values are then written back to HBM, only to be read again for MMA. To address this inefficiency, we recommend that future chips integrate FP8 cast and TMA (Tensor Memory Accelerator) access into a single fused operation, so quantization can be completed during the transfer of activations from global memory to shared memory, avoiding frequent memory reads and writes. We also recommend supporting a warp-level cast instruction for speedup, which further facilitates better fusion of layer normalization and FP8 cast. We can speculate about what the big model labs are doing.

Each MoE layer consists of 1 shared expert and 256 routed experts, where the intermediate hidden dimension of each expert is 2048. Among the routed experts, 8 experts will be activated for each token, and each token will be sent to at most 4 nodes.
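
The cost of the unfused quantization path can be estimated with simple byte counting. The sketch below tallies HBM traffic for quantizing activations in groups of 128 under simplifying assumptions that are ours, not the source's: BF16 inputs take 2 bytes, FP8 outputs take 1 byte, each group carries one FP32 scaling factor that is written once and read once, and caches are ignored. `num_groups`, `hbm_bytes_unfused`, and `hbm_bytes_fused` are hypothetical names.

```python
BF16_BYTES = 2
FP8_BYTES = 1
SCALE_BYTES = 4  # assumption: one FP32 scaling factor per group


def num_groups(n: int, group: int = 128) -> int:
    """Number of quantization groups needed for n values (ceiling division)."""
    return -(-n // group)


def hbm_bytes_unfused(n: int, group: int = 128) -> int:
    """Read BF16, write FP8 plus scales back to HBM, then read FP8 plus scales again for the MMA."""
    scales = num_groups(n, group) * SCALE_BYTES
    read_bf16 = n * BF16_BYTES
    write_fp8 = n * FP8_BYTES + scales
    read_fp8 = n * FP8_BYTES + scales
    return read_bf16 + write_fp8 + read_fp8


def hbm_bytes_fused(n: int) -> int:
    """Cast to FP8 during the global-to-shared transfer: HBM only sees the BF16 read."""
    return n * BF16_BYTES
```

For a single 128-value tile this gives 520 bytes unfused against 256 bytes fused, so under these assumptions fusing the cast into the TMA transfer roughly halves the HBM traffic of the quantization step.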

We leverage pipeline parallelism to deploy different layers of a model on different GPUs, and for each layer, the routed experts will be uniformly deployed on 64 GPUs belonging to 8 nodes.

DeepSeek has quickly become a key player in the AI industry by overcoming significant challenges, such as US export controls on advanced GPUs. What Does This Mean for the AI Industry at Large? If made into law, this could mean that Chinese AI apps like DeepSeek AI would no longer be legally accessible from the U.S. This doesn't mean that we know for a fact that DeepSeek distilled 4o or Claude, but frankly, it would be odd if they didn't. Besides, it ensures that the AI does not store unnecessary user data and uses anonymization techniques when needed.

This method ensures that errors remain within acceptable bounds while maintaining computational efficiency. Also, our data processing pipeline is refined to minimize redundancy while maintaining corpus diversity. Through this two-phase extension training, DeepSeek-V3 is capable of handling inputs up to 128K in length while maintaining strong performance.

Significantly lower training costs: DeepSeek's reported training cost for DeepSeek-V3, the base model underlying R1, was only about $6 million, while OpenAI's comparable models reportedly cost hundreds of millions of dollars. DeepSeek-V3 is a general-purpose model, while DeepSeek-R1 focuses on reasoning tasks.
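
The expert counts above fix the arithmetic of the deployment: 256 routed experts spread uniformly over 64 GPUs on 8 nodes gives 8 GPUs per node, 4 experts per GPU, and 32 experts per node. The sketch below shows one way to honor the limit of at most 4 nodes per token while still activating 8 routed experts: rank nodes by the sum of their 2 best expert scores (8 experts divided by 4 nodes), keep the best 4 nodes, then take the top 8 experts among them. The node-ranking rule, the contiguous expert-to-node placement, and the function names are assumptions for illustration, not a confirmed description of DeepSeek-V3's router; the shared expert, which every token uses, is left out.

```python
import heapq
from typing import List

NUM_ROUTED_EXPERTS = 256
NUM_NODES = 8
GPUS_PER_NODE = 64 // NUM_NODES                          # 8
EXPERTS_PER_NODE = NUM_ROUTED_EXPERTS // NUM_NODES       # 32
EXPERTS_PER_GPU = EXPERTS_PER_NODE // GPUS_PER_NODE      # 4
TOP_K = 8       # routed experts activated per token
MAX_NODES = 4   # a token may be sent to at most this many nodes


def node_of(expert: int) -> int:
    """Assumption: experts are placed contiguously, 32 per node."""
    return expert // EXPERTS_PER_NODE


def node_limited_top_k(scores: List[float], top_k: int = TOP_K,
                       max_nodes: int = MAX_NODES) -> List[int]:
    """Pick top_k routed experts for one token while touching at most max_nodes nodes."""
    per_node = top_k // max_nodes  # the 2 best experts summarize each node
    node_strength = []
    for node in range(NUM_NODES):
        node_scores = scores[node * EXPERTS_PER_NODE:(node + 1) * EXPERTS_PER_NODE]
        node_strength.append((sum(heapq.nlargest(per_node, node_scores)), node))
    allowed = {node for _, node in heapq.nlargest(max_nodes, node_strength)}
    candidates = [e for e in range(NUM_ROUTED_EXPERTS) if node_of(e) in allowed]
    return sorted(heapq.nlargest(top_k, candidates, key=lambda e: scores[e]))
```

In this sketch, `max_nodes` trades routing freedom for communication: a token never crosses more than 4 node boundaries, at the price of occasionally skipping a high-scoring expert that lives on a fifth node.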

If you enjoyed this article and would like more information about Deep Seek, please visit the website.
