The Insider Secrets Of Deepseek Discovered


Posted by Doug on 25-02-13 12:42

Multiple estimates put DeepSeek at between 20K (per ChinaTalk) and 50K (per Dylan Patel) A100-equivalent GPUs. Nvidia quickly made new versions of its A100 and H100 GPUs, named the A800 and H800, that are effectively just as capable. Lower bounds for compute are essential to understanding the progress of technology and peak efficiency, but without substantial compute headroom to experiment on large-scale models, DeepSeek-V3 would never have existed. You train the most capable models you can, and then people figure out how to use them; the thing he is asking for is neither possible nor coherent at the lab level, and people will use it for whatever makes the most sense for them. U.S. investments will be either (1) prohibited or (2) notifiable, depending on whether they pose an acute national security threat or could contribute to a national security threat to the United States, respectively. Later, in the DeepSeek-V2 sections, they make some changes that affect how this part works, so we'll cover it in more detail there.

DeepSeek built custom multi-GPU communication protocols to make up for the slower communication speed of the H800 and to optimize pretraining throughput. It is strongly correlated with how much progress you or the organization you're joining can make. Both browsers have vim extensions installed, so I can navigate much of the web without using a cursor. For the last week, I've been using DeepSeek V3 as my daily driver for regular chat tasks. Claims of top performance: Alibaba's internal benchmarks show Qwen2.5-Max edging out DeepSeek V3 on several tasks. This innovative model demonstrates exceptional performance across various benchmarks, including mathematics, coding, and multilingual tasks. DeepSeek is an advanced open-source large language model (LLM). A reasoning model is a large language model told to "think step by step" before it gives a final answer. Model-based reward models were made by starting with an SFT checkpoint of V3, then finetuning on human preference data containing both the final reward and the chain of thought leading to that reward. She is a highly enthusiastic person with a keen interest in machine learning, data science and AI, and an avid reader of the latest developments in these fields. While NVLink speed is cut to 400GB/s, that is not restrictive for most of the parallelism strategies in use, such as 8x Tensor Parallel, Fully Sharded Data Parallel, and Pipeline Parallelism.
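To make "8x Tensor Parallel" concrete: tensor parallelism splits each weight matrix across several GPUs so that every GPU holds and multiplies only a slice. What follows is a minimal sketch of the column-parallel variant, assuming plain Python lists stand in for per-GPU tensors and list concatenation stands in for the all-gather collective. The function names are hypothetical, and this is not DeepSeek's or any framework's implementation.

```python
# Minimal sketch of column-parallel tensor parallelism (pure Python, no GPUs).
# Assumption: each "device" is simulated by a list slice, and the all-gather
# collective is simulated by concatenating per-shard outputs.

def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows."""
    k = len(b)
    n = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)] for row in a]


def split_columns(w, shards):
    """Split the columns of w into `shards` contiguous, equal-width slices."""
    n = len(w[0])
    assert n % shards == 0, "column count must divide evenly across shards"
    width = n // shards
    return [[row[s * width:(s + 1) * width] for row in w] for s in range(shards)]


def tensor_parallel_matmul(x, w, shards=8):
    """Each simulated shard computes x @ w_shard; concatenating the outputs
    column-wise (an all-gather on real hardware) reproduces x @ w."""
    partials = [matmul(x, w_shard) for w_shard in split_columns(w, shards)]
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


x = [[1, 2, 3], [4, 5, 6]]                                # 2 x 3 activations
w = [[(i + j) % 5 for j in range(16)] for i in range(3)]  # 3 x 16 weights
assert tensor_parallel_matmul(x, w, shards=8) == matmul(x, w)
```

Splitting by columns gives each shard a disjoint slice of the output, so only a gather is needed. Splitting the weights by rows instead leaves each shard with a partial sum that must be added across devices (an all-reduce). Either way, the shards exchange activations at every layer, which is why interconnect bandwidth such as NVLink matters for this strategy.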

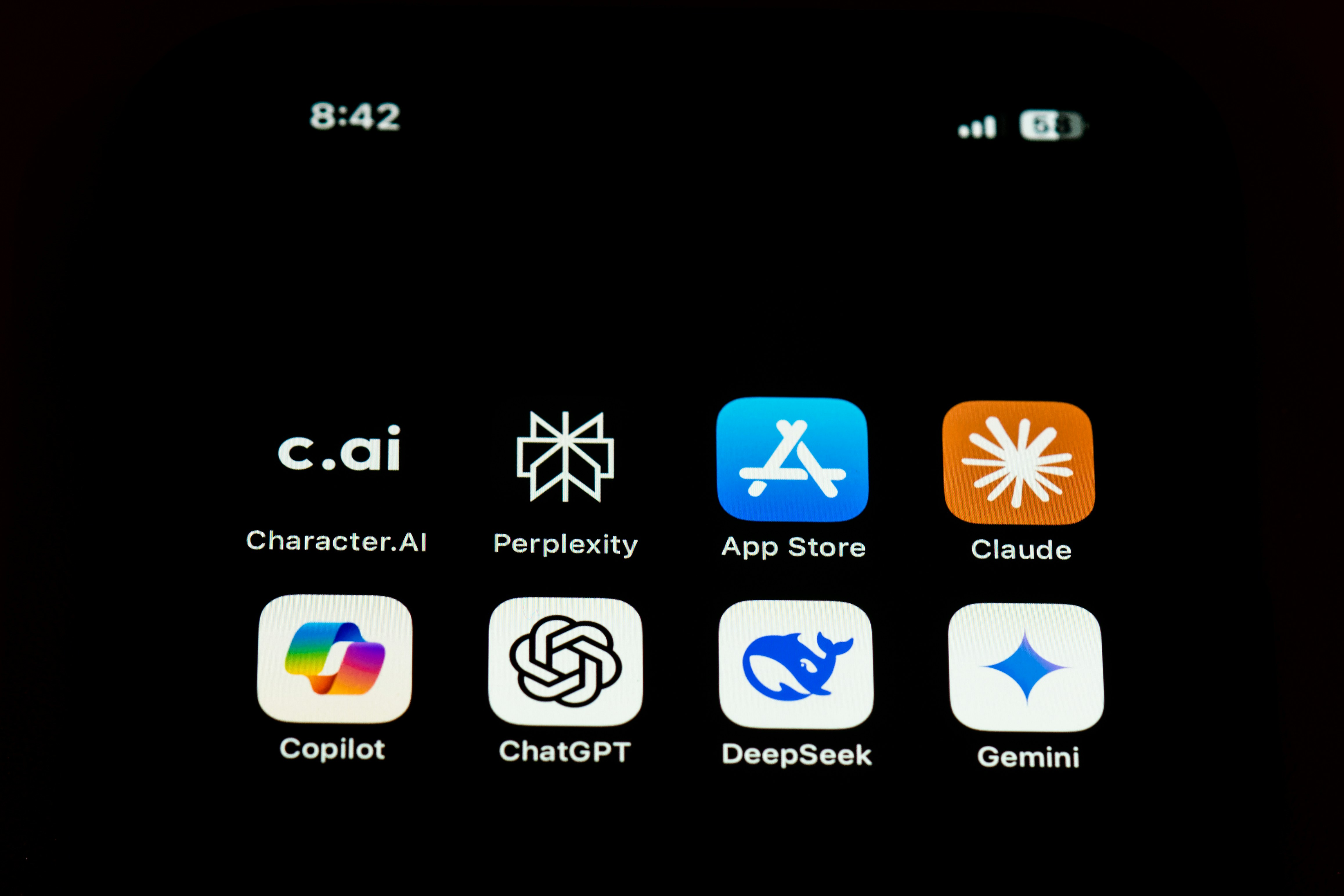

We'll get into the specific numbers below, but the question is which of the many technical innovations listed in the DeepSeek V3 report contributed most to its learning efficiency, i.e. model performance relative to compute used. Llama 3 405B used 30.8M GPU hours for training, compared with DeepSeek V3's 2.6M GPU hours (more details in the Llama 3 model card). First, we have to contextualize the GPU hours themselves. Amid the widespread and loud praise, there has been some skepticism about how much of this report consists of genuinely novel breakthroughs, à la "did DeepSeek really need Pipeline Parallelism" or "HPC has been doing this kind of compute optimization forever (or also in TPU land)".

Now, build your first RAG pipeline with Haystack components. With this version, we're introducing the first steps toward a fully fair evaluation and scoring system for source code.

Other companies that have been in the soup since the release of the newcomer model are Meta and Microsoft. They have their own AI models, Llama and Copilot, in which they had invested billions, and they have now been hit hard by the sudden fall in US tech stocks. For Chinese companies feeling the pressure of substantial chip export controls, it should not be seen as particularly surprising that the attitude is "Wow, we can do way more than you with less." I'd probably do the same in their shoes; it is far more motivating than "my cluster is bigger than yours." This goes to say that we need to understand how important the narrative of compute numbers is to their reporting.
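As a worked example of the Llama 3 versus DeepSeek V3 comparison, the ratio of the two quoted GPU-hour figures can be computed directly. This minimal sketch takes the 30.8M and 2.6M figures from this post at face value. The constant and function names are hypothetical. The ratio counts every GPU hour as equal regardless of hardware, and that is exactly why the GPU hours need contextualizing.

```python
# Back-of-the-envelope comparison of the training budgets quoted in this post.
# Assumption: the figures are taken as stated here and not independently verified.
LLAMA3_405B_GPU_HOURS = 30.8e6
DEEPSEEK_V3_GPU_HOURS = 2.6e6


def compute_ratio(larger, smaller):
    """Return how many times more GPU hours `larger` used than `smaller`."""
    return larger / smaller


ratio = compute_ratio(LLAMA3_405B_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)
# prints: Llama 3 405B used about 11.8x the GPU hours of DeepSeek V3
print(f"Llama 3 405B used about {ratio:.1f}x the GPU hours of DeepSeek V3")
```

A roughly 12x gap in raw GPU hours is the headline number. It says nothing by itself about which of the report's innovations produced the efficiency.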

