Better Call SAL

페이지 정보

작성자 Tania Decoteau 작성일25-02-14 12:35 조회107회 댓글0건본문

This leads us to Chinese AI startup DeepSeek. DeepSeek was founded lower than two years ago by the Chinese hedge fund High Flyer as a research lab dedicated to pursuing Artificial General Intelligence, or AGI. Similar cases have been noticed with different models, like Gemini-Pro, which has claimed to be Baidu's Wenxin when asked in Chinese. Some American AI researchers have solid doubt on DeepSeek’s claims about how a lot it spent, and how many superior chips it deployed to create its mannequin. Both DeepSeek and US AI firms have a lot more money and many more chips than they used to prepare their headline models. Rosie Campbell turns into the newest worried individual to depart OpenAI after concluding they will can’t have sufficient optimistic impact from the inside. At a supposed price of just $6 million to practice, DeepSeek’s new R1 model, released last week, was in a position to match the performance on several math and reasoning metrics by OpenAI’s o1 mannequin - the outcome of tens of billions of dollars in funding by OpenAI and its patron Microsoft. Now, hastily, it’s like, "Oh, OpenAI has a hundred million customers, and we want to build Bard and Gemini to compete with them." That’s a very completely different ballpark to be in.

And it’s simply the newest headwind for the group. T. Rowe Price Science and Technology equity technique portfolio manager Tony Wang instructed me he sees the group as "well positioned," while Stifel’s Ruben Roy additionally sees upside, citing DeepSeek’s R1 mannequin as a driver of world demand for sturdy and excessive-velocity networking infrastructure. Being excited about progress in science is something that we should all need, and seeing the cost of a crucial resource come down can also be one thing we should always need," explained Zack Kass, OpenAI’s former head of Go-To-Market. The Facebook/React staff have no intention at this point of fixing any dependency, as made clear by the fact that create-react-app is now not updated and so they now advocate different tools (see further down). Updated on 1st February - You need to use the Bedrock playground for understanding how the mannequin responds to numerous inputs and letting you superb-tune your prompts for optimum results. Cloud prospects will see these default fashions appear when their instance is up to date. The Guardian. Cite error: The named reference "vincent" was outlined multiple times with totally different content (see the help page). See my record of GPT achievements.

And it’s simply the newest headwind for the group. T. Rowe Price Science and Technology equity technique portfolio manager Tony Wang instructed me he sees the group as "well positioned," while Stifel’s Ruben Roy additionally sees upside, citing DeepSeek’s R1 mannequin as a driver of world demand for sturdy and excessive-velocity networking infrastructure. Being excited about progress in science is something that we should all need, and seeing the cost of a crucial resource come down can also be one thing we should always need," explained Zack Kass, OpenAI’s former head of Go-To-Market. The Facebook/React staff have no intention at this point of fixing any dependency, as made clear by the fact that create-react-app is now not updated and so they now advocate different tools (see further down). Updated on 1st February - You need to use the Bedrock playground for understanding how the mannequin responds to numerous inputs and letting you superb-tune your prompts for optimum results. Cloud prospects will see these default fashions appear when their instance is up to date. The Guardian. Cite error: The named reference "vincent" was outlined multiple times with totally different content (see the help page). See my record of GPT achievements.

After trying out the mannequin detail page including the model’s capabilities, and implementation guidelines, you possibly can directly deploy the mannequin by offering an endpoint title, choosing the variety of situations, and deciding on an occasion sort. The outcomes turned out to be better than the optimized kernels developed by skilled engineers in some circumstances. The experiment was to robotically generate GPU attention kernels that had been numerically appropriate and optimized for different flavors of consideration with none explicit programming. The level-1 fixing price in KernelBench refers to the numerical right metric used to guage the ability of LLMs to generate environment friendly GPU kernels for particular computational tasks. So far, China appears to have struck a practical stability between content management and quality of output, impressing us with its potential to keep up prime quality within the face of restrictions. Not to say Apple also makes the best mobile chips, so can have a decisive advantage operating local models too. It appears implausible, and I will test it for positive. Experts say the sluggish economy, high unemployment and Covid lockdowns have all played a job on this sentiment, whereas the Communist Party's tightening grip has additionally shrunk outlets for individuals to vent their frustrations.

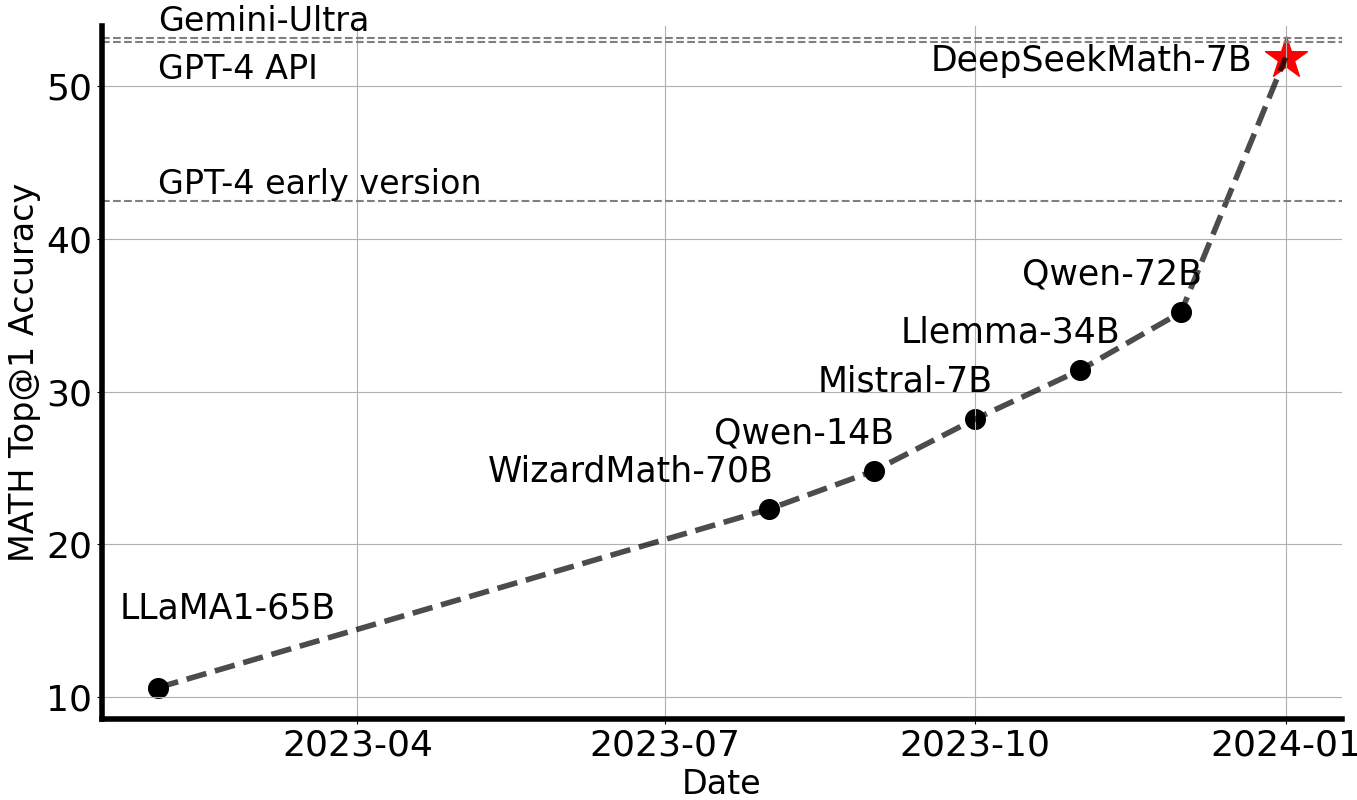

ChatGPT is a time period most persons are acquainted with. As the field of large language models for mathematical reasoning continues to evolve, the insights and methods presented in this paper are likely to inspire additional developments and contribute to the event of even more succesful and versatile mathematical AI methods. The researchers have developed a new AI system known as DeepSeek-Coder-V2 that goals to overcome the restrictions of present closed-supply models in the field of code intelligence. Do you use or have constructed another cool software or framework? Yep, AI enhancing the code to make use of arbitrarily large assets, positive, why not. The paper presents a brand new giant language model known as DeepSeekMath 7B that is particularly designed to excel at mathematical reasoning. Smoothquant: Accurate and efficient put up-coaching quantization for big language fashions. At the big scale, we train a baseline MoE mannequin comprising approximately 230B total parameters on round 0.9T tokens. We validate our FP8 mixed precision framework with a comparison to BF16 training on prime of two baseline fashions across different scales. A preferred technique for avoiding routing collapse is to pressure "balanced routing", i.e. the property that every skilled is activated roughly an equal variety of occasions over a sufficiently large batch, by including to the coaching loss a term measuring how imbalanced the knowledgeable routing was in a selected batch.

ChatGPT is a time period most persons are acquainted with. As the field of large language models for mathematical reasoning continues to evolve, the insights and methods presented in this paper are likely to inspire additional developments and contribute to the event of even more succesful and versatile mathematical AI methods. The researchers have developed a new AI system known as DeepSeek-Coder-V2 that goals to overcome the restrictions of present closed-supply models in the field of code intelligence. Do you use or have constructed another cool software or framework? Yep, AI enhancing the code to make use of arbitrarily large assets, positive, why not. The paper presents a brand new giant language model known as DeepSeekMath 7B that is particularly designed to excel at mathematical reasoning. Smoothquant: Accurate and efficient put up-coaching quantization for big language fashions. At the big scale, we train a baseline MoE mannequin comprising approximately 230B total parameters on round 0.9T tokens. We validate our FP8 mixed precision framework with a comparison to BF16 training on prime of two baseline fashions across different scales. A preferred technique for avoiding routing collapse is to pressure "balanced routing", i.e. the property that every skilled is activated roughly an equal variety of occasions over a sufficiently large batch, by including to the coaching loss a term measuring how imbalanced the knowledgeable routing was in a selected batch.

댓글목록

등록된 댓글이 없습니다.