How To Get Better At DeepSeek In 60 Minutes

Author: Modesto | Posted: 25-02-22 23:23

DeepSeek outperforms its competitors in several crucial areas, notably size, flexibility, and API handling. DeepSeek-V2.5 was released on September 6, 2024, and is available on Hugging Face with both web and API access. Try DeepSeek Chat: spend some time experimenting with the free web interface. A paperless system would require significant work up front, as well as some extra training time for everyone, but it does pay off in the long term. But in any case, the myth of a first-mover advantage is well understood. …" problem is addressed through de minimis standards, which usually apply at 25 percent of the final value of the product but in some cases apply if there is any U.S. … Through continuous exploration of deep learning and natural language processing, DeepSeek has demonstrated its unique value in empowering content creation: not only can it efficiently generate rigorous business analysis, but it also brings breakthrough innovations to creative fields such as character creation and narrative structure.

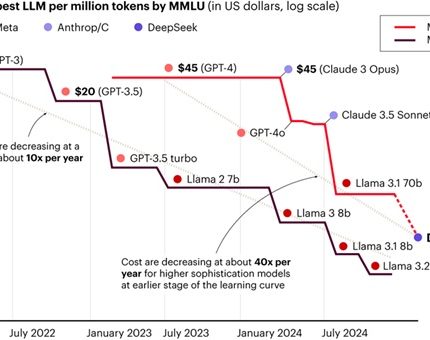

Expert recognition and praise: the new model has received significant acclaim from industry professionals and AI observers for its performance and capabilities. Since DeepSeek released R1, a large language model, this has changed and the tech industry has gone haywire. Megacap tech companies were hit especially hard. Liang Wenfeng: "Major companies' models may be tied to their platforms or ecosystems, whereas we're completely free."

DeepSeek-V3 demonstrates competitive performance, on par with top-tier models such as LLaMA-3.1-405B, GPT-4o, and Claude-Sonnet 3.5, while significantly outperforming Qwen2.5 72B. Moreover, DeepSeek-V3 excels on MMLU-Pro, a more challenging educational knowledge benchmark, where it closely trails Claude-Sonnet 3.5. On MMLU-Redux, a refined version of MMLU with corrected labels, DeepSeek-V3 surpasses its peers. For efficient inference and economical training, DeepSeek-V3 also adopts Multi-head Latent Attention (MLA) and DeepSeekMoE, both of which were thoroughly validated in DeepSeek-V2. On the other hand, it does not have a built-in image generation function and still runs into some processing problems. The model is optimized for writing, instruction-following, and coding tasks, and it introduces function calling for interaction with external tools.

The models, which are available for download from the AI development platform Hugging Face, are part of a new model family that DeepSeek calls Janus-Pro. While most other Chinese AI companies are content to "copy" existing open-source models, such as Meta's Llama, to build their applications, Liang went further. In internal Chinese evaluations, DeepSeek-V2.5 surpassed GPT-4o mini and ChatGPT-4o-latest. Accessibility and licensing: DeepSeek-V2.5 is designed to be broadly accessible while maintaining certain ethical standards. Finding ways to navigate these restrictions while maintaining the integrity and performance of its models will help DeepSeek achieve broader acceptance and success in various markets. Its performance in benchmarks and third-party evaluations positions it as a strong competitor to proprietary models. Technical improvements: the model incorporates advanced features to improve performance and efficiency. The AI model offers a suite of advanced features that redefine how we interact with information, automate processes, and support informed decision-making.

DeepSeek startled everyone last month with the claim that its AI model uses roughly one-tenth the computing power of Meta's Llama 3.1 model, upending an entire worldview of how much energy and resources it will take to develop artificial intelligence. In fact, the reason I spent so much time on V3 is that it was the model that really demonstrated many of the dynamics that seem to be generating so much surprise and controversy. This breakthrough enables practical deployment of sophisticated reasoning models that traditionally require extensive computation time. GPTQ models are available for GPU inference, with multiple quantisation parameter options. DeepSeek's models are recognized for their efficiency and cost-effectiveness. And Chinese companies are already selling their technologies through the Belt and Road Initiative and through investments in markets that private Western investors often neglect. AI observer Shin Megami Boson confirmed it as the top-performing open-source model on his private GPQA-like benchmark.