The Fundamental Facts of DeepSeek

Posted by Frederick on 25-02-27 06:25

This is the minimum bar that I expect elite programmers to be striving for in the age of AI. DeepSeek should be studied as an example, and this is just the first of many projects from them. There is an extremely high chance (in fact a 99.9% probability) that an AI did not build this, and those who are able to build or adapt projects like this, which go deep into hardware systems, will be the most sought after. Not the horrendous JS or even TS slop all over GitHub that is extremely easy for an AI to generate correctly. You've got until 2030 to decide.

Each expert has a corresponding expert vector of the same dimension, and we decide which experts become activated by looking at which of them have the highest inner products with the current residual stream. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead. In contrast, a public API can (usually) also be imported into other packages.

DeepSeek’s research paper suggests that either the most advanced chips are not needed to create high-performing AI models, or that Chinese companies can still source chips in sufficient quantities, or a combination of both.
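The expert-selection rule described above (score every expert by the inner product of its vector with the residual stream, then activate the highest scorers) can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `dot` and `route_top_k`, the parameter `k`, and the toy vectors are hypothetical and not taken from DeepSeek's code, and a real router would also normalize the scores and balance load across experts.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two vectors of the same dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum(x * y for x, y in zip(a, b))


def route_top_k(
    residual: Sequence[float],
    expert_vectors: Sequence[Sequence[float]],
    k: int,
) -> List[int]:
    """Return the indices of the k experts whose vectors have the
    highest inner product with the residual stream (hypothetical helper)."""
    if not 1 <= k <= len(expert_vectors):
        raise ValueError("k must be between 1 and the number of experts")
    scores = [dot(residual, e) for e in expert_vectors]
    # Rank expert indices by score, highest first.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Toy data, made up for illustration only.
    residual = [1.0, 0.0, -1.0]
    experts = [
        [0.5, 0.0, 0.5],    # inner product 0.0
        [1.0, 1.0, 0.0],    # inner product 1.0
        [0.0, 2.0, -2.0],   # inner product 2.0
        [-1.0, 0.0, 1.0],   # inner product -2.0
    ]
    print(route_top_k(residual, experts, k=2))  # [2, 1]
```

Only the selected experts would then process the token, which is how a model can hold many parameters while activating a small share of them per token.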

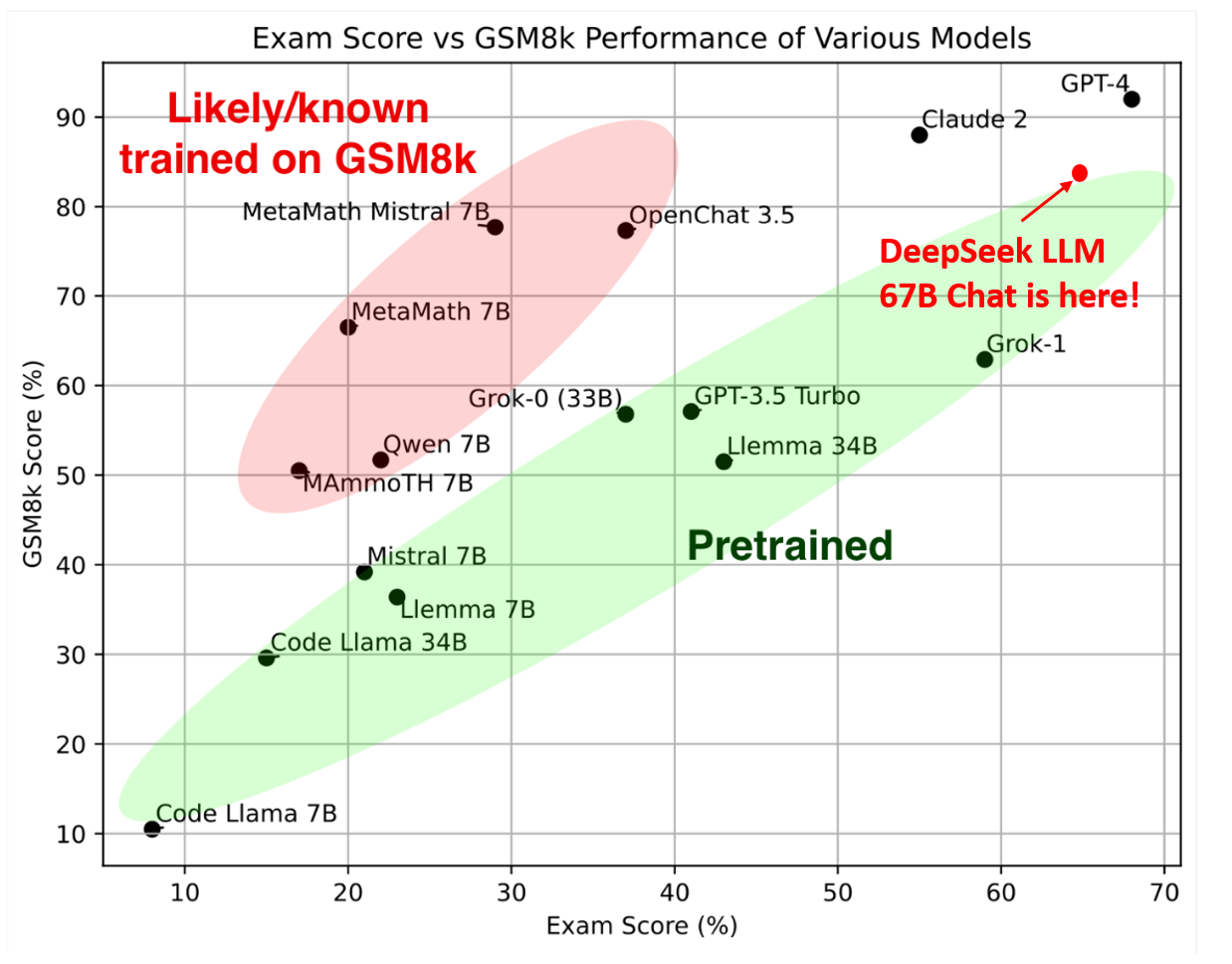

Tanishq Abraham, former research director at Stability AI, said he was not surprised by China’s level of progress in AI given the rollout of various models by Chinese companies such as Alibaba and Baichuan. DeepSeek is a Chinese AI startup specializing in developing open-source large language models (LLMs), working in the same field as OpenAI. To further push the boundaries of open-source model capabilities, we scale up our models and introduce DeepSeek-V3, a large Mixture-of-Experts (MoE) model with 671B parameters, of which 37B are activated for each token. The Sequence Chat: We discuss the challenges of interpretability in the era of mega large models. And most impressively, DeepSeek has released a "reasoning model" that legitimately challenges OpenAI’s o1 model capabilities across a range of benchmarks. Furthermore, these challenges will only get harder as the latest GPUs get faster. Furthermore, we meticulously optimize the memory footprint, making it possible to train DeepSeek-V3 without using costly tensor parallelism. During pre-training, we train DeepSeek-V3 on 14.8T high-quality and diverse tokens. In their research paper, DeepSeek’s engineers said they had used about 2,000 Nvidia H800 chips, which are less advanced than the most cutting-edge chips, to train its model. For the US government, DeepSeek’s arrival on the scene raises questions about its strategy of trying to contain China’s AI advances by restricting exports of high-end chips.

For the same reason, this expanded Foreign Direct Product Rule (FDPR) will also apply to exports of equipment made by foreign-headquartered companies, such as ASML of the Netherlands, Tokyo Electron of Japan, and SEMES of South Korea. The new SME FDPR and Entity List FDPR for Footnote 5 entities take the logic underpinning the second approach and extend it further. Based on our evaluation, the acceptance rate of the second token prediction ranges between 85% and 90% across various generation topics, demonstrating consistent reliability. Here, we see a clear separation between Binoculars scores for human and AI-written code for all token lengths, with the expected result of the human-written code having a higher score than the AI-written. 0.9 per output token compared with GPT-4o's $15. Despite its low price, it was profitable compared with its money-losing rivals. Despite its economical training costs, comprehensive evaluations reveal that DeepSeek-V3-Base has emerged as the strongest open-source base model currently available, especially in code and math. State-of-the-art performance among open code models. These two architectures have been validated in DeepSeek-V2 (DeepSeek-AI, 2024c), demonstrating their capability to maintain robust model performance while achieving efficient training and inference. I really do. Two years ago, I wrote a new … The past 2 years have also been great for research.
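To give a feel for what an 85% to 90% acceptance rate of the second predicted token could mean for decoding, here is a rough back-of-the-envelope sketch. It assumes a simplified model that is not spelled out in the text: each decoding step always emits one token, and the second predicted token is kept with probability equal to the acceptance rate. The function name `expected_tokens_per_step` is hypothetical, and the estimate ignores any verification overhead.

```python
def expected_tokens_per_step(acceptance_rate: float) -> float:
    """Expected tokens emitted per decoding step under the simplified
    assumption: one guaranteed token, plus a second token that is kept
    with probability `acceptance_rate`."""
    if not 0.0 <= acceptance_rate <= 1.0:
        raise ValueError("acceptance_rate must be between 0 and 1")
    return 1.0 + acceptance_rate


if __name__ == "__main__":
    # The two ends of the range quoted in the text.
    for rate in (0.85, 0.90):
        tokens = expected_tokens_per_step(rate)
        print(f"acceptance {rate:.0%}: about {tokens:.2f} tokens per step")
```

Under these assumptions the quoted range corresponds to roughly 1.85 to 1.90 tokens per step, an idealized upper estimate rather than a measured speedup.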

Abraham, the former research director at Stability AI, said perceptions may be skewed by the fact that, unlike DeepSeek, companies such as OpenAI have not made their most advanced models freely available to the public. Secondly, DeepSeek-V3 employs a multi-token prediction training objective, which we have observed to enhance the overall performance on evaluation benchmarks. With a forward-looking perspective, we consistently strive for strong model performance and economical costs. Consequently, our pre-training stage is completed in less than two months and costs 2664K GPU hours. Through the support for FP8 computation and storage, we achieve both accelerated training and reduced GPU memory usage. At an economical cost of only 2.664M H800 GPU hours, we complete the pre-training of DeepSeek-V3 on 14.8T tokens, producing the currently strongest open-source base model. If pursued, these efforts could yield a better evidence base for decisions by AI labs and governments regarding publication decisions and AI policy more broadly. 6 million training cost, but they likely conflated DeepSeek-V3 (the base model released in December last year) and DeepSeek-R1.
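The figures quoted in this article can be cross-checked with simple arithmetic: 2.664M GPU hours spread over the roughly 2,000 H800 chips mentioned earlier should come out to under two months of wall-clock time. The sketch below assumes all GPUs run continuously for the whole period. The rental price of $2 per GPU hour is an assumption that this article does not state, included only to show how a dollar figure in the range of a few million could be derived; the function names are hypothetical.

```python
GPU_HOURS = 2_664_000        # pre-training cost quoted in the text
NUM_GPUS = 2_000             # "about 2,000" H800 chips, per the text
ASSUMED_PRICE_USD = 2.0      # ASSUMPTION: rental price per GPU hour, not from this article


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days of training if every GPU runs continuously in parallel."""
    return gpu_hours / num_gpus / 24


def rental_cost_usd(gpu_hours: float, price_per_hour: float) -> float:
    """Total rental cost at a flat hourly price per GPU."""
    return gpu_hours * price_per_hour


if __name__ == "__main__":
    days = wall_clock_days(GPU_HOURS, NUM_GPUS)
    cost = rental_cost_usd(GPU_HOURS, ASSUMED_PRICE_USD)
    print(f"about {days:.1f} days of wall-clock training")
    print(f"about ${cost:,.0f} at the assumed hourly price")
```

With these inputs the result is about 55.5 days, which is consistent with the "less than two months" claim. The dollar amount covers pre-training only and depends entirely on the assumed hourly price.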
