Need to Step Up Your DeepSeek? You Will Want to Read This First


Author: Otto | Posted: 25-03-01 15:46

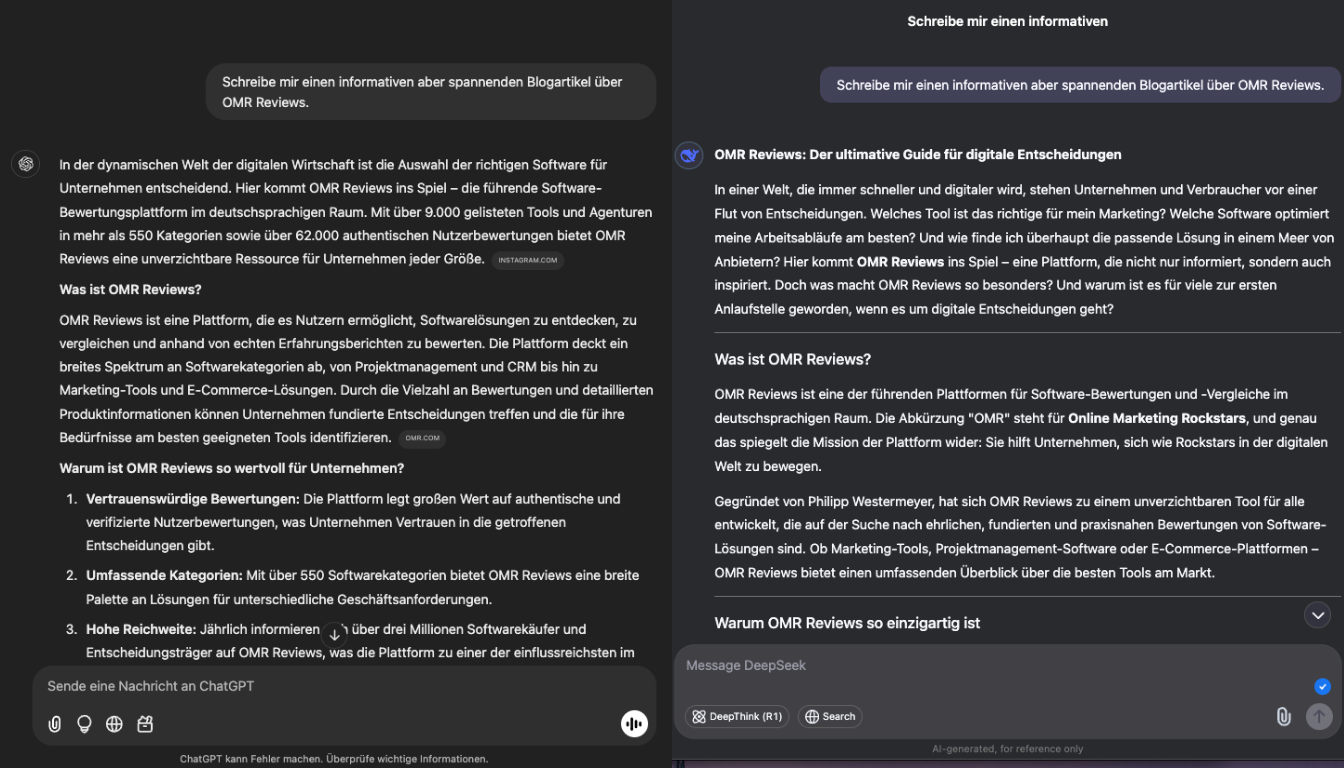

DeepSeek has been a hot topic at the end of 2024 and the start of 2025 because of two particular AI models. DeepSeek's models are "open weight", which offers less freedom for modification than true open-source software. Meanwhile, there already seems to be a new open-source AI model leader just days after the last one was claimed. This is cool. Against my private GPQA-like benchmark, DeepSeek-V2 is the best-performing open-source model I've tested (including the 405B variants). Cody is built on model interoperability, and we aim to provide access to the best and newest models; today we're making an update to the default models offered to Enterprise customers. Today you have plenty of great options for picking a model and starting to run it. Say you're on a MacBook: you can use MLX by Apple or llama.cpp, and the latter is also optimized for Apple silicon, which makes it an ideal choice. This would help determine how much improvement can be made, compared to pure RL and pure SFT, when RL is combined with SFT.

Handling long contexts: DeepSeek-Coder-V2 extends the context length from 16,000 to 128,000 tokens, allowing it to work on much larger and more complex tasks. We're going to need a lot of compute for a long time, and "be more efficient" won't always be the answer. You'll need around 4 GB free to run that one smoothly. I like to stay on the 'bleeding edge' of AI, but this one came faster than even I was ready for. Any researcher can download and inspect one of these open-source models and verify for themselves that it indeed requires less power to run than comparable models. Here is how to use Mem0 to add a memory layer to Large Language Models. Usage details can be found here. GPT-5 isn't even ready yet, and here are updates about GPT-6's setup. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine-tuning/training.
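To make the "around 4 GB" and 128K-context remarks concrete, here is a rough back-of-the-envelope estimator. It is only a sketch under stated assumptions: the post does not say which model "that one" is, so the parameter count and layer shape below are hypothetical, and the KV-cache formula assumes standard multi-head (or grouped-query) attention. DeepSeek-V2, and DeepSeek-Coder-V2 which builds on it, use Multi-head Latent Attention to compress the KV cache, so this formula would overstate their cache size.

```python
"""Back-of-the-envelope memory estimates for running an LLM locally.

Assumptions (illustrative, not taken from the post):
- weights dominate memory; runtime buffers and activations are ignored
- the KV cache follows standard multi-head / grouped-query attention,
  which does NOT describe DeepSeek-V2's Multi-head Latent Attention
"""

GB = 1e9  # decimal gigabytes


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory needed to hold the weights alone, in GB."""
    return num_params * bits_per_weight / 8 / GB


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                seq_len: int, bytes_per_elem: int = 2) -> float:
    """KV-cache size for one sequence: keys and values for every layer and token."""
    elems = 2 * n_layers * n_kv_heads * head_dim * seq_len  # factor 2 = K and V
    return elems * bytes_per_elem / GB


if __name__ == "__main__":
    # Hypothetical 7B-parameter model quantized to 4 bits per weight.
    print(f"weights @ 4-bit:  {weight_memory_gb(7e9, 4):.1f} GB")   # 3.5 GB
    print(f"weights @ 16-bit: {weight_memory_gb(7e9, 16):.1f} GB")  # 14.0 GB

    # Hypothetical layer shape; compare a 16K context with a 128K context.
    shape = dict(n_layers=32, n_kv_heads=8, head_dim=128)
    for ctx in (16_000, 128_000):
        print(f"KV cache @ {ctx:>7,} tokens: {kv_cache_gb(seq_len=ctx, **shape):.1f} GB")
```

The KV cache grows linearly with context length, so going from 16K to 128K tokens multiplies it by 8 for a fixed layer shape. Plugging in a real model's published parameter count and config gives a first-order estimate; actual usage will be somewhat higher because of runtime overhead.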

Evaluation results on the Needle In A Haystack (NIAH) tests. Best results are shown in bold. The findings are sensational. And then there were the commentators who are actually worth taking seriously, because they don't sound as deranged as Gebru. Agree. My customers (telco) are asking for smaller models, much more focused on specific use cases and distributed throughout the network on smaller devices. Super-large, expensive, generic models are not that useful for the enterprise, even for chats.

DeepSeek is a Chinese company specializing in artificial intelligence (AI) and natural language processing (NLP), offering advanced tools and models like DeepSeek-V3 for text generation, data analysis, and more. Here's another favorite of mine that I now use even more than OpenAI! 2025 will probably be great, so perhaps there will be even more radical changes in the AI/science/software engineering landscape.

Reinforcement Learning: The model uses a more sophisticated reinforcement learning approach, including Group Relative Policy Optimization (GRPO), which uses feedback from compilers and test cases, plus a learned reward model, to fine-tune the Coder. We pre-train DeepSeek-V3 on 14.8 trillion diverse and high-quality tokens, followed by Supervised Fine-Tuning and Reinforcement Learning stages to fully harness its capabilities. A general-use model that combines advanced analytics capabilities with a vast 13-billion-parameter count, enabling it to perform in-depth data analysis and support complex decision-making processes.
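The distinctive step in GRPO, as described in DeepSeek's papers, is that it drops the separate value (critic) model and instead scores each sampled completion relative to the other completions drawn for the same prompt. Below is a minimal sketch of that group-relative advantage, assuming a reward equal to the fraction of test cases passed. The helper `pass_rate_reward` and the reward values are hypothetical, and using the population standard deviation plus an `eps` guard is our assumption rather than DeepSeek's exact implementation. The full GRPO objective (clipped policy ratio plus a KL penalty) is not shown.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize each reward against the group sampled for the same prompt.

    A_i = (r_i - mean(r)) / (std(r) + eps)
    Completions better than the group average get a positive advantage,
    worse ones a negative advantage; no learned value function is needed.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std (an assumption)
    return [(r - mean) / (std + eps) for r in rewards]


def pass_rate_reward(passed: int, total: int) -> float:
    """Hypothetical reward: fraction of unit tests a generated program passes."""
    return passed / total if total else 0.0


if __name__ == "__main__":
    # Four hypothetical completions for one coding prompt, each run against 4 tests.
    results = [(4, 4), (0, 4), (2, 4), (4, 4)]
    rewards = [pass_rate_reward(p, t) for p, t in results]
    for r, a in zip(rewards, group_relative_advantages(rewards)):
        print(f"reward={r:.2f}  advantage={a:+.2f}")
```

If every completion in a group earns the same reward, all advantages are zero and that prompt contributes no learning signal. In a full training loop, each completion's advantage would weight the policy-gradient update for its tokens; the learned reward model mentioned above could replace or be combined with the test-case reward.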
