Dario Amodei - on DeepSeek and Export Controls

Author: Sonia Waterman | Posted: 25-03-05 12:32

DeepSeek is still having a "major incident" according to Isdown, with 52 users reporting incidents with it in the last half-hour. And while I (hello there, it's Jacob Krol again) still don't have access, TechRadar's Editor-at-Large, Lance Ulanoff, is now signed in and using DeepSeek AI on an iPhone, and he's started chatting… DeepSeek still appears to be experiencing severe issues. The comments came during the question section of Apple's 2025 first-quarter earnings call, when an analyst asked Cook about DeepSeek and Apple's view. It's also interesting to note that OpenAI's comments appear (possibly deliberately) vague on the kind(s) of IP right they intend to rely on in this dispute. It's a gambit here, like in chess; I think this is just the beginning. It's free, good at fetching the latest information, and a solid choice for users. Besides concerns for users directly using DeepSeek's AI models running on its own servers, presumably in China and governed by Chinese laws, what about the growing list of AI developers outside of China, including in the U.S., that have either directly taken on DeepSeek's service or hosted their own versions of the company's open source models? These models were a quantum leap forward, featuring a staggering 236 billion parameters.

Given the speed with which new AI large language models are being developed at the moment, it should be no surprise that there is already a new Chinese rival to DeepSeek. From my personal perspective, it would already be incredible to reach this level of generalization, and we are not there yet (see next point). We already see that trend with tool-calling models; however, if you have seen the recent Apple WWDC, you can imagine the usability of LLMs. Considering the security and privacy concerns around DeepSeek AI, Lance asked if it can see everything he types on his phone versus what is sent through the prompt box. Before Tim Cook commented today, OpenAI CEO Sam Altman, Meta's Mark Zuckerberg, and many others had commented, which you can read earlier in this live blog. It's Graham Barlow, Senior AI Editor at TechRadar, taking over the DeepSeek live blog. That is part of a published blog post on the news that DeepSeek R1 was landing on Azure AI Foundry and GitHub.

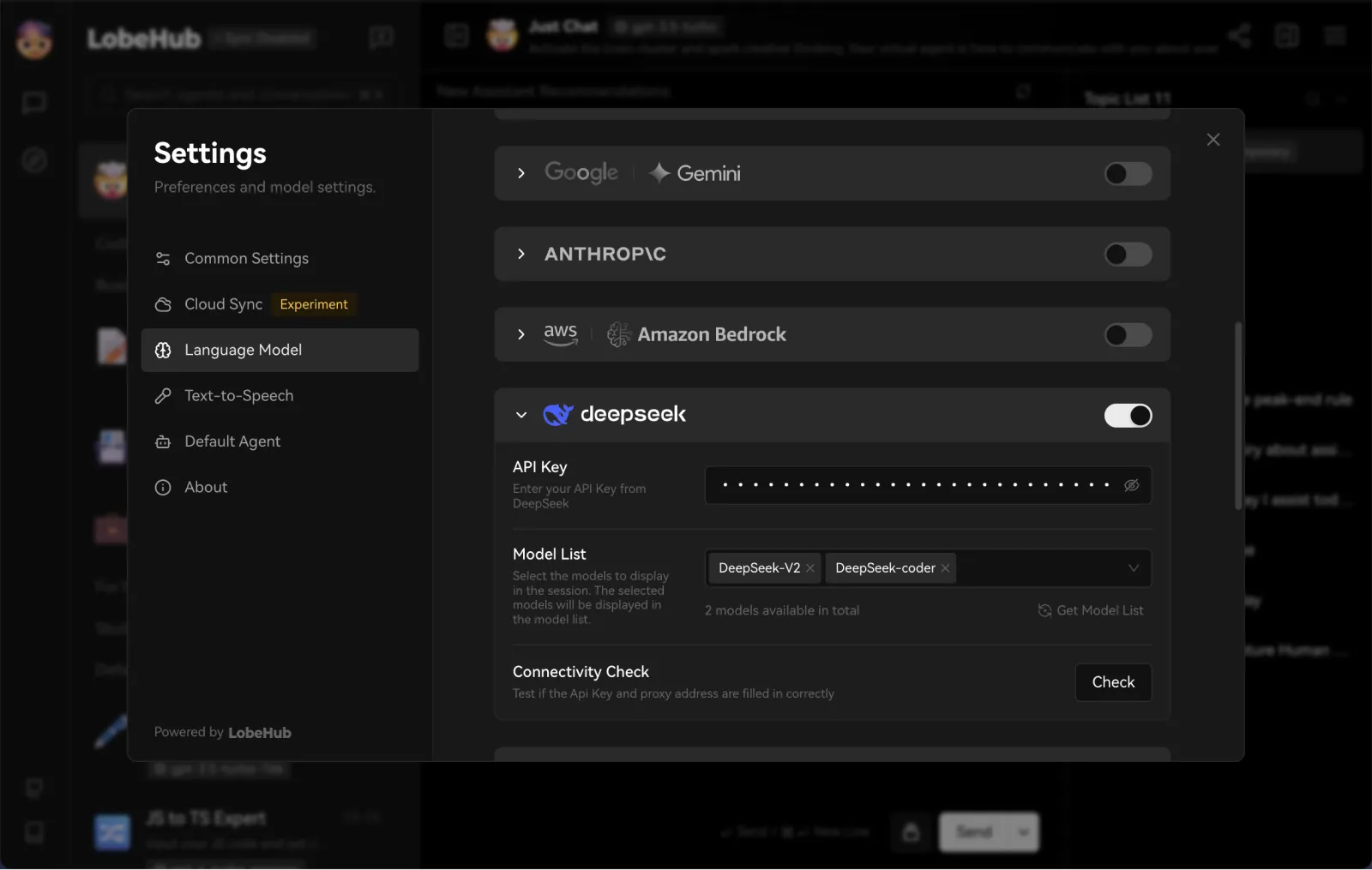

Microsoft is making some news alongside DeepSeek by rolling out the company's R1 model, which has taken the AI world by storm in the past few days, to the Azure AI Foundry platform and GitHub. As a nice little coda, I also had a chapter in Building God called "Being profitable". What is behind DeepSeek-Coder-V2 that makes it special enough to beat GPT4-Turbo, Claude-3-Opus, Gemini-1.5-Pro, Llama-3-70B and Codestral in coding and math? DeepSeek is a standout addition to the AI world, combining advanced language processing with specialized coding capabilities. Overall, demand for AI capabilities remains strong. To the extent that increasing the power and capabilities of AI depends on more compute, Nvidia stands to benefit! We're aware of and reviewing indications that DeepSeek may have inappropriately distilled our models, and will share information as we know more. On the factual benchmark Chinese SimpleQA, DeepSeek-V3 surpasses Qwen2.5-72B by 16.4 points, despite Qwen2.5 being trained on a larger corpus comprising 18T tokens, which is roughly 20% more than the 14.8T tokens that DeepSeek-V3 is pre-trained on.

What we knew from the announcement is that smaller versions of R1 would arrive on these PC types, and now we're learning a bit more. The first is DeepSeek-R1-Distill-Qwen-1.5B, which is out now in Microsoft's AI Toolkit for Developers. If we were using the pipeline to generate functions, we would first use an LLM (GPT-3.5-turbo) to identify individual functions from the file and extract them programmatically. You'll first need a Qualcomm Snapdragon X-powered machine; it will then roll out to Intel and AMD AI chipsets. Note again that x.x.x.x is the IP of the machine hosting the ollama docker container. TechRadar's Matt Hanson created a Windows 11 virtual machine to use DeepSeek AI within a sandbox. Our full guide, which includes step-by-step instructions for creating a Windows 11 virtual machine, can be found here. A pdf of the article is here. That's what we got our writer Eric Hal Schwartz to look at in a new article on our site that's just gone live. This has to be good news for everyone who hasn't got a DeepSeek account yet but would like to try it to find out what the fuss is all about. You can try Qwen2.5-Max yourself using the freely available Qwen Chatbot.
