DeepSeek AI News at a Glance

Author: Marta | Posted: 25-03-09 23:29

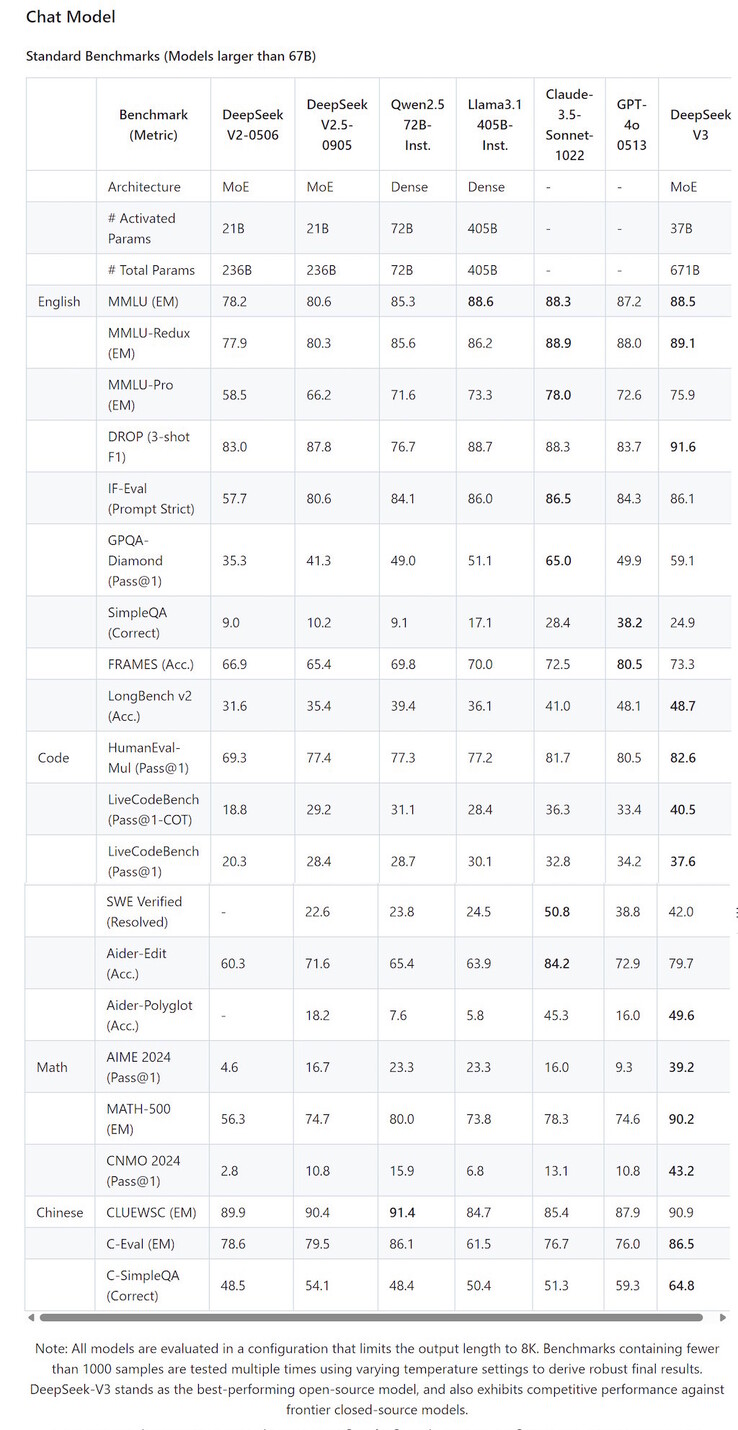

While other Chinese companies have released large-scale AI models, DeepSeek is one of the few that has successfully broken into the U.S. market. DeepSeek R1 isn’t the best AI on the market. Despite our promising earlier findings, our final results have led us to conclude that Binoculars isn’t a viable method for this task. Previously, we had used CodeLlama7B to calculate Binoculars scores, but hypothesised that using smaller models might improve performance. For example, R1 may use English in its reasoning and response, even when the prompt is in an entirely different language. Select the model you would like to use (such as Qwen 2.5 Plus, Max, or another option). Let’s explore some exciting ways Qwen 2.5 AI can improve your workflow and creativity. These distilled models serve as an interesting benchmark, showing how far pure supervised fine-tuning (SFT) can take a model without reinforcement learning. Chinese tech startup DeepSeek has come roaring into public view shortly after it released a version of its artificial intelligence service that is seemingly on par with U.S.-based competitors like ChatGPT, but required far less computing power to train.

This is especially clear with laptops: there are far too many models with too little to distinguish them and too many trivial minor differences. That being said, DeepSeek’s particular issues around privacy and censorship may make it a less appealing option than ChatGPT. One potential benefit is that distillation could reduce the number of advanced chips and data centres needed to train and improve AI models, but a potential drawback is the legal and ethical issues it creates, as it has been alleged that DeepSeek did it without permission. Qwen2.5-Max is not designed as a reasoning model like DeepSeek R1 or OpenAI’s o1. In recent LiveBench AI tests, this latest model surpassed OpenAI’s GPT-4o and DeepSeek-V3 on math problems, logical deduction, and problem-solving. In a live-streamed event on X on Monday that had been viewed more than six million times at the time of writing, Musk and three xAI engineers unveiled Grok 3, the startup’s newest AI model. Can the latest AI, DeepSeek V3, beat ChatGPT? These are authorised marketplaces where AI companies can purchase large datasets in a regulated environment. Therefore, it was very unlikely that the models had memorized the files contained in our datasets.

Additionally, in the case of longer files, the LLMs were unable to capture all of the functionality, so the resulting AI-written files were often filled with comments describing the omitted code. Due to the poor performance at longer token lengths, we produced a new version of the dataset for each token length, in which we only kept the functions whose token length was at least half of the target number of tokens. However, this difference becomes smaller at longer token lengths. However, its source code and any specifics about its underlying data are not available to the public. These are only two benchmarks, noteworthy as they may be, and only time and a lot of screwing around will tell just how well these results hold up as more people experiment with the model. The V3 model has an upgraded algorithmic architecture and delivers results on par with other large language models. This pipeline automated the process of generating AI-written code, allowing us to quickly and easily create the large datasets required for our research. With the source of the problem being in our dataset, the obvious solution was to revisit our code generation pipeline.
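
The per-token-length filtering described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the pipeline used in the research: `build_length_datasets` and `whitespace_token_count` are hypothetical names, and counting whitespace-separated tokens is only a stand-in for the scoring model's real tokenizer.

```python
from typing import Callable, Dict, List, Sequence


def whitespace_token_count(source: str) -> int:
    """Hypothetical stand-in tokenizer: counts whitespace-separated tokens.

    A real experiment would count tokens with the scoring model's own tokenizer.
    """
    return len(source.split())


def build_length_datasets(
    functions: Sequence[str],
    target_lengths: Sequence[int],
    count_tokens: Callable[[str], int] = whitespace_token_count,
) -> Dict[int, List[str]]:
    """Build one filtered dataset per target token length.

    For each target, a function is kept only if its token count is at least
    half of that target, so very short functions are excluded from the
    datasets used to study longer inputs.
    """
    datasets: Dict[int, List[str]] = {}
    for target in target_lengths:
        datasets[target] = [f for f in functions if count_tokens(f) >= target / 2]
    return datasets


if __name__ == "__main__":
    samples = [
        "def f(): pass",
        "def g(x):\n    return x + 1",
        "def h(a, b):\n    total = a + b\n    return total * 2",
    ]
    for target, kept in build_length_datasets(samples, [4, 8, 16]).items():
        print(target, len(kept))
```

With these three toy functions (3, 6 and 12 whitespace tokens), the 4-token dataset keeps all three, the 8-token dataset keeps two, and the 16-token dataset keeps only the longest. Passing the tokenizer in as `count_tokens` keeps the filtering rule separate from whichever tokenizer is actually used.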

In Executive Order 46, the Governor referred back to an earlier executive order in which he banned TikTok and other ByteDance-owned properties from being used on state-issued devices. AI engineers demonstrated how Grok 3 could be used to create code for an animated 3D plot of a spacecraft launch that started on Earth, landed on Mars, and came back to Earth. Because it showed better performance in our initial research, we started using DeepSeek as our Binoculars model. With our datasets assembled, we used Binoculars to calculate the scores for both the human-written and AI-written code. The original Binoculars paper identified that the number of tokens in the input affected detection performance, so we investigated whether the same applied to code. They offer an API to use their new LPUs with a number of open-source LLMs (including Llama 3 8B and 70B) on their GroqCloud platform. Qwen AI is quickly becoming the go-to solution for developers, and it’s very easy to learn how to use Qwen 2.5 Max.
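
As a rough illustration of what a Binoculars score measures, the sketch below follows the general idea from the original Binoculars paper: the log-perplexity of a text under one model, divided by a cross-perplexity term describing how surprising one model’s next-token predictions are to the other. It is a simplified sketch under assumptions: it works on small hand-written probability distributions instead of real model outputs, the function names are hypothetical, and the assignment of the "observer" and "performer" roles reflects one common reading of the method rather than anything stated in this post. Lower scores suggest text that is more likely to be machine-generated.

```python
import math
from typing import Sequence

# One model's predicted probability distribution over the vocabulary at a
# single position in the text. Assumption: every probability is > 0,
# otherwise math.log raises a ValueError.
Distribution = Sequence[float]


def log_perplexity(probs: Sequence[Distribution], tokens: Sequence[int]) -> float:
    """Mean negative log-probability that a model assigns to the actual tokens."""
    return -sum(math.log(dist[tok]) for dist, tok in zip(probs, tokens)) / len(tokens)


def log_cross_perplexity(
    observer_probs: Sequence[Distribution],
    performer_probs: Sequence[Distribution],
) -> float:
    """Mean cross-entropy between the two models' predictions.

    At each position this is -sum_j observer[j] * log(performer[j]): the
    expected surprise, under the performer, of tokens drawn from the
    observer's distribution. The result is averaged over positions.
    """
    total = 0.0
    for obs, perf in zip(observer_probs, performer_probs):
        total -= sum(o * math.log(p) for o, p in zip(obs, perf))
    return total / len(observer_probs)


def binoculars_score(
    observer_probs: Sequence[Distribution],
    performer_probs: Sequence[Distribution],
    tokens: Sequence[int],
) -> float:
    """Ratio of log-perplexity to log-cross-perplexity (lower = more machine-like)."""
    return log_perplexity(performer_probs, tokens) / log_cross_perplexity(
        observer_probs, performer_probs
    )
```

For example, if both toy models predict `[0.9, 0.1]` at two positions, the predictable token sequence `[0, 0]` scores about 0.32, while the surprising sequence `[1, 1]` scores about 7.1. Normalising by the cross-perplexity is what distinguishes this from a plain perplexity threshold: it accounts for how predictable the text is expected to be in the first place.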
