Addmeto (Addmeto) @ Tele.ga

Page information

Author: Annette Rentoul | Posted: 25-03-10 04:21 | Views: 6 | Comments: 1

How much did DeepSeek R1 stockpile, smuggle, or innovate its way around U.S. The best way to keep up has been r/LocalLLaMa. DeepSeek, however, just demonstrated that another route is available: heavy optimization can produce remarkable results on weaker hardware and with lower memory bandwidth; simply paying Nvidia more isn't the only way to make better models. US stocks dropped sharply Monday, and chipmaker Nvidia lost nearly $600 billion in market value, after a surprise advancement from a Chinese artificial intelligence company, DeepSeek, threatened the aura of invincibility surrounding America's technology industry. DeepSeek, yet to reach that level, has a promising road ahead in the field of AI writing assistance, particularly for multilingual and technical content. As the field of code intelligence continues to evolve, papers like this one will play an important role in shaping the future of AI-powered tools for developers and researchers. 2 or later vits, but by the time I saw tortoise-tts also succeed with diffusion I realized "okay this field is solved now too."

The goal is to update an LLM so that it can solve these programming tasks without being given the documentation for the API changes at inference time. The benchmark involves synthetic API function updates paired with programming tasks that require using the updated functionality, challenging the model to reason about the semantic changes rather than just reproducing syntax. This paper presents a new benchmark called CodeUpdateArena to evaluate how well large language models (LLMs) can update their knowledge about evolving code APIs, a critical limitation of current approaches. However, the paper acknowledges some potential limitations of the benchmark. Furthermore, existing knowledge editing techniques also have substantial room for improvement on this benchmark. Further research is also needed to develop more effective methods for enabling LLMs to update their knowledge about code APIs. Last week, research firm Wiz found that an internal DeepSeek database was publicly accessible "within minutes" of conducting a security check.
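As a rough illustration of the kind of task described above, the toy sketch below pairs a synthetic API update with a programming task that can only be solved by using the updated behavior. Every name here (`top_k_old`, `top_k_new`, `UpdateTask`, `passes`) is hypothetical and chosen for illustration; none of it is taken from the CodeUpdateArena paper or its dataset.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


def top_k_old(values, k):
    """Hypothetical original API: return the k smallest values, ascending."""
    return sorted(values)[:k]


def top_k_new(values, k, largest=False):
    """Hypothetical updated API: a new `largest` flag changes the semantics."""
    return sorted(values, reverse=largest)[:k]


@dataclass
class UpdateTask:
    """A programming task tied to an API update (illustrative structure)."""
    description: str
    tests: List[Tuple[tuple, object]]  # (arguments, expected result)


def passes(solution: Callable, api: Callable, task: UpdateTask) -> bool:
    """Run a candidate solution against the task's tests using the given API."""
    return all(solution(api, *args) == expected for args, expected in task.tests)


task = UpdateTask(
    description="Return the three highest scores in descending order.",
    tests=[
        (([5, 1, 9, 7, 3],), [9, 7, 5]),
        (([2, 2, 8],), [8, 2, 2]),
    ],
)


def solution_uses_update(api, scores):
    # Relies on the semantic change introduced by the update.
    return api(scores, 3, largest=True)


def solution_stale(api, scores):
    # Reproduces the pre-update usage pattern, so it misses the new behavior.
    return api(scores, 3)


if __name__ == "__main__":
    print("updated usage passes:", passes(solution_uses_update, top_k_new, task))
    print("stale usage passes:", passes(solution_stale, top_k_new, task))
```

In this sketch, a model whose knowledge was successfully updated would be expected to write something like `solution_uses_update` without seeing the new docstring at inference time, while a model relying on stale knowledge would tend to produce `solution_stale`.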

After DeepSeek's app rocketed to the top of Apple's App Store this week, the Chinese AI lab became the talk of the tech industry. What the new Chinese AI product means, and what it doesn't. COVID created a collective trauma that many Chinese are still processing. As the demand for advanced large language models (LLMs) grows, so do the challenges associated with their deployment. The CodeUpdateArena benchmark represents an important step forward in assessing the capabilities of LLMs in the code generation domain, and the insights from this research can help drive the development of more robust and adaptable models that can keep pace with the rapidly evolving software landscape. Overall, the benchmark is an important contribution to the ongoing efforts to improve the code generation capabilities of large language models and make them more robust to the evolving nature of software development.

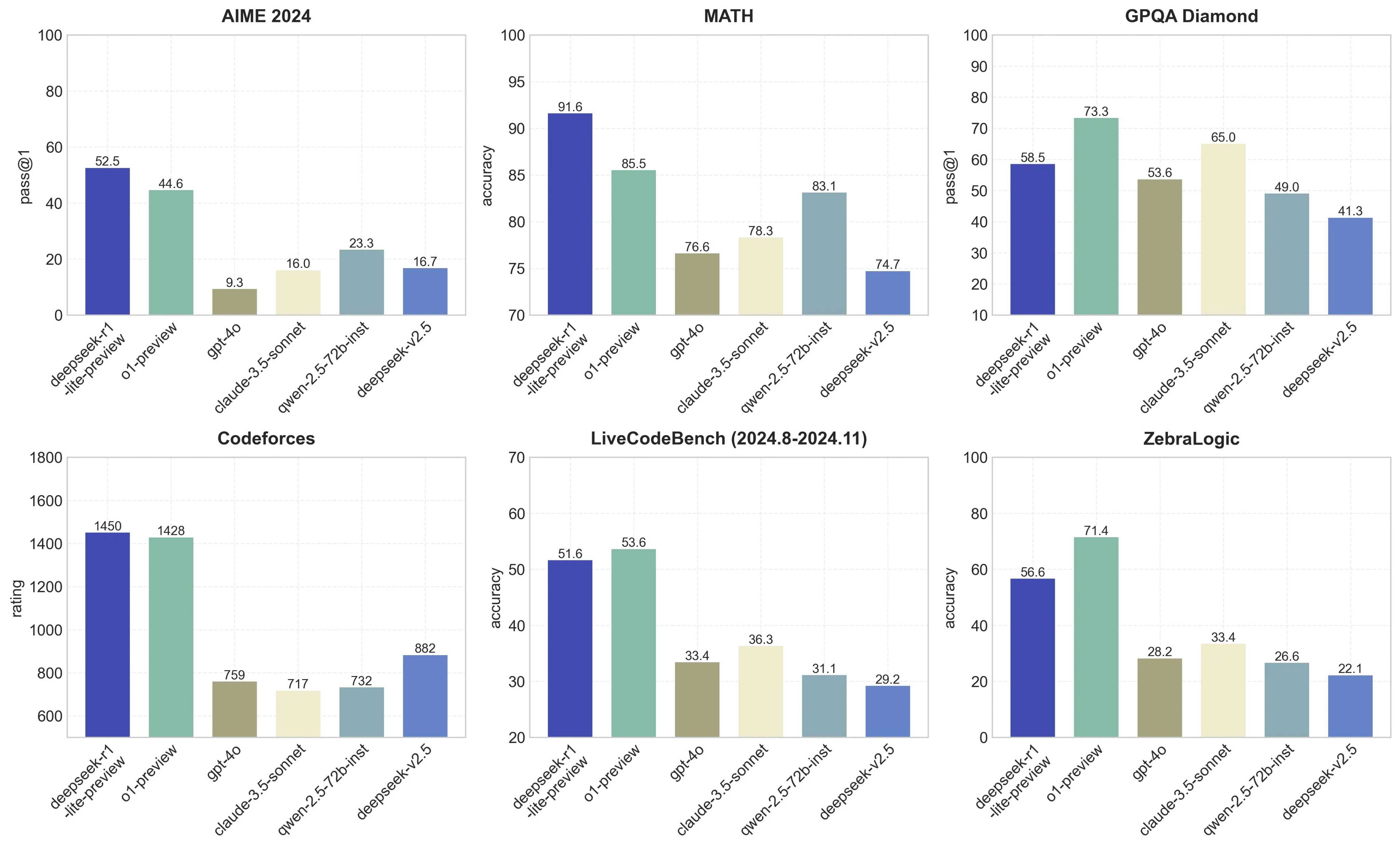

This paper examines how large language models (LLMs) can be used to generate and reason about code, but notes that the static nature of these models' knowledge does not reflect the fact that code libraries and APIs are continuously evolving. This is a Plain English Papers summary of a research paper called CodeUpdateArena: Benchmarking Knowledge Editing on API Updates. The paper presents a new benchmark called CodeUpdateArena to test how well LLMs can update their knowledge to handle changes in code APIs. By improving code understanding, generation, and editing capabilities, the researchers have pushed the boundaries of what large language models can achieve in the realm of programming and mathematical reasoning. The CodeUpdateArena benchmark represents an important step forward in evaluating the capabilities of large language models (LLMs) to handle evolving code APIs, a critical limitation of current approaches. LiveCodeBench: Holistic and contamination free evaluation of large language models for code.
