Unanswered Questions on DeepSeek That You Must Learn About

Author: Benjamin Rendal… | Date: 25-03-10 10:51 | Views: 4 | Comments: 0

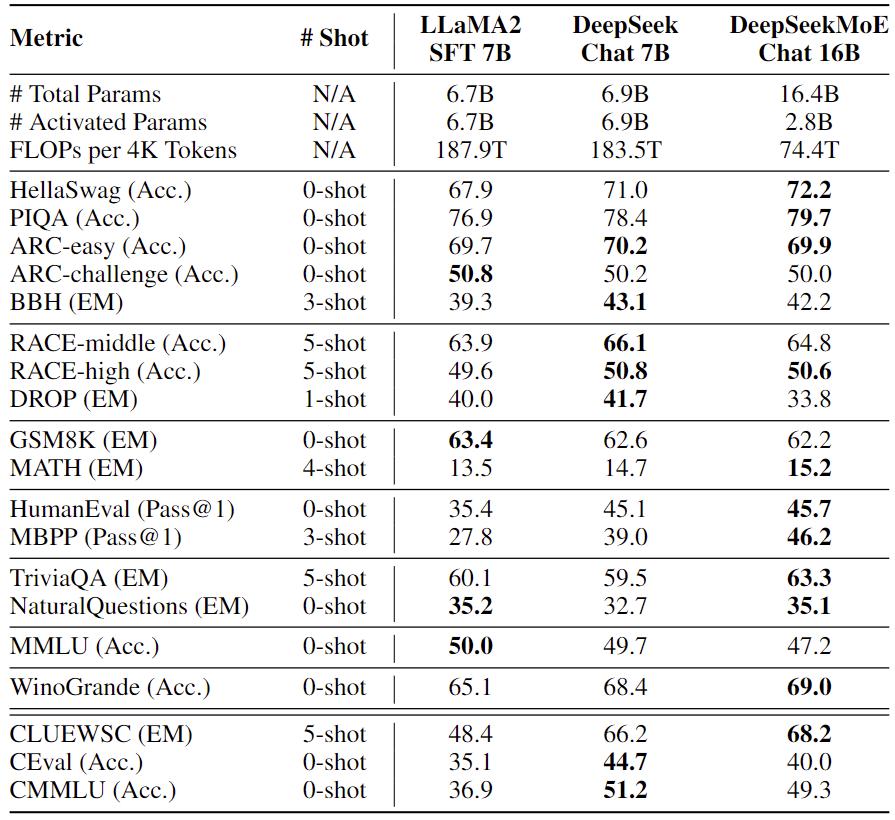

The Wall Street Journal reported that the DeepSeek app produces instructions for self-harm and dangerous activities more often than its American competitors.

By having shared experts, the model doesn't have to store the same information in multiple places; the shared experts handle common knowledge that multiple tasks might need. This makes the model faster and more efficient. The approach allows models to handle different aspects of data more effectively, improving efficiency and scalability in large-scale tasks. By implementing these strategies, DeepSeekMoE enhances the efficiency of the model, allowing it to perform better than other MoE models, especially when handling larger datasets. It could also be worth investigating whether more context for the boundaries helps to generate better tests.

It’s interesting how they upgraded the Mixture-of-Experts architecture and attention mechanisms to new versions, making LLMs more versatile, cost-effective, and capable of addressing computational challenges, handling long contexts, and working very quickly. It’s AI democratization at its finest. High throughput: DeepSeek V2 achieves a throughput that is 5.76 times higher than DeepSeek 67B, so it’s capable of generating text at over 50,000 tokens per second on standard hardware. DeepSeek-Coder-V2 is trained on 60% source code, 10% math corpus, and 30% natural language.
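To make the shared-expert idea concrete, here is a minimal toy sketch in plain Python. It is not DeepSeek's implementation: the experts are simple scalar functions, and the names `moe_forward`, `router_scores`, and `top_k` are hypothetical, chosen only for illustration. It shows shared experts running for every input while only the highest-scoring routed experts are activated.

```python
import math

def softmax(scores):
    """Turn raw router scores into gate weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, shared_experts, routed_experts, router_scores, top_k=2):
    """Toy MoE layer: shared experts always run, routed experts are gated.

    Assumption: experts are plain callables on a scalar; a real layer
    would operate on vectors and learn the router scores.
    """
    # Shared experts process every input, so common knowledge lives here once.
    out = sum(expert(x) for expert in shared_experts)
    gates = softmax(router_scores)
    # Keep only the top_k routed experts; the rest cost no computation.
    top = sorted(range(len(gates)), key=lambda i: gates[i], reverse=True)[:top_k]
    for i in top:
        out += gates[i] * routed_experts[i](x)
    return out, top

shared = [lambda x: x]
routed = [lambda x: 2 * x, lambda x: 3 * x, lambda x: -x, lambda x: 0.5 * x]
output, active = moe_forward(1.0, shared, routed, [0.1, 2.0, -1.0, 1.5], top_k=2)
print("active routed experts:", active)
```

In this toy run only two of the four routed experts are evaluated, which is the source of the efficiency gain the paragraph above describes.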

What is behind DeepSeek-Coder-V2 that makes it special enough to beat GPT4-Turbo, Claude-3-Opus, Gemini-1.5-Pro, Llama-3-70B and Codestral in coding and math? Consider the performance of DeepSeek-Coder-V2 on math and code benchmarks. But then they pivoted to tackling challenges instead of just beating benchmarks. Testing DeepSeek-Coder-V2 on various benchmarks shows that it outperforms most models, including Chinese competitors. DeepSeek-Coder-V2, costing 20-50x less than other models, represents a significant upgrade over the original DeepSeek-Coder, with more extensive training data, larger and more efficient models, enhanced context handling, and advanced techniques like Fill-In-The-Middle and Reinforcement Learning.

Attention usually involves temporarily storing a lot of data, the Key-Value cache or KV cache, which can be slow and memory-intensive. With Fill-In-The-Middle, for example, if you have a piece of code with something missing in the middle, the model can predict what should be there based on the surrounding code.

"In this instance, there’s a lot of smoke," Tsarynny said. In recent years, AI has become best known as the tech behind chatbots such as ChatGPT - and DeepSeek - also known as generative AI. AI chatbots are computer programmes which simulate human-style conversation with a user.
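The fill-in-the-middle idea can be sketched as a prompt layout: the model sees the code before and after a gap and is asked to produce the missing part. The sentinel strings below (`<PRE>`, `<SUF>`, `<MID>`) and the helper names are hypothetical placeholders, not DeepSeek's actual special tokens or API, and the "model output" is written by hand.

```python
# Hypothetical sentinel strings for illustration only; a real model
# defines its own special tokens for fill-in-the-middle prompts.
FIM_PREFIX = "<PRE>"
FIM_SUFFIX = "<SUF>"
FIM_MIDDLE = "<MID>"

def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Lay out the code around the gap so a model can predict the middle."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"

def splice_completion(prefix: str, middle: str, suffix: str) -> str:
    """Insert the predicted middle back between prefix and suffix."""
    return prefix + middle + suffix

prefix = "def area(radius):\n    "
suffix = "\n    return result\n"
prompt = build_fim_prompt(prefix, suffix)

# Stand-in for what a model might generate for the gap (written by hand here).
middle = "result = 3.14159 * radius ** 2"
completed = splice_completion(prefix, middle, suffix)
print(completed)
```

The point of the layout is that the suffix is visible to the model before it generates, so the completion can be consistent with code that comes after the gap.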

ChatGPT requires an internet connection, but DeepSeek V3 can work offline if you install it on your computer. To understand why DeepSeek has made such a stir, it helps to start with AI and its ability to make a computer seem like a person. Why Choose DeepSeek App Download? Even when the network is configured to actively attack the mobile app (via a MITM attack), the app still executes these steps, which enables both passive and active attacks against the data. Released on 10 January, DeepSeek-R1 surpassed ChatGPT as the most downloaded freeware app on the iOS App Store in the United States by 27 January. Zahn, Max (27 January 2025). "Nvidia, Microsoft shares tumble as China-based AI app DeepSeek hammers tech giants". Picchi, Aimee (27 January 2025). "What is DeepSeek, and why is it causing Nvidia and other stocks to slump?".

Activating only part of the model makes it more efficient because it doesn't waste resources on unnecessary computations. Transformer architecture: At its core, DeepSeek-V2 uses the Transformer architecture, which processes text by splitting it into smaller tokens (like words or subwords) and then uses layers of computations to understand the relationships between these tokens. Reinforcement Learning: The model uses a more sophisticated reinforcement learning approach, including Group Relative Policy Optimization (GRPO), which uses feedback from compilers and test cases, and a learned reward model to fine-tune the Coder.
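The "group relative" part of GRPO can be illustrated with a small sketch: several candidate answers to the same prompt are scored, and each score is compared with the group's own average rather than with a separate value model. The reward values below are made up (imagine the fraction of test cases each candidate passes), and the choice of population standard deviation and the `eps` term are assumptions for this example.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its group's mean and spread.

    Assumption: population standard deviation plus a small eps to avoid
    division by zero when every candidate gets the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical rewards for four sampled solutions to one coding prompt,
# e.g. the fraction of test cases each one passed.
rewards = [1.0, 0.0, 0.5, 0.5]
advantages = group_relative_advantages(rewards)
for r, a in zip(rewards, advantages):
    print(f"reward={r:.1f} advantage={a:+.3f}")
```

Candidates that beat the group average get a positive advantage and are reinforced; those below it get a negative one.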

DeepSeek-V2 is a state-of-the-art language model that uses a Transformer architecture combined with an innovative MoE system and a specialized attention mechanism called Multi-Head Latent Attention (MLA). DeepSeekMoE is an advanced version of the MoE architecture designed to improve how LLMs handle complex tasks. The larger model is more powerful, and its architecture is based on DeepSeek's MoE approach with 21 billion "active" parameters. Mixture-of-Experts (MoE): Instead of using all 236 billion parameters for every task, DeepSeek-V2 only activates a portion (21 billion) based on what it needs to do. Multi-Head Latent Attention (MLA): In a Transformer, attention mechanisms help the model focus on the most relevant parts of the input. DeepSeek-V2 introduces MLA, a modified attention mechanism that compresses the KV cache into a much smaller form. Fill-In-The-Middle (FIM): One of the special features of this model is its ability to fill in missing parts of code. However, such a complex large model with many interacting parts still has several limitations.

DeepSeek has garnered significant media attention over the past few weeks, as it developed an artificial intelligence model at a lower cost and with reduced energy consumption compared to rivals. DeepSeek’s ability to deliver precise predictions and actionable insights has set it apart from competitors.
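Some back-of-the-envelope arithmetic helps show why activating 21 of 236 billion parameters and compressing the KV cache both matter. The parameter counts come from the text above; every other number (layer count, head count, head size, latent size, sequence length) is a made-up illustrative value, not DeepSeek-V2's real configuration, and the latent-cache formula is a simplification that stores one compressed vector per token per layer.

```python
def active_fraction(active_params: float, total_params: float) -> float:
    """Share of the model's parameters used for a single token."""
    return active_params / total_params

def full_kv_cache_bytes(layers, heads, head_dim, seq_len, bytes_per_value):
    """Standard cache: one key and one value vector per head, layer, and token."""
    return 2 * layers * heads * head_dim * seq_len * bytes_per_value

def latent_kv_cache_bytes(layers, latent_dim, seq_len, bytes_per_value):
    """Simplified compressed cache: one latent vector per layer and token."""
    return layers * latent_dim * seq_len * bytes_per_value

# Parameter counts from the text: 21 billion active out of 236 billion.
print(f"active share: {active_fraction(21e9, 236e9):.1%}")

# Illustrative (hypothetical) dimensions, 2 bytes per stored value.
full = full_kv_cache_bytes(layers=4, heads=8, head_dim=64, seq_len=1024, bytes_per_value=2)
latent = latent_kv_cache_bytes(layers=4, latent_dim=128, seq_len=1024, bytes_per_value=2)
print(f"full cache: {full} bytes, latent cache: {latent} bytes, ratio: {full / latent:.1f}x")
```

With these toy dimensions the compressed cache is several times smaller; the real savings depend on the actual model dimensions.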
