Beware: 10 DeepSeek AI Mistakes

Posted by Mavis on 25-03-16 11:15 | 6 views | 2 comments

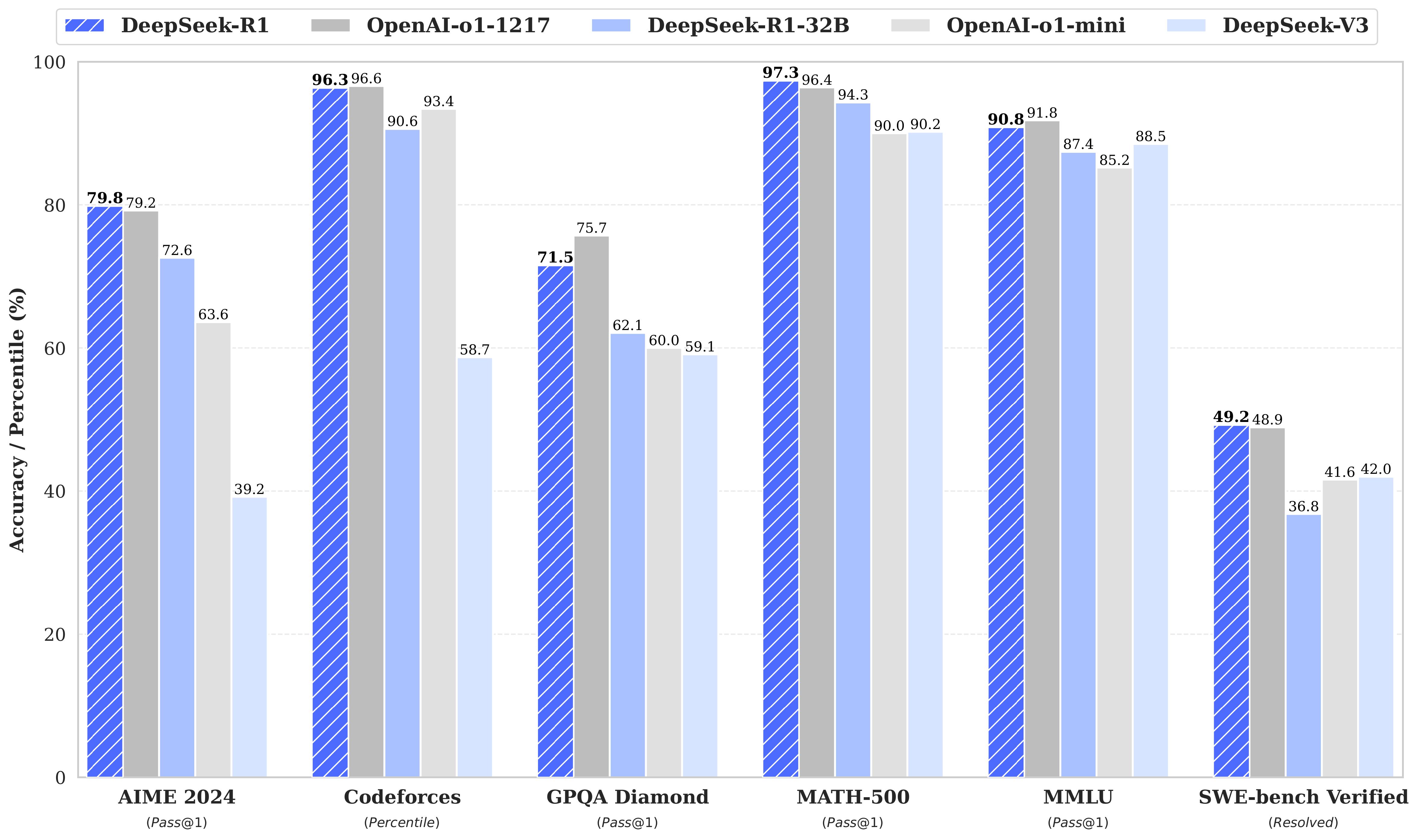

OK, so aside from the clear implication that DeepSeek is plotting to take over the world, one emoji at a time, its response was actually fairly humorous, and a little bit sarcastic. He can talk your ear off about the game, and we would strongly advise you to steer clear of the topic unless you too are a CS junkie. On this episode of The Vergecast, we talk about all these angles and some more, because DeepSeek is the story of the moment on so many levels. Unlike ChatGPT, which aims to be a jack-of-all-trades, DeepSeek is hyper-focused on precision and efficiency, particularly in coding, math, and logic-based tasks. ChatGPT: ChatGPT, on the other hand, brings a conversational and versatile touch to writing. I've found it particularly useful when you have a creative writing task at hand. Well, ChatGPT is designed to be a general-purpose AI, which means it can help you with almost anything, from casual chit-chat and brainstorming ideas to writing essays, summarizing documents, and even coding. They claimed Grok 3 had higher scores on math, science, and coding benchmark tests than OpenAI's GPT-4o, DeepSeek's V3, and Google's Gemini AI.

When I did a detailed DeepSeek review, I threw some complex coding problems its way, and it handled them with surprisingly impressive precision. AI chatbots have come a long way, and the battle for the best assistant keeps getting hotter. If you’re asking who would "win" in a battle of wits, it’s a tie: we’re both here to help you, just in slightly different ways! Is DeepSeek a win for Apple? DeepSeek: DeepSeek excels at churning out full drafts at lightning speed. DeepSeek: DeepSeek is a beast when it comes to generating full drafts quickly. ChatGPT: Excellent at generating creative, engaging, and natural-sounding text. Previous MathScholar article on ChatGPT: Here. ChatGPT: This is where ChatGPT really shines. Phones Monday. Based on the company's V3 model, it's the first Chinese AI chatbot that impressed Silicon Valley, performing on par with or better than OpenAI's ChatGPT. As other US companies like Meta panic over the swift takeover by this Chinese model that took less than $10 million to develop, Microsoft is taking another approach by teaming up with the enemy, bringing the DeepSeek-R1 model to its own Copilot PCs. I gave DeepSeek a prompt to ‘write 5 stories about a man, a fox, and an elephant in 100 words.’ The first two outputs were good, but then the model started to repeat itself.

Using the same prompt, ChatGPT gave far better storylines and ideas (it almost kept me on edge in some of them). In fact, I asked ChatGPT the same question and the exchange was rather more bland. It provides more detailed and uncensored information, making it suitable for users seeking unbiased and straightforward responses. This transparency offers a big advantage, especially for academic communities and technical teams. While ChatGPT has dominated the space for a while, DeepSeek has entered the scene with a promise of precision, efficiency, and technical prowess. DeepSeek: When it comes to technical tasks, DeepSeek has the edge. I nearly forgot Copilot existed, because it takes an inordinate amount of time to load on my laptop, but for science I had to ask. The BBC takes a look at how the app works. Is the DeepSeek app free? DeepSeek AI: Free and open-source, with lower operational costs. The joke's on us: you can actually buy a Robot Army from Amazon Prime, with free one-day delivery of course. You can follow Jen on Twitter @Jenbox360 for more Diablo fangirling and general moaning about British weather. Its response managed to be even more boring than ChatGPT's, and it wouldn't even argue with me.

The success of DeepSeek, Mordy explains, was in focusing on inference efficiency, a software process that improves AI models’ ability to generate responses based on existing data, rather than on the sheer computational power required to process vast quantities of new data for a response. He asserted that the model’s ability to reason from first principles helps it deliver better results, not just in training but in practical, real-world applications as well. So, our team put both models to the test in real-world scenarios, and let’s just say the differences are bigger than you’d think. Team up? Did DeepSeek just threaten me? Let’s just say we’d probably team up to take on a bigger challenge instead! DeepSeek startled everyone last month with the claim that its AI model uses roughly one-tenth the amount of computing power of Meta’s Llama 3.1 model, upending an entire worldview of how much energy and resources it’ll take to develop artificial intelligence.
