DeepSeek-Prover Uses Synthetic Data to Boost Theorem Proving in LLMs


Author: Kaylee · Posted: 25-03-18 04:45

DeepSeek offers capabilities similar to ChatGPT, although their performance, accuracy, and efficiency may differ. While both are AI-based, DeepSeek and ChatGPT serve different purposes and were developed with different capabilities. In a mixture-of-experts model, routing can collapse onto a few favored experts. This means these experts will get almost all of the gradient signal during updates and become better while other experts lag behind, and so the other experts will continue not being picked, producing a positive feedback loop that results in other experts never getting chosen or trained. These bias terms are not updated through gradient descent but are instead adjusted throughout training to ensure load balance: if a particular expert is not getting as many hits as we think it should, then we can slightly bump up its bias term by a fixed small amount every gradient step until it does. This allowed me to understand how these models are FIM-trained, at least enough to put that training to use. As we would in a vanilla Transformer, we use the final residual stream vector to generate next-token probabilities by unembedding and softmax. However, unlike in a vanilla Transformer, we also feed this vector into a subsequent Transformer block, and we use the output of that block to make predictions about the second next token.
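The bias adjustment described above can be sketched as a toy simulation. This is a minimal illustration, not DeepSeek's implementation: the function names, the random affinities, the artificial skew toward expert 0, and all sizes are assumptions made up for the example. It follows only the rule stated in the text, bumping the bias of any expert that gets fewer tokens than the mean load by a fixed small amount after each step.

```python
import random


def route_top_k(affinities, biases, k):
    """Pick the k experts with the highest (affinity + bias) score."""
    scores = [a + b for a, b in zip(affinities, biases)]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def bump_underloaded(biases, counts, step_size):
    """Raise the bias of every expert that received fewer tokens than the mean load."""
    target = sum(counts) / len(counts)
    return [b + step_size if c < target else b for b, c in zip(biases, counts)]


def simulate(num_experts=4, k=1, tokens_per_step=200, steps=300,
             step_size=0.01, seed=0):
    rng = random.Random(seed)
    # Hypothetical skew: expert 0 always gets a higher affinity, so without
    # any correction it would win most of the routing decisions.
    skew = [0.5] + [0.0] * (num_experts - 1)
    biases = [0.0] * num_experts
    history = []
    for _ in range(steps):
        counts = [0] * num_experts
        for _ in range(tokens_per_step):
            affinities = [rng.random() + s for s in skew]
            for expert in route_top_k(affinities, biases, k):
                counts[expert] += 1
        history.append(counts)
        # The biases are adjusted by a fixed step, not by gradient descent.
        biases = bump_underloaded(biases, counts, step_size)
    return history, biases


history, biases = simulate()
print("loads at first step:", history[0])
print("loads at last step: ", history[-1])
print("final biases:       ", [round(b, 2) for b in biases])
```

In this toy setup expert 0 takes most of the tokens at the start, and the loads even out once the other experts' biases have grown enough to offset the skew. Only the differences between biases matter for routing, so the fact that every bias drifts upward over time is harmless here.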


Is DeepSeek safe to use? Unlike OpenAI's models, some of which are available only to paying subscribers, DeepSeek R1 is free and accessible to everyone, making it a game-changer in the AI landscape. As the business model behind traditional journalism has broken down, most credible news is trapped behind paywalls, making it inaccessible to large swaths of society that can't afford the access. To see why, consider that any large language model likely has a small amount of information that it uses a lot, while it has a lot of knowledge that it uses rather infrequently. Management uses digital-surveillance tools, including location-tracking systems, to measure employee productivity. DeepSeek also uses less memory than its rivals, ultimately reducing the cost to perform tasks for users. AGI will allow smart machines to bridge the gap between rote tasks and novel ones in which things are messy and often unpredictable. DeepSeek v3 does so by combining several different innovations, each of which I'll discuss in turn.


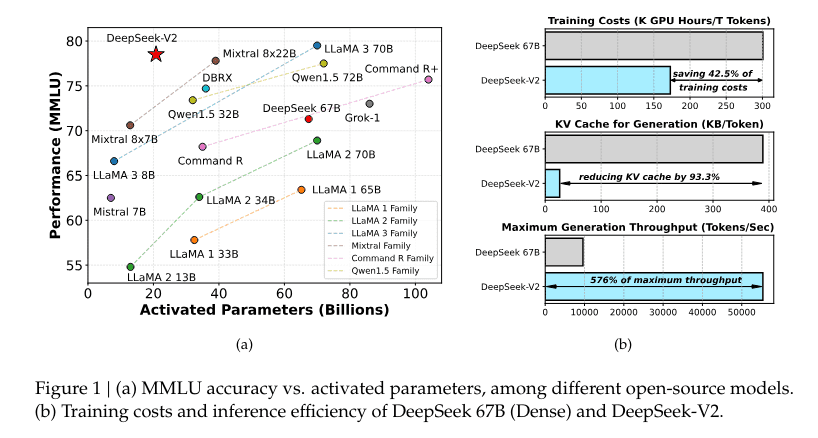

Figure 1: The DeepSeek v3 architecture with its two most important improvements: DeepSeekMoE and multi-head latent attention (MLA).

Figure 2: An illustration of multi-head latent attention from the DeepSeek v2 technical report.

Exploiting the fact that different heads need access to the same information is essential to the mechanism of multi-head latent attention. Their alternative is to add expert-specific bias terms to the routing mechanism, which get added to the expert affinities. These models divide the feedforward blocks of a Transformer into multiple distinct experts and add a routing mechanism which sends each token to a small number of these experts in a context-dependent manner. DeepSeek's method essentially forces this matrix to be low rank: they pick a latent dimension and express it as the product of two matrices, one with dimensions latent times model and another with dimensions (number of heads · ... We can then shrink the size of the KV cache by making the latent dimension smaller. The private dataset is relatively small at only 100 tasks, opening up the risk of probing for information by making frequent submissions. It also provides a reproducible recipe for creating training pipelines that bootstrap themselves by starting with a small seed of samples and producing higher-quality training examples as the models become more capable.
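To make the low-rank idea concrete, here is a small sketch of caching a shared latent vector instead of full keys and values. It rests on several assumptions that are not in the text: all sizes are hypothetical, `head_dim` and the shape of the up-projection matrices are my own choice (the source truncates the second matrix's dimensions), separate up-projections are used for keys and values, and positional-encoding details are ignored entirely.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    """Random Gaussian matrix standing in for learned weights."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def cached_floats_per_token(n_heads, head_dim, latent_dim=None):
    """Numbers stored per token per layer: full keys and values, or only the latent."""
    if latent_dim is None:
        return 2 * n_heads * head_dim
    return latent_dim


rng = random.Random(0)
d_model, n_heads, head_dim, latent_dim = 16, 4, 4, 3  # hypothetical sizes

# Down-projection with dimensions latent x model, as described in the text.
w_down = random_matrix(latent_dim, d_model, rng)
# Assumed up-projections that expand the latent into every head's keys and values.
w_up_k = random_matrix(n_heads * head_dim, latent_dim, rng)
w_up_v = random_matrix(n_heads * head_dim, latent_dim, rng)

x = [rng.gauss(0.0, 1.0) for _ in range(d_model)]  # one token's residual vector
latent = matvec(w_down, x)       # the only per-token state that needs caching
keys = matvec(w_up_k, latent)    # recomputed from the latent when needed
values = matvec(w_up_v, latent)

print(cached_floats_per_token(n_heads, head_dim))              # 32
print(cached_floats_per_token(n_heads, head_dim, latent_dim))  # 3
```

Because the keys and values are products of two thin matrices applied to `x`, the combined projection has rank at most `latent_dim`, and shrinking `latent_dim` shrinks the cache in direct proportion.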

UK small and medium enterprises selling on Amazon recorded over £3.8 billion in export sales in 2023, and there are currently around 100,000 SMEs selling on Amazon in the UK. Over the past five years, she has worked with multiple enterprise customers to set up a secure, scalable AI/ML platform built on SageMaker. Globally, cloud providers implemented multiple rounds of price cuts to attract more businesses, which helped the industry scale and lowered the marginal cost of services. DeepSeek-R1, or R1, is an open source language model made by Chinese AI startup DeepSeek that can perform the same text-based tasks as other advanced models, but at a lower cost. Because if anything proves that we do not live in a bipolar world with cleanly demarcated lines between "us" and "them", it's the hybrid fusion at the heart of the Chinese computer. The problem with this is that it introduces a rather ill-behaved discontinuous function with a discrete image at the heart of the model, in sharp contrast to vanilla Transformers, which implement continuous input-output relations.
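The discontinuity mentioned in the last sentence can be seen in a tiny example. This is only an illustrative sketch, assuming top-1 routing over two experts with made-up affinity values; `top1_expert` is a hypothetical helper, not part of any library.

```python
def top1_expert(affinities):
    """Return the index of the expert with the highest affinity."""
    return max(range(len(affinities)), key=lambda i: affinities[i])


eps = 1e-9
before = top1_expert([0.5, 0.5 - eps])  # expert 0 is narrowly ahead
after = top1_expert([0.5, 0.5 + eps])   # expert 1 is narrowly ahead

print(before, after)  # 0 1
```

An arbitrarily small change in the input flips the output between discrete values, so the routing function takes values in a discrete set of expert indices and cannot be continuous at the tie point.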
